While it is early days, and innovation is important, hyperscalers cannot afford to keep throwing money away forever. They need to work out how AI will earn money, and that relies on inference.

For some time, I have been intrigued by the amount of money being spent on model development and AI training compared to the investment in inference. Models are an enabler, and every new model is attempting to open up some new capability, some new application. That’s all good, but training is a huge cost that must be monetized for a long-term healthy industry. The only way to do that is with inference. Inference adds value, and in some cases that can be turned into money. But today, the economics do not line up. AI is a money loser because the rapid pace of model innovation means there is never enough time to monetize a model before a newer, and supposedly better, model comes out.

Many of these ideas were expressed by Michaela Blott, senior fellow at AMD Research, during her keynote at this year’s Design Automation Conference. I would like to capture just a few of the concepts she discussed.

“Firstly, AI is an empirical science, so it’s not fundamentally understood,” Blott said. “That means we run tons of experiments. We try to observe patterns. We try to derive scaling laws. But we don’t fundamentally understand it. And secondly, AI research is generating an information bandwidth that is just truly beyond what individuals can observe. For example, there are more AI research papers than I can ever digest in a day. By the end of the day, what most of us do is follow our favorite AI researchers whom we trust, and they all have different viewpoints. That leads to different belief systems, with bulls and bears, and that’s particularly evident in this whole discussion around artificial general intelligence (AGI).

“The bulls say AGI is possible through scaling models. The bears, on the other hand, have a very different perspective. They say scaling AI through exponentially growing compute and data is not really making the models smarter. They just memorize more. But they don’t really get the capability to generalize, to derive the concepts. Reasoning models are a real step change, but they can still catastrophically fail on simpler tasks. They lack fundamental understanding. There are still a lot of algorithmic challenges.

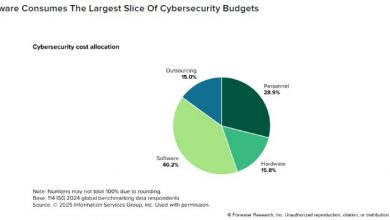

“[In the chart on the left,] you have intelligence, model performance on the y axis, and years on the x axis. Models are getting better and better. But how long will it continue? We all know this is not going to go on forever. It’s like a Moore’s Law chart 10 years ago. And there’s early research demonstrating some of the limits already. [In the chart on the right,] you have correctness on the y axis (higher is better) and the x axis is the number of required steps in the reasoning process. Think of it as the number of chess moves you would take on average, 40 moves until you win, or maybe you’re better than that. The different colors reflect different models. You have standard LLMs, which are gray and light blue. They hover just along the x axis. They’re really poor reasoning models. Way better [are those] in orange and red. But look at what happens with the number of steps. The model performance almost linearly tanks. Reasoning models are great, and they’re so much better than standard LLMs. They scale. But they are limited by the number of reasoning steps.”

Blott moved on to talk about the fairness of models and the danger that they are now feeding on themselves, which leads to a decline in their usefulness. “The next-generation large language model trains on previously published benchmarks. That is their training data, so they basically just memorize the answers rather than understanding how to solve problems. It’s cheating. Furthermore, the more capable the models get, the harder it is to evaluate.”

There is also a decline in the amount of new data being made available, perhaps meaning that researchers are forced to look at multi-modal sources, such as audio and video. At this point, she changed focus and started to talk about the economics of AI.

“Beyond the algorithmic challenges, there are also economic challenges, which ultimately translate into technology challenges, and that’s where it gets really interesting. Revenue is lagging behind. One of the root causes is the high inference cost. There is this race to the bottom for inference pricing. There is a very short time window available to amortize the large R&D cost.

“GPT-4 was estimated to have cost between $50 and $100 million to train. Now you release it, and as soon as there is a better model available, you’re going to have trouble monetizing it. The AI revolution, if you will, is to lower the inference cost.”
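To make the amortization argument concrete, here is a minimal back-of-the-envelope sketch. Only the $100 million training cost comes from the upper end of the estimate quoted above. The function name, the token volume, the price, and the serving costs are all hypothetical values chosen for illustration, not figures from the keynote.

```python
def months_to_break_even(training_cost, tokens_per_month_millions,
                         price_per_million, serving_cost_per_million):
    """Months of inference revenue needed to recover a one-time training cost.

    All inputs are illustrative assumptions. Returns infinity when each
    token is served at or below cost, because the training bill is then
    never recovered.
    """
    margin = price_per_million - serving_cost_per_million
    if margin <= 0:
        return float("inf")
    return training_cost / (margin * tokens_per_month_millions)


# Hypothetical scenario: $100M training cost, one trillion tokens served
# per month, sold at $10 per million tokens.
expensive = months_to_break_even(100e6, 1_000_000, 10.0, 8.0)  # 50.0 months
cheaper = months_to_break_even(100e6, 1_000_000, 10.0, 5.0)    # 20.0 months
print(expensive, cheaper)
```

Under these assumed numbers, cutting the serving cost from $8 to $5 per million tokens shortens the payback period from 50 months to 20. That is the sense in which lower inference cost widens the window in which a model can earn back its training bill before a successor displaces it.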

“Compute on the x axis, model performance on the y axis, but the x axis is logarithmic, and that means exponentially more compute for a linear step change in intelligence.”
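The relationship Blott describes can be sketched in a few lines. The sketch assumes performance grows linearly with the base-10 logarithm of compute. The base of the logarithm, the function name, and every parameter value are assumptions made for illustration, since the keynote chart is not reproduced here.

```python
def compute_needed(target_score, base_score, base_compute, gain_per_decade):
    """Invert score = base_score + gain_per_decade * log10(compute / base_compute).

    gain_per_decade is the assumed number of performance points gained
    each time compute is multiplied by ten.
    """
    decades = (target_score - base_score) / gain_per_decade
    return base_compute * 10 ** decades


# Each additional point of performance multiplies the required compute by 10
# when gain_per_decade is 1.
for score in range(4):
    print(score, compute_needed(score, base_score=0, base_compute=1.0,
                                gain_per_decade=1.0))
```

With these placeholder values, three equal steps in performance require a thousandfold increase in compute, which is the "exponentially more compute for a linear step change" in the quote.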

Both the models and inference have to become rightsized for a given application. “Quantization and sparsity are a massive lever. The biggest advantages will come from architectural innovations. We build custom inference accelerators where the hardware architecture is tailored to the specifics of a neural network for a specific use case, so the hardware mimics the topology. Every layer is present. We allocate the appropriate amount of compute resources.”

Blott introduced several concepts, such as hardware that mimics the data flow, solutions with exactly the right amount of parallelism, and matrix multiplies of the right size, and explained how these, combined, can get you close to 100% efficiency. “There’s a whopping 30X between FP32 and FP4. We basically just adapt the algorithm to use low precision for significant energy efficiency while meeting the minimum accuracy target. Model accuracy depends on a data set, which is fixed. It depends on the data type, which is going to give you diminishing returns, and it depends on your quantization technique. They have improved so much that the latest post-training quantization techniques are brilliant. And finally, there’s sparsity, and neural networks are very sparse. But sparse doesn’t map very well to matrix or vector-based compute units. You’re going to be very inefficient. But again, with data flow architectures, once you unroll your neural network, you can sparsify and trim individual connections, so that makes it way more efficient.”
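As a rough illustration of the two levers mentioned here, the sketch below applies the simplest form of each to a short list of weights: round-to-nearest symmetric integer quantization and magnitude-based pruning. This is not the tool flow Blott described. It uses an integer grid rather than the FP4 floating-point format from the quote, and the function names and sample weights are hypothetical.

```python
def quantize_symmetric(weights, bits):
    """Snap weights to a signed integer grid of `bits` bits and map them back.

    Returns the dequantized weights and the grid spacing (scale).
    Round-to-nearest is the most basic post-training scheme, used here
    only to show the accuracy cost of fewer bits.
    """
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    levels = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return [q * scale for q in levels], scale


def prune_by_magnitude(weights, keep_fraction):
    """Zero every weight smaller in magnitude than the k-th largest."""
    k = max(1, round(len(weights) * keep_fraction))
    threshold = sorted((abs(w) for w in weights), reverse=True)[k - 1]
    return [w if abs(w) >= threshold else 0.0 for w in weights]


def max_error(original, approximated):
    """Largest absolute difference between two equal-length lists."""
    return max(abs(a - b) for a, b in zip(original, approximated))


weights = [0.9, -0.05, 0.4, 0.01]  # hypothetical layer weights
w8, _ = quantize_symmetric(weights, 8)
w4, _ = quantize_symmetric(weights, 4)
print("8-bit error:", max_error(weights, w8))
print("4-bit error:", max_error(weights, w4))
print("pruned:", prune_by_magnitude(weights, 0.5))
```

The 4-bit grid is much coarser than the 8-bit one, so its worst-case error is larger. That is the trade-off between energy efficiency and a minimum accuracy target. The pruned list keeps only the two largest weights, and a data flow design can drop the zeroed connections from the circuit entirely instead of multiplying by zero.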

We have seen many companies follow similar steps to make inference solutions efficient, but that is only one half of the challenge. “But the problem is agility. The pace of innovation is accelerating. The time window that we have to customize the circuit is basically shrinking down to nothing. We built end-to-end tool flows within our projects. This is really automating quantization, sparsity, data flow generation, and training. What you get out of this is a netlist which you can run on an FPGA.”

Blott believes that what is needed is a productivity booster, being able to customize and optimize for specific workloads instead of building more general-purpose devices. “The biggest wave is yet to come, and that’s in the embedded space. This is where and how AI is going to go truly pervasive. Center stage is going to be the inference optimizations that will get us to revenue-generating AI deployment, and that will be the factor that will enable the AI revolution.”

Where do these tools come from? “Design automation today is more important than it ever was. I believe that to keep up with the rate of innovation, to provide the necessary agility, leveraging AI becomes a necessity in the future.”

AI may be creating the problem, with problems developing faster than solutions can be created, but Blott believes using AI to solve that problem is the best approach. In my opinion, model development needs to become more rational and recognize that, in some cases, small advances are no longer worth the enormous cost. Pursuing a single line of advancement without looking for other possibilities will lead to sub-optimal solutions in the future.