As we dive into 2025, GPU rendering has become the cornerstone of modern visual computing, powering everything from blockbuster games to intricate 3D models in design studios. Whether you’re a game developer optimizing for frame rates or a 3D artist pushing the boundaries of realism, understanding GPU rendering and its related techniques is essential. In this comprehensive guide, we’ll explore the fundamentals, advanced methods, and latest innovations in graphics rendering, real-time rendering, ray tracing, and shader programming. Backed by expert insights and up-to-date benchmarks, this article will equip you with the knowledge to elevate your projects and stay ahead in a rapidly evolving field.

What is GPU Rendering and Why It Matters in 2025

GPU rendering refers to the process of using a Graphics Processing Unit (GPU) to generate images, animations, or videos from 3D models. Traditional CPU rendering relies on a relatively small number of powerful cores. A GPU instead packs thousands of simpler cores built for parallel processing, which makes it ideal for calculations that repeat across many vertices and pixels, such as shading, texturing, and lighting. This hardware-accelerated approach has revolutionized industries, enabling faster render times and higher-quality outputs.
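
Each pixel's color can usually be computed independently of every other pixel, and that is why rendering maps so well onto a GPU's data-parallel design. The minimal Python sketch below illustrates the programming model only. `shade` is a hypothetical per-pixel function playing the role of one GPU thread, and the thread pool merely stands in for the GPU's many cores (CPython threads will not actually speed this up).

```python
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT = 8, 4  # a tiny illustrative framebuffer

def shade(pixel):
    """Hypothetical per-pixel 'kernel': its result depends only on its own coordinate."""
    x, _ = pixel
    return x / (WIDTH - 1)  # simple left-to-right gradient from 0.0 to 1.0

pixels = [(x, y) for y in range(HEIGHT) for x in range(WIDTH)]

# CPU-style loop: pixels are shaded one after another.
sequential = [shade(p) for p in pixels]

# Data-parallel style: the same kernel is mapped over every pixel by a pool of workers.
# (This stands in for GPU threads; CPython threads will not make it faster.)
with ThreadPoolExecutor(max_workers=4) as pool:
    parallel = list(pool.map(shade, pixels))

# Because no pixel depends on another, execution order does not change the image.
assert parallel == sequential
```

Because `shade` reads nothing but its own input, the work can be split across any number of workers in any order. A GPU relies on exactly this property to run thousands of threads at once.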

In 2025, GPU rendering is more accessible than ever, thanks to advancements in hardware from NVIDIA, AMD, and Intel. For instance, Unreal Engine renders photorealistic scenes in real time, and Blender’s Cycles and Eevee engines can both use the GPU. But why choose GPU over CPU? A GPU can run thousands of threads simultaneously, which can cut render times for large scenes from hours to minutes. The advantages are speed and efficiency for visual effects. The drawbacks are higher power consumption, the need for compatible software, and the fact that a scene generally has to fit in the card’s video memory (VRAM).

Best GPUs for Rendering in 2025

Selecting the right GPU is crucial for optimal performance. Based on recent benchmarks, here are top recommendations:

- NVIDIA GeForce RTX 5090: The flagship for enthusiasts, offering unmatched ray tracing and AI acceleration. Ideal for high-end 3D modeling in Blender or Cinema 4D.

- AMD Radeon RX 9070 XT: Best all-around value, with strong performance in both games and rendering tasks. Priced at around $709, it’s a popular pick among game developers.

- NVIDIA GeForce RTX 5080: Balances cost and power, excelling in 4K rendering with DLSS support.

| GPU Model | Key Features | Best For | Approximate Price (USD) |
|---|---|---|---|
| RTX 5090 | 32GB GDDR7, Advanced RT Cores | Professional Rendering | $1,999 |
| RX 9070 XT | 16GB GDDR6, FSR 4 Upscaling | Gaming & 3D Design | $709 |
| RTX 5080 | 16GB GDDR7, AI-Enhanced Tools | Mid-Range Workflows | $999 |

For stability in applications like Blender, NVIDIA remains the go-to choice. Blender’s Cycles renderer supports NVIDIA cards through both CUDA and OptiX, and OptiX uses the RT cores for hardware-accelerated ray tracing. Always benchmark your specific workflow. Tools like UL Benchmarks can help compare real-world performance.

Fundamentals of Graphics Rendering: From Pixels to Photorealism

Graphics rendering is the broader process of converting data into visual images, encompassing both 2D and 3D elements. It involves techniques like rasterization and vector graphics rendering. Rasterization converts the triangles that make up a 3D model into the pixels they cover on screen. Vector graphics rendering produces resolution-independent 2D designs.

The evolution of graphics rendering traces back to the 1960s, when Ivan Sutherland’s Sketchpad (1963) laid the groundwork for interactive computer graphics. In the 1980s, research such as James Kajiya’s rendering equation (1986) provided the foundations of physically based rendering, which simulates real-world light behavior for more accurate visuals. Today, in 2025, we’ve progressed from basic wireframes to hyper-realistic environments, driven by GPU advancements.

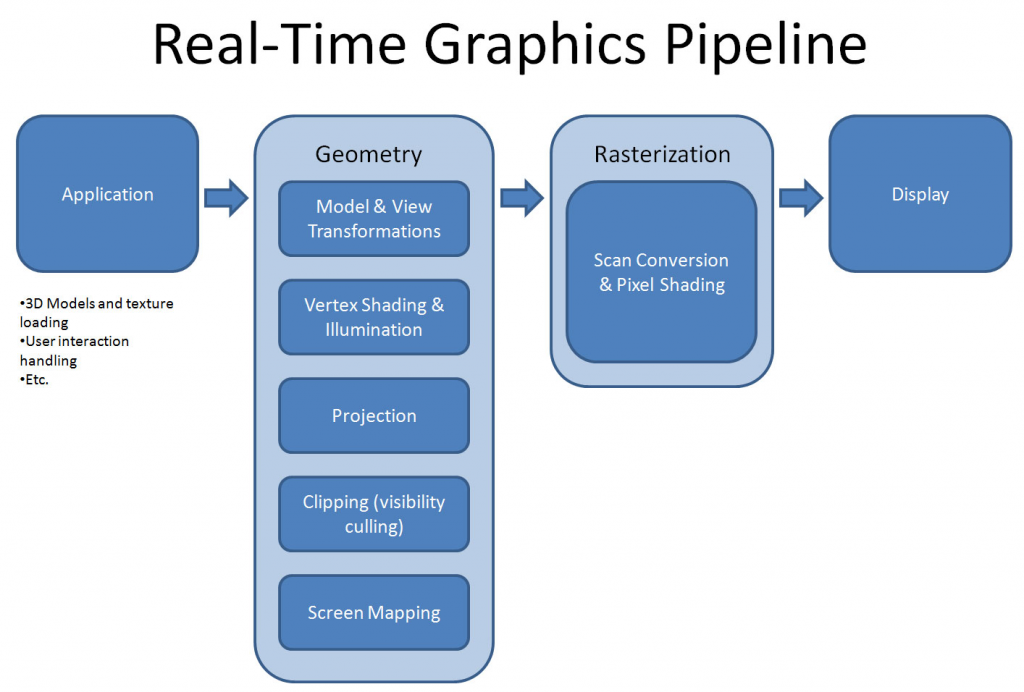

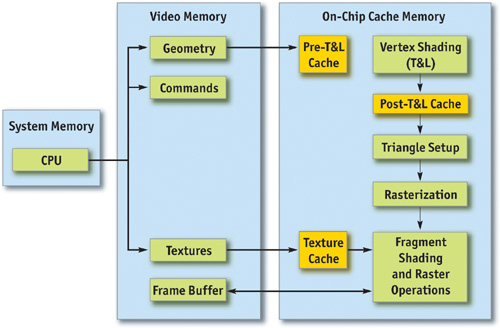

Rendering Pipelines Explained

A typical graphics rendering pipeline includes stages such as geometry processing (including vertex shading), rasterization, and pixel (fragment) shading. In tools like Unity or Maya, this pipeline turns a model’s vertex data into the final image on screen. For beginners, start with the basics: load a 3D model, apply textures, and illuminate it with a lighting model such as Phong or Lambertian.
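
The Lambertian and Phong lighting models named above fit in a few lines. This is a minimal sketch that assumes a single white light of unit intensity and uses illustrative material coefficients (`ka`, `kd`, `ks`, `shininess`) chosen for the example. In a real renderer this math runs per pixel in a fragment shader rather than in Python.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def reflect(incident, normal):
    """Mirror an incident direction (pointing toward the surface) about the normal: R = I - 2(N.I)N."""
    d = dot(normal, incident)
    return tuple(i - 2 * d * n for i, n in zip(incident, normal))

def phong(normal, to_light, to_viewer, ka=0.1, kd=0.7, ks=0.2, shininess=32):
    """Ambient + Lambertian diffuse + Phong specular for one white light of intensity 1."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_viewer)
    diffuse = max(dot(n, l), 0.0)                 # Lambert's cosine law
    r = reflect(tuple(-c for c in l), n)          # reflected light direction
    specular = max(dot(r, v), 0.0) ** shininess if diffuse > 0 else 0.0
    return ka + kd * diffuse + ks * specular

print(phong((0, 0, 1), (0, 0, 1), (0, 0, 1)))    # light and viewer head-on: brightest case
print(phong((0, 0, 1), (0, 0, -1), (0, 0, 1)))   # light behind the surface: ambient only
```

The Lambertian term alone gives matte shading. The specular term adds the highlight whose tightness is controlled by `shininess`.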

Key sub-themes:

- Lighting Models: From simple ambient lighting to advanced global illumination.

- Rasterization vs. Ray Tracing: Rasterization is faster for real-time apps, while ray tracing adds realism.
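
Rasterization can be sketched with edge functions, a common way to test whether a pixel center lies inside a triangle. This minimal Python version assumes 2D screen-space vertices in a fixed winding order and samples each pixel at its center. GPUs run the same kind of test in fixed-function hardware, massively in parallel and with tie-breaking rules for shared edges (such as the top-left rule) that this sketch omits.

```python
def edge(ax, ay, bx, by, px, py):
    """Twice the signed area of triangle (a, b, p); its sign says which side of edge a->b p lies on."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)

def rasterize(v0, v1, v2, width, height):
    """Return the set of pixels whose centers fall inside the triangle (v0, v1, v2)."""
    covered = set()
    for y in range(height):
        for x in range(width):
            px, py = x + 0.5, y + 0.5          # sample at the pixel center
            w0 = edge(*v1, *v2, px, py)
            w1 = edge(*v2, *v0, px, py)
            w2 = edge(*v0, *v1, px, py)
            # For this winding, all three edge functions are non-negative inside the triangle.
            if w0 >= 0 and w1 >= 0 and w2 >= 0:
                covered.add((x, y))
    return covered

pixels = rasterize((0, 0), (8, 0), (0, 8), width=8, height=8)
for y in range(8):
    print("".join("#" if (x, y) in pixels else "." for x in range(8)))
```

The printed grid shows the staircase outline that anti-aliasing techniques later smooth out.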

Real-Time Rendering: Speed and Interactivity in 2025

Real-time rendering focuses on generating images at high speeds—typically 30-60 frames per second—for interactive applications like games, VR, and simulations. In 2025, trends emphasize AI integration and cloud-based processing for even faster outputs.
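
A target frame rate translates directly into a per-frame time budget, and both the CPU and the GPU work for each frame have to fit inside it. The quick calculation below covers the 30 and 60 FPS targets mentioned above, plus 90 and 120 FPS as assumed targets for VR and high-refresh displays. `fits_budget` is a hypothetical helper for checking a set of measured stage timings.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to produce one frame at the given target frame rate."""
    return 1000.0 / fps

def fits_budget(stage_times_ms, fps: float) -> bool:
    """True if the summed stage timings (in ms) fit within one frame at `fps`."""
    return sum(stage_times_ms) <= frame_budget_ms(fps)

for target in (30, 60, 90, 120):
    print(f"{target:>3} FPS -> {frame_budget_ms(target):.1f} ms per frame")

# Hypothetical measured timings (ms) for simulation, rendering, and post-processing.
print(fits_budget([4.0, 9.5, 2.0], 60))
```

Going from 60 to 120 FPS halves the budget, which is why high-refresh and VR targets demand aggressive optimization.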

Trends and Optimizations

This year, real-time rendering is transforming architectural visualization (archviz) and gaming, with tools like Unreal Engine 5’s Nanite virtualized geometry system handling massive amounts of geometric detail. Key trends include:

- AI-Powered Optimization: Smart render farms use AI to predict and accelerate scenes.

- VR/AR Integration: Immersive experiences demand low-latency rendering, with engines like Twinmotion leading the way.

- Mobile Optimization: Frame rate capping, which also limits heat and battery drain, and carefully chosen anti-aliasing settings help keep performance smooth on phones and tablets.

For mobile developers working in Unity, focus on reducing draw calls (for example, through batching) and on level-of-detail (LOD) systems to help maintain 60 FPS.
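
A level-of-detail system swaps in simpler meshes as objects move away from the camera, so distant objects cost fewer triangles. The sketch below picks an LOD level by camera distance. The switch distances and triangle counts are hypothetical. Unity’s LOD Group component actually switches on an object’s screen-relative height rather than raw distance, and this sketch ignores that.

```python
from bisect import bisect_right

# Hypothetical switch distances (scene units): < 10 -> LOD0, 10-30 -> LOD1, 30-80 -> LOD2, >= 80 -> LOD3.
LOD_DISTANCES = [10.0, 30.0, 80.0]
# Illustrative triangle counts for each LOD mesh, from most to least detailed.
LOD_TRIANGLES = [20_000, 5_000, 1_200, 300]

def select_lod(distance: float) -> int:
    """Return the LOD index (0 = most detailed) for an object at `distance` from the camera."""
    return bisect_right(LOD_DISTANCES, distance)

def scene_triangles(distances) -> int:
    """Total triangles submitted for objects at the given camera distances."""
    return sum(LOD_TRIANGLES[select_lod(d)] for d in distances)

objects = [5.0, 12.0, 45.0, 150.0, 300.0]
print(scene_triangles(objects), "triangles with LOD vs", len(objects) * LOD_TRIANGLES[0], "without")
```

Only the nearest object pays for the full-detail mesh. That kind of saving makes a steady frame rate achievable on mobile hardware.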

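The central challenge is balancing quality with speed. Profiling tools such as Unreal Insights in Unreal Engine or the Unity Profiler show where each frame’s time goes, so you can optimize the most expensive work first.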

Future outlook: over the next few years, expect AI-driven techniques to push real-time rendering in consumer apps ever closer to photorealism.

Ray Tracing: Achieving Photorealism in Gaming and Film

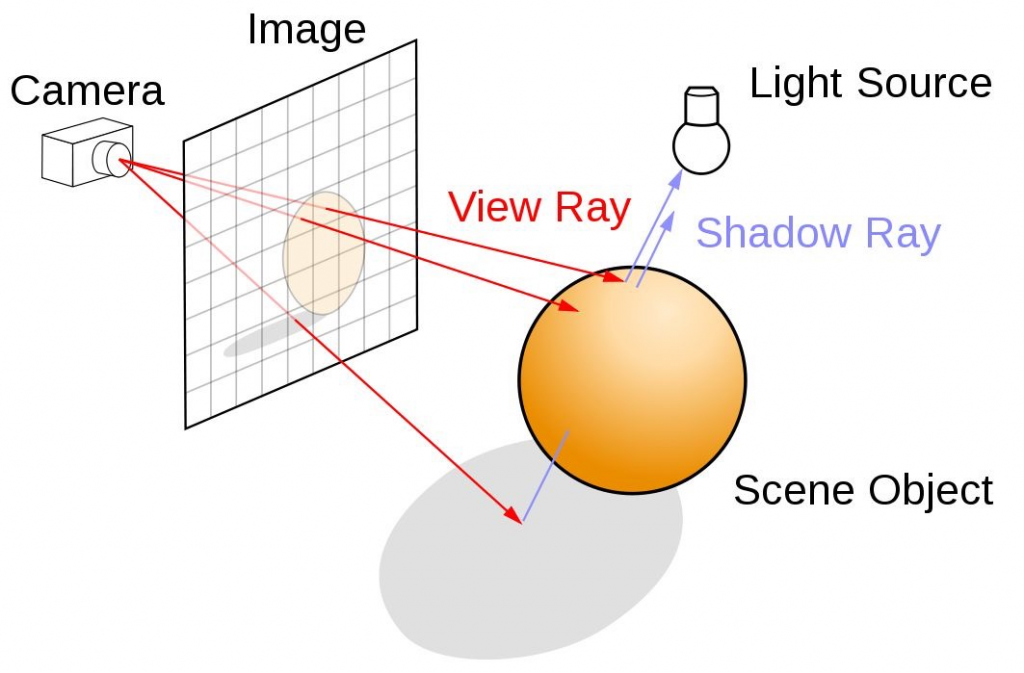

Ray tracing simulates the paths of light rays to create realistic reflections, refractions, and shadows, and modern GPUs include dedicated hardware to accelerate it. In 2025, it is no longer a luxury but a standard feature in high-end gaming and VFX.

Ray Tracing in Practice

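Basics: rays are cast from the camera through each pixel into the scene. Where a ray hits a surface, further rays are traced toward lights and along reflection or refraction directions, and the results determine the pixel’s color. NVIDIA’s RTX series, including the 50-series, handles this efficiently with dedicated RT cores that accelerate ray-triangle and bounding-volume intersection tests.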
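
The first step described above, casting a ray from the camera, can be sketched against the simplest possible scene: a single sphere. This minimal sketch assumes a pinhole camera at the origin looking down the -z axis, with a hypothetical 60° vertical field of view. It generates one primary ray per pixel and solves the ray-sphere quadratic. It stops at the first hit, whereas a full ray tracer would spawn shadow, reflection, and refraction rays from that point.

```python
import math

def camera_ray(x, y, width, height, fov_deg=60.0):
    """Primary ray through the center of pixel (x, y) for a pinhole camera at the origin looking down -z."""
    aspect = width / height
    scale = math.tan(math.radians(fov_deg) / 2)           # half-height of the image plane at z = -1
    px = (2 * (x + 0.5) / width - 1) * aspect * scale
    py = (1 - 2 * (y + 0.5) / height) * scale              # flip so +y points up
    length = math.sqrt(px * px + py * py + 1)
    return (0.0, 0.0, 0.0), (px / length, py / length, -1 / length)

def ray_sphere(origin, direction, center, radius):
    """Distance t to the nearest hit in front of the origin along a normalized ray, or None."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = sum(d * v for d, v in zip(direction, oc))          # half of the quadratic's linear term
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None                                         # the ray misses the sphere
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):                        # try the nearer intersection first
        if t > 1e-6:
            return t
    return None

origin, direction = camera_ray(1, 1, 3, 3)                  # center pixel of a 3x3 image
print(ray_sphere(origin, direction, center=(0.0, 0.0, -5.0), radius=1.0))  # 4.0
```

The returned distance locates the hit point, `origin + t * direction`. Shading and secondary rays would start from there.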

In gaming, ray tracing enhances immersion in titles like Cyberpunk 2077, especially in its path-traced Ray Tracing: Overdrive mode. It demands powerful hardware, though, so expect frame rate drops unless you enable upscaling such as DLSS. In film, it is used for offline, pre-rendered frames, where image quality matters more than render speed.
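
Upscalers such as DLSS recover performance by rendering fewer pixels internally and then reconstructing the output resolution. The arithmetic below shows how many pixels, and therefore roughly how many primary rays, each preset shades at 4K output. The per-axis scale factors are commonly cited approximations, assumed here for illustration. They are not official values for any particular upscaler version.

```python
# Assumed per-axis render-scale factors for typical upscaler presets (illustrative only).
PRESETS = {"Quality": 2 / 3, "Balanced": 0.58, "Performance": 0.5, "Ultra Performance": 1 / 3}

def internal_resolution(out_w: int, out_h: int, scale: float):
    """Internal render resolution for a given output resolution and per-axis scale."""
    return round(out_w * scale), round(out_h * scale)

out_w, out_h = 3840, 2160  # 4K output
for name, scale in PRESETS.items():
    w, h = internal_resolution(out_w, out_h, scale)
    share = (w * h) / (out_w * out_h)
    print(f"{name:<17} renders {w}x{h} ({share:.0%} of output pixels)")
```

Because the scale applies to both axes, halving each dimension leaves only a quarter of the pixels to trace. That is why upscaling pairs so naturally with ray tracing.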

Tools: Unreal Engine supports hardware ray tracing on RTX-class GPUs. For beginners, Blender’s Cycles renderer is a simple way to start experimenting with path tracing.
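
Path tracers such as Cycles estimate lighting by averaging many randomly sampled light paths, a technique called Monte Carlo integration. The sketch below shows that core idea on the simplest possible case: the irradiance at a surface point under a uniform sky of radiance 1, whose exact value is π. It is an illustrative toy that assumes uniform hemisphere sampling. Production path tracers use importance sampling and trace full multi-bounce paths.

```python
import math
import random

def sample_hemisphere(rng):
    """Uniformly sample a direction on the unit hemisphere around +z."""
    z = rng.random()                          # cos(theta) is uniform under uniform hemisphere sampling
    r = math.sqrt(max(0.0, 1.0 - z * z))
    phi = 2.0 * math.pi * rng.random()
    return (r * math.cos(phi), r * math.sin(phi), z)

def estimate_irradiance(sky_radiance, samples, seed=0):
    """Monte Carlo estimate of E = integral of L(w) * cos(theta) over the hemisphere."""
    rng = random.Random(seed)
    pdf = 1.0 / (2.0 * math.pi)               # probability density of uniform hemisphere sampling
    total = 0.0
    for _ in range(samples):
        d = sample_hemisphere(rng)
        total += sky_radiance(d) * d[2] / pdf  # d[2] is cos(theta) relative to the +z normal
    return total / samples

uniform_sky = lambda direction: 1.0
print(estimate_irradiance(uniform_sky, 100_000))  # converges toward pi as samples grow
```

With few samples the estimate is noisy. Low-sample path-traced renders look grainy for the same reason, and denoisers exist to clean them up.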

Q&A: Is ray tracing worth it? Yes, for realism, but pair it with upscaling for performance.

Shader Programming

Shader programming allows developers to write code that runs on the GPU, controlling how pixels and vertices are rendered. Languages like GLSL (OpenGL) and HLSL (DirectX) are staples.

Getting Started with Shaders

Write custom shaders for effects like glow or distortion. In Unity’s Shader Graph, the Vertex context handles transformations and the Fragment context handles coloring.
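
At its core, the transformation work of a vertex shader is multiplying each vertex position by a combined model-view-projection (MVP) matrix. This minimal sketch assumes OpenGL conventions: right-handed view space, a camera looking down -z, and NDC depth in [-1, 1]. It also assumes identity model and view matrices. It builds a perspective matrix and then applies the perspective divide that fixed-function hardware performs after the vertex shader. Direct3D uses a [0, 1] depth range instead.

```python
import math

def perspective(fov_y_deg, aspect, near, far):
    """OpenGL-style perspective projection matrix as row-major nested lists."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2)
    return [
        [f / aspect, 0, 0, 0],
        [0, f, 0, 0],
        [0, 0, (far + near) / (near - far), 2 * far * near / (near - far)],
        [0, 0, -1, 0],
    ]

def mat_vec(m, v):
    """Multiply a 4x4 matrix by a 4-component vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]

def vertex_stage(position, mvp):
    """Transform to clip space (the vertex shader's job), then divide by w to get NDC."""
    clip = mat_vec(mvp, [*position, 1.0])
    w = clip[3]
    return tuple(c / w for c in clip[:3])

mvp = perspective(90.0, 1.0, near=1.0, far=100.0)  # model and view assumed to be identity
print(vertex_stage((0.0, 0.0, -1.0), mvp))    # on the near plane: NDC depth is about -1
print(vertex_stage((0.0, 0.0, -100.0), mvp))  # on the far plane: NDC depth is about +1
```

Points outside the [-1, 1] NDC cube are clipped. Those inside are passed on to rasterization.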

GLSL vs. HLSL: GLSL is cross-platform, ideal for web and mobile; HLSL shines in Windows ecosystems.

Advanced: use WGSL, the shading language of WebGPU, for WebGPU projects. Many tutorials start with simple scanline effects.
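
A scanline effect shows clearly how a fragment shader works: the same small function runs for every pixel, using only that pixel’s position and color. The sketch below mirrors that logic on the CPU in Python rather than GLSL. In an actual GLSL fragment shader, the same arithmetic would read the built-in `gl_FragCoord.y` and use the built-in `cos`. The line spacing and darkening strength are assumed values.

```python
import math

def scanline_fragment(frag_y, base_color, line_spacing=4.0, strength=0.25):
    """CPU stand-in for a scanline fragment shader: darken the color in a repeating
    vertical pattern based on the fragment's screen-space y coordinate."""
    # 0.5 + 0.5*cos(...) oscillates between 1 and 0 once every `line_spacing` pixels.
    wave = 0.5 + 0.5 * math.cos(2.0 * math.pi * frag_y / line_spacing)
    factor = 1.0 - strength * wave            # darkest rows keep (1 - strength) of the color
    return tuple(c * factor for c in base_color)

# Emulate four rows of a white image; +0.5 matches gl_FragCoord's pixel-center convention.
for row in range(4):
    r, g, b = scanline_fragment(row + 0.5, (1.0, 1.0, 1.0))
    print(f"row {row}: brightness {r:.2f}")
```

On a GPU, this function would run once per fragment in parallel, and the repeating brightness pattern would appear as horizontal lines across the screen.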

Code Example (GLSL Fragment Shader):

Conclusion: Mastering Rendering for Future-Proof Projects

From GPU rendering’s speed to shader programming’s creativity, these techniques form the backbone of visual innovation in 2025. By combining ray tracing with real-time optimizations, you can create stunning, efficient graphics. Stay updated with SIGGRAPH conferences and experiment hands-on, because the future of rendering is brighter (and faster) than ever. If you’re ready to upgrade, start with a top-tier GPU and dive into free tutorials today.