Whether you’re searching for “why are GPUs used for AI,” “what is a GPU,” or “why does AI need GPUs,” this article answers each question in turn, with practical advice and a hardware comparison to help you apply what you learn.

Table of Contents

- What is a GPU? A Beginner’s Guide to Graphics Processing Units

- The Essential Role of GPUs in Modern AI Development

- How GPUs Power and Support AI Technologies

- Why AI Absolutely Needs GPUs: Speed, Scale, and Beyond

- Why Choose GPUs for AI? Unpacking the Tech Behind the Boom

- Best GPUs for AI in 2025: Recommendations and Comparisons

- Conclusion: The Future of GPUs in AI

What is a GPU? A Beginner’s Guide to Graphics Processing Units

If you’re new to computing, understanding “what is a GPU” is the foundation for grasping its role in AI. A Graphics Processing Unit (GPU) is a specialized electronic circuit designed to rapidly manipulate and alter memory to accelerate the creation of images in a frame buffer intended for output to a display device. Originally developed for rendering graphics in video games and animations, GPUs have evolved into powerful parallel processors.

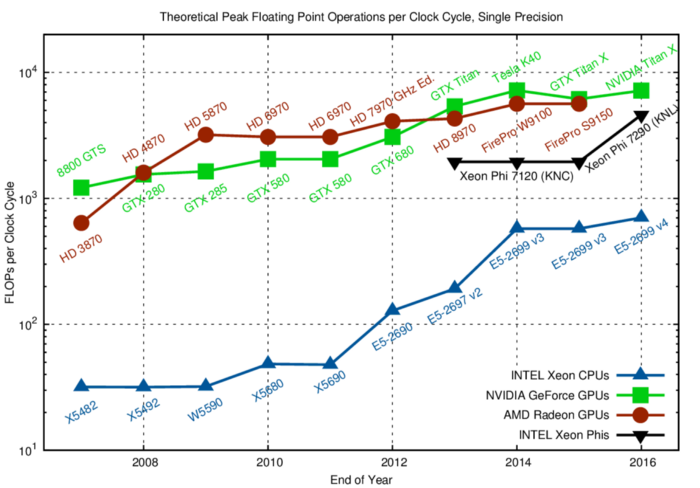

Unlike a Central Processing Unit (CPU), which handles general tasks sequentially with a few cores, a GPU features thousands of smaller cores optimized for handling multiple operations simultaneously. This parallel architecture makes GPUs ideal for data-intensive tasks.
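
The difference can be sketched in a few lines of Python. This is only a structural illustration, not GPU code: the function names and the `workers` parameter are hypothetical, and Python threads will not make this particular loop faster. The point is that each element is independent, so the work can be split into pieces that never need to coordinate.

```python
from concurrent.futures import ThreadPoolExecutor

def scale_sequential(values, factor):
    """CPU-style: one worker walks the whole list in order."""
    return [v * factor for v in values]

def scale_in_chunks(values, factor, workers=4):
    """GPU-style idea: split the data so many workers handle pieces at once."""
    # Ceiling division so every element lands in exactly one chunk.
    chunk_size = max(1, (len(values) + workers - 1) // workers)
    chunks = [values[i:i + chunk_size] for i in range(0, len(values), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the input chunks.
        results = pool.map(lambda chunk: [v * factor for v in chunk], chunks)
        return [v for chunk in results for v in chunk]

data = list(range(10))
# Both approaches give the same answer; only the way the work is divided differs.
assert scale_sequential(data, 2) == scale_in_chunks(data, 2)
```

A real GPU applies the same idea at a much finer grain, with one lightweight thread per element rather than a handful of chunks.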

To visualize this, consider a simple analogy: A CPU is like a master chef preparing one dish at a time, while a GPU is an army of line cooks working on hundreds of ingredients concurrently. Dedicated graphics hardware dates back to 1970s arcade games, and it took off in the 1990s with 3D accelerators from companies like NVIDIA and ATI (acquired by AMD in 2006).

Exploring the GPU Architecture

Key components of a GPU include:

- Cores: Thousands of Arithmetic Logic Units (ALUs) for parallel computations.

- Memory: High-bandwidth GDDR or HBM for fast data access.

- Shaders: Programmable units for graphics and general computing.

GPUs aren’t just for gaming anymore—they power everything from cryptocurrency mining to scientific simulations, and crucially, AI.

The Essential Role of GPUs in Modern AI Development

Diving into “why are GPUs used for AI,” it’s clear that GPUs play an indispensable role in modern AI development. AI models, especially deep learning neural networks, require processing massive datasets through billions of mathematical operations like matrix multiplications. GPUs excel here because their parallel processing handles these operations far more efficiently than CPUs.
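
To see why matrix multiplication suits parallel hardware, here is a minimal pure-Python sketch. The helper names and the 4096 x 4096 size are assumptions chosen for illustration; real frameworks hand this work to optimized GPU kernels rather than Python loops.

```python
def matmul(a, b):
    """Multiply an m x k matrix by a k x n matrix (both given as lists of rows)."""
    m, k, n = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    # Each out[i][j] depends only on row i of a and column j of b,
    # so all m * n outputs could in principle be computed at the same time.
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
            for i in range(m)]

def matmul_flops(m, k, n):
    """Approximate operation count: one multiply and one add per inner step."""
    return 2 * m * k * n

a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul(a, b))                     # [[19, 22], [43, 50]]
print(matmul_flops(4096, 4096, 4096))   # 137438953472
```

A single multiplication of two 4096 x 4096 matrices already costs about 137 billion operations, and a neural network performs many such multiplications per training step.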

In AI training, GPUs shorten each training run, which can cut training times from weeks to days or even hours depending on the model and hardware. For instance, large language models (LLMs) like those powering ChatGPT rely on clusters of GPUs for training on terabytes of data.

Common AI use cases where GPUs shine:

- Computer Vision: Processing images for object detection.

- Natural Language Processing: Training models on text corpora.

- Generative AI: Creating images or text with tools like Stable Diffusion.

Without GPUs, AI innovation would stall due to computational bottlenecks.

How GPUs Power and Support AI Technologies

Exploring “what is GPU and why does it support AI,” we see that GPUs support AI through an architecture tailored for parallel workloads. For highly parallel numerical work, GPUs complete calculations faster and with less energy per operation than CPUs, which benefits both AI training and inference.

Key ways GPUs support AI:

- Parallel Processing: Thousands of cores handle simultaneous operations, perfect for neural network layers.

- High Memory Bandwidth: Quick data transfer reduces latency in data-heavy AI tasks.

- Specialized Software: Platforms like CUDA (NVIDIA) and ROCm (AMD) give AI frameworks and libraries optimized access to the GPU.
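
In day-to-day code, this support usually surfaces as a device choice inside a framework. The sketch below assumes PyTorch as the framework; `pick_device` is a hypothetical helper, and it falls back to the CPU when PyTorch or a supported GPU is missing.

```python
def pick_device():
    """Return "cuda" if PyTorch can see a GPU, otherwise "cpu"."""
    try:
        import torch  # optional dependency; assumed installed for GPU use
    except ImportError:
        return "cpu"
    # ROCm builds of PyTorch also report AMD GPUs through torch.cuda.
    return "cuda" if torch.cuda.is_available() else "cpu"

device = pick_device()
print(f"Running on: {device}")
```

With PyTorch installed, the returned string can be passed to calls such as `model.to(device)` so the same script runs with or without a GPU.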

In practice, GPUs enable real-time AI applications, such as autonomous vehicles analyzing sensor data or healthcare AI processing medical images.

Why AI Absolutely Needs GPUs: Speed, Scale, and Beyond

On the question of “why does AI need GPU”: AI can run on CPUs, but GPUs are essential for speed and scalability in practical applications. AI workloads demand vast parallel computations, and GPUs can be roughly 10-100 times faster than CPUs for machine learning tasks, depending on the model and hardware.

Reasons AI needs GPUs:

- Speed: Faster training allows quicker iterations and deployments.

- Scale: Handling large models with billions of parameters.

- Efficiency: Lower energy consumption for equivalent performance.

For example, training a model on a CPU might take days, but on a GPU, it’s hours—critical for industries like finance or research.
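
The Scale point above can be made concrete with a back-of-the-envelope memory estimate. This is a rough sketch under stated assumptions: it counts only the model weights, the 7-billion and 70-billion parameter models are hypothetical examples, and the helper names are illustrative.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

def weights_gb(num_params, dtype="fp16"):
    """Memory for the weights alone, in GB (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

def fits(num_params, vram_gb, dtype="fp16"):
    """True if the weights fit; activations and optimizer state need extra room."""
    return weights_gb(num_params, dtype) <= vram_gb

print(weights_gb(7e9, "fp16"))   # 14.0
print(fits(7e9, 24))             # True
print(fits(70e9, 24))            # False
```

By this estimate, the weights of a 7-billion-parameter model in 16-bit precision fit on a 24GB card, while a 70-billion-parameter model needs either lower precision or several GPUs. Training needs considerably more memory than this weights-only figure suggests.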

Why Choose GPUs for AI? Unpacking the Tech Behind the Boom

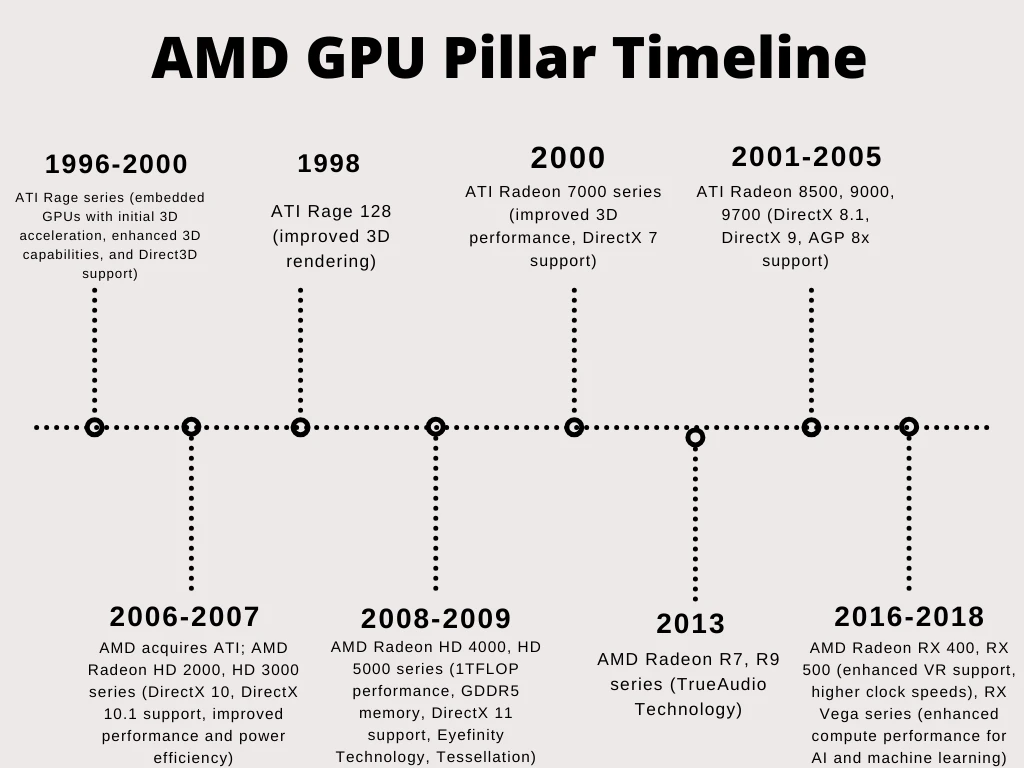

For “why GPUs for AI,” the choice boils down to their evolution from graphics to general-purpose computing. The history of GPUs in AI dates back to the 2000s, when NVIDIA released CUDA in 2007 and made it practical to program GPUs for non-graphics work. A pivotal moment came in 2012, when AlexNet, a neural network trained on GPUs, won the ImageNet challenge and sparked the deep learning boom.

Pros of choosing GPUs for AI:

- Versatility: Handles training, inference, and more.

- Ecosystem: Rich support from frameworks such as TensorFlow and PyTorch.

- Future-Proofing: Ongoing advancements like tensor cores.

Cons include high cost and power usage, but the benefits outweigh them for serious AI work.

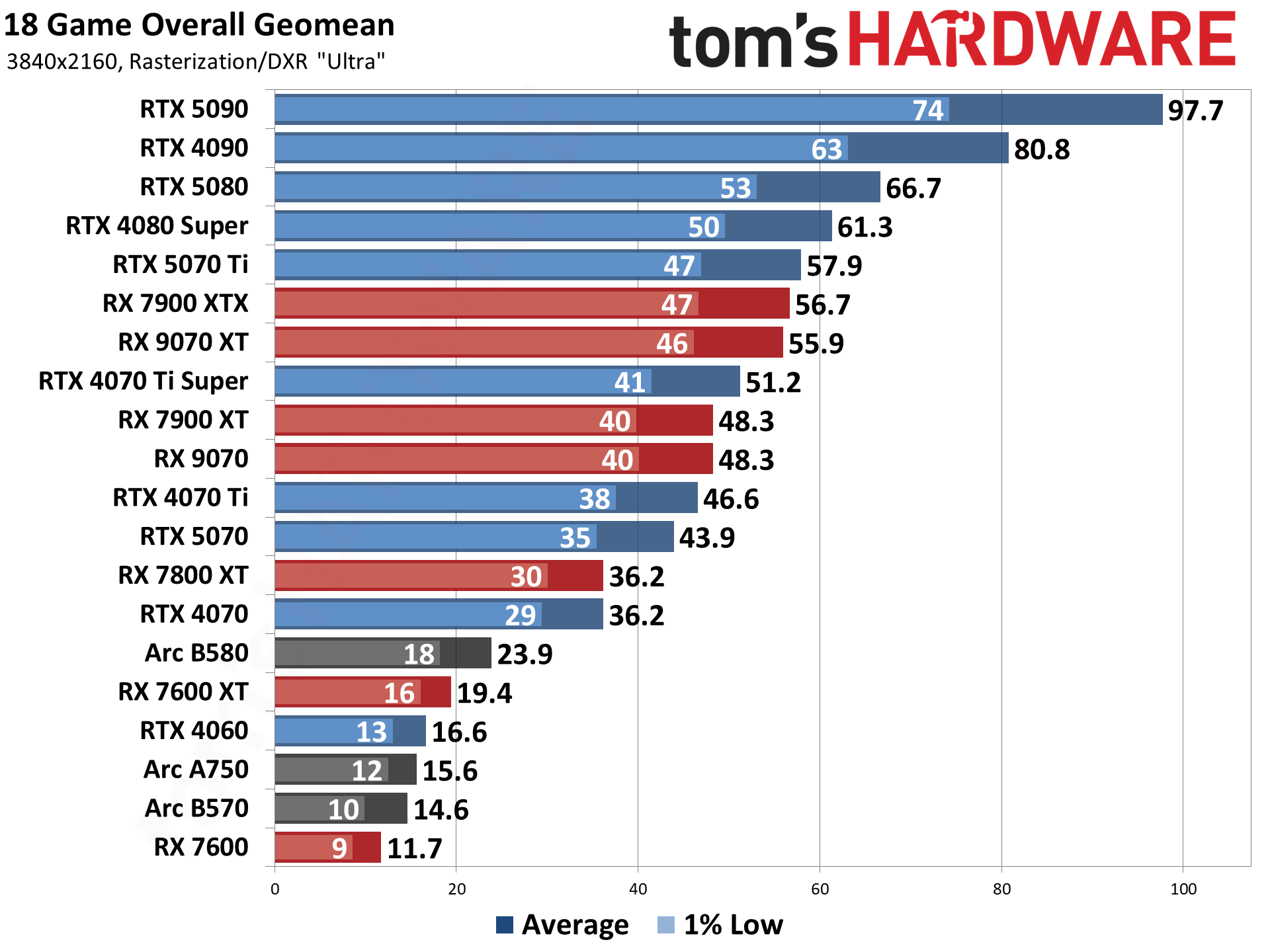

Best GPUs for AI in 2025: Recommendations and Comparisons

Based on 2025 trends, here are top GPUs for AI, selected for VRAM, performance, and compatibility.

| GPU Model | VRAM | Key Features | Best For | Price Range (USD) |
|---|---|---|---|---|
| NVIDIA RTX A6000 | 48GB | High precision, CUDA support | Enterprise AI training | $4,000+ |
| NVIDIA A100 | 80GB | Massive bandwidth, multi-instance GPU | Large-scale ML | $10,000+ |
| NVIDIA RTX 4090 | 24GB | Consumer-grade power, DLSS AI | Home AI projects | $1,500-2,000 |
| AMD Radeon RX 9070 XT | 16GB | High efficiency, ROCm | Cost-effective AI | $700-900 |
| NVIDIA H200 | 141GB | Next-gen for LLMs | Data centers | Enterprise pricing |

These recommendations stem from benchmarks showing NVIDIA’s dominance in AI, but AMD offers value.

Conclusion: The Future of GPUs in AI

In 2025, GPUs remain the backbone of AI, driving innovations from generative models to edge computing. As AI demands grow, expect advancements like more efficient chips and integrated AI accelerators. For anyone entering AI, investing in a GPU is a smart move—start small and scale up.