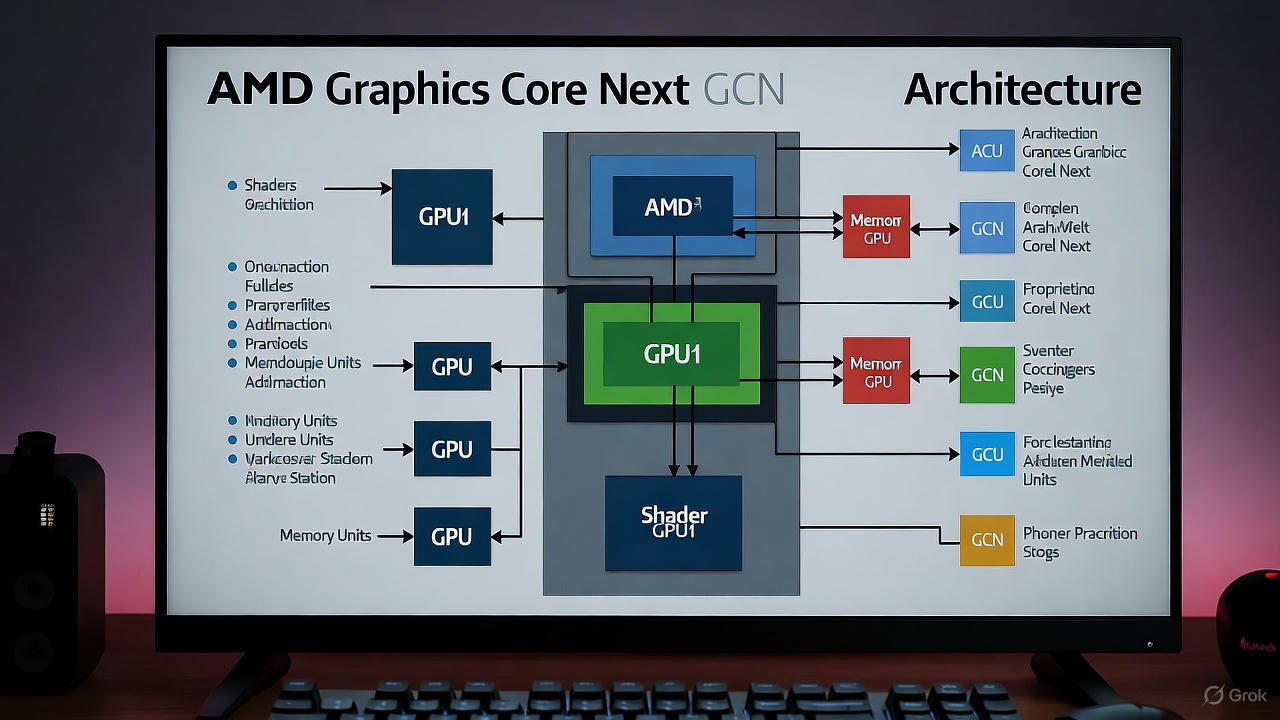

I’ve witnessed firsthand how GPU architectures evolve to meet the demands of gaming, computing, and AI. AMD’s Graphics Core Next (GCN) stands out as a pivotal milestone, launching in 2011 and powering generations of Radeon GPUs. Even in 2025, its legacy influences current designs like RDNA and the emerging UDNA. This comprehensive guide dives deep into AMD GCN, its evolution, technical intricacies, and why it remains relevant for gamers, developers, and hardware builders today. Whether you’re upgrading your rig or exploring GPU history, understanding GCN unlocks insights into AMD’s innovative path.

What is AMD GCN? A Historical Overview

AMD GCN, short for Graphics Core Next, marked a fundamental shift in GPU design when it debuted with the Radeon HD 7970 in December 2011. This architecture replaced the older TeraScale, introducing a reduced instruction set (RISC) SIMD microarchitecture optimized for both graphics and general-purpose computing. GCN’s origins trace back to AMD’s need to compete in the compute market against NVIDIA’s Fermi, emphasizing predictable performance for parallel tasks.

From GCN 1.0 to 5.0 (Vega), the architecture evolved across five generations, powering cards like the HD 7000 series up to the RX Vega lineup. Its impact on gaming was profound, enabling features like asynchronous compute, which became standard in consoles such as the PS4 and Xbox One. In compute workloads, GCN’s unified shaders and coherent caching supported advancements in AI and scientific simulations.

By 2025, AMD has transitioned to RDNA for gaming and CDNA for data centers, with plans for a unified UDNA architecture. Yet, GCN’s principles live on, influencing efficiency in modern GPUs.

Understanding Graphics Core Next: Core Features and Innovations

Graphics Core Next is more than a name; it is a blueprint for scalable GPU performance. At its heart are Compute Units (CUs), each containing 64 stream processors (shaders) that execute work in wavefronts of 64 threads (unlike NVIDIA’s 32-thread warps). Keeping many wavefronts in flight hides memory latency during complex operations, which suits compute shaders and other heavily parallel workloads.
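To make the wavefront idea concrete, here is a minimal sketch of the arithmetic, assuming the four SIMD-16 vector units per CU described later in this guide. The function names are illustrative only and not part of any AMD API.

```python
import math

WAVEFRONT_SIZE = 64   # threads per GCN wavefront
SIMD_LANES = 16       # lanes in one SIMD-16 vector unit
SIMDS_PER_CU = 4      # vector units per Compute Unit


def cycles_per_vector_instruction(wavefront_size=WAVEFRONT_SIZE, lanes=SIMD_LANES):
    """Cycles one SIMD needs to push a whole wavefront through one vector instruction."""
    return math.ceil(wavefront_size / lanes)


def lanes_per_cu(simds=SIMDS_PER_CU, lanes=SIMD_LANES):
    """Total vector lanes in a CU, i.e. the '64 shaders' figure."""
    return simds * lanes


print("cycles per vector instruction:", cycles_per_vector_instruction())
print("vector lanes per CU:", lanes_per_cu())
```

Under these assumptions a 64-thread wavefront occupies a 16-lane SIMD for four cycles per vector instruction, and the four SIMDs together account for the 64 shaders in each CU.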

Key features include unified virtual memory for zero-copy data sharing between CPU and GPU, a hallmark of Heterogeneous System Architecture (HSA). GCN also introduced the Video Coding Engine (VCE) for hardware-accelerated video encoding, supporting H.264 and later HEVC (H.265). In consoles, GCN’s asynchronous compute queues allowed for efficient multitasking, boosting game performance.

The transition to Vega (GCN 5.0) added the High Bandwidth Cache Controller (HBCC) and Rapid Packed Math, which doubles FP16 throughput for AI tasks. Today, GCN remains relevant in emulation and legacy gaming, where its Vulkan and DX12 support is mature.

The Evolution of AMD GPU Architecture: From TeraScale to RDNA

AMD’s GPU lineage is a story of adaptation. Pre-GCN, TeraScale dominated with very long instruction word (VLIW) designs, strong in graphics but weak in compute. GCN flipped the script, introducing SIMD efficiency and scalability across generations:

- GCN 1.0 (2011-2013): Tahiti, Pitcairn, and Cape Verde chips, focused on DX11.1 and compute.

- GCN 2.0-3.0 (2013-2015): Bonaire/Hawaii (2nd gen) and Tonga/Fiji (3rd gen), with better tessellation and power efficiency.

- GCN 4.0 (2016): Polaris, 14nm process for improved clocks.

- GCN 5.0 (2017): Vega, with HBM2 memory, HBCC, and packed FP16 math.

Post-GCN, RDNA (2019) was optimized for gaming with workgroup processors (WGPs), with AMD claiming up to 50% better perf/watt over GCN. By 2025, RDNA 4 features 3rd-gen ray tracing and AI accelerators, while the planned UDNA is meant to unify the gaming and compute lines.

In benchmarks, GCN cards like the RX 580 hold value in 1080p gaming, but RDNA excels in 4K and ray-traced titles.

| Feature | GCN (e.g., Vega 64) | RDNA (e.g., RX 5700 XT) | Improvement in RDNA |
|---|---|---|---|
| Frequency (GHz) | 1.546 | 1.905 | +23% |
| Pixel Fill Rate (GPixels/s) | 98.94 | 121.92 | +23% |
| FP32 Performance (TFLOP/s) | 12.66 | 9.75 | -23% (but better efficiency) |
| L0 Bandwidth (TB/s) | 6.33 | 9.76 | +54% |
| Total Cache Bandwidth/FLOP | 0.625 | 1.6 | +156% |
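The improvement column can be reproduced from the two value columns. The following is a small sketch that recomputes each percentage from the table’s own figures; the dictionary layout and function name are illustrative choices, not taken from any source.

```python
def percent_change(old, new):
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100.0


# (GCN value, RDNA value) pairs copied from the comparison table
rows = {
    "Frequency (GHz)": (1.546, 1.905),
    "Pixel Fill Rate (GPixels/s)": (98.94, 121.92),
    "FP32 Performance (TFLOP/s)": (12.66, 9.75),
    "L0 Bandwidth (TB/s)": (6.33, 9.76),
    "Total Cache Bandwidth/FLOP": (0.625, 1.6),
}

for feature, (gcn, rdna) in rows.items():
    print(f"{feature}: {percent_change(gcn, rdna):+.0f}%")
```

Note that the FP32 row is negative: the RX 5700 XT has lower peak throughput than Vega 64 on paper, so RDNA’s gaming advantage comes from how well that throughput is fed rather than from raw FLOPs.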

![A Comparison between the GCN Architecture [2] and the RDNA ...](https://www.researchgate.net/publication/363615984/figure/fig1/AS:11431281084819269@1663415125663/A-Comparison-between-the-GCN-Architecture-2-and-the-RDNA-Architecture-3.png)

Deep Dive into GCN Microarchitecture: Technical Breakdown

GCN’s microarchitecture is a masterclass in parallelism. Each Compute Unit includes four SIMD-16 vector units, a scalar unit, and a 64 KB vector register file per SIMD (256 KB per CU). Four texture filtering units and 16 texture fetch load/store units per CU enable high-throughput data handling.
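The register file size directly limits how many wavefronts a SIMD can keep resident, which in turn limits latency hiding. The sketch below estimates that occupancy under stated assumptions: the 64 KB vector register file is per SIMD, registers are 32 bits wide, and at most 10 wavefronts can be resident per SIMD. It ignores register allocation granularity and other limits such as scalar registers and local data share, so treat the result as an upper bound. The function name is hypothetical.

```python
VGPR_FILE_BYTES = 64 * 1024   # assumed: 64 KB vector register file per SIMD
WAVEFRONT_SIZE = 64           # threads per wavefront
BYTES_PER_REGISTER = 4        # one 32-bit register per thread
MAX_WAVES_PER_SIMD = 10       # assumed hardware cap on resident wavefronts


def max_waves_per_simd(vgprs_per_thread):
    """Upper bound on resident wavefronts per SIMD for a given register usage."""
    if vgprs_per_thread < 1:
        raise ValueError("a kernel uses at least one vector register")
    bytes_per_wave = vgprs_per_thread * WAVEFRONT_SIZE * BYTES_PER_REGISTER
    return min(MAX_WAVES_PER_SIMD, VGPR_FILE_BYTES // bytes_per_wave)


for vgprs in (24, 32, 64, 128, 256):
    print(f"{vgprs} VGPRs per thread: up to {max_waves_per_simd(vgprs)} wavefronts per SIMD")
```

In this model a kernel using 64 vector registers per thread fits only four wavefronts per SIMD, which is why register pressure matters so much when tuning shaders for GCN.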

For overclockers, GCN’s PowerTune allows dynamic clock adjustments, though efficiency issues in early gens led to high power draw. Weaknesses such as low FP64 rates on consumer parts were addressed on compute-oriented chips, boosting throughput for scientific apps.

In 2025, open-source support extends the life of GCN cards on Linux: RADV, Mesa’s Vulkan driver, can expose ray tracing on older cards through software emulation, and ROCm has supported some Vega parts for compute.

AMD Radeon GCN Series: Popular Cards, Performance, and 2025 Value

The AMD Radeon GCN lineup spans budget to high-end. Flagships like the RX Vega 64 (4096 shaders, 8GB HBM2) targeted 4K gaming with 483.8 GB/s bandwidth. Mid-range hits like the RX 580 remain popular for 1080p/1440p in the second-hand market.
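The headline Vega 64 figures follow from simple arithmetic. Here is a rough sketch: the 1.546 GHz boost clock is taken from the comparison table in this guide, while the 2048-bit HBM2 bus width and 1.89 Gbps per-pin data rate are assumptions added for illustration, as is counting a fused multiply-add as two FLOPs per shader per clock.

```python
def peak_fp32_tflops(shaders, clock_ghz, flops_per_clock=2):
    """Peak FP32 rate, counting one fused multiply-add as 2 FLOPs per clock."""
    return shaders * flops_per_clock * clock_ghz / 1000.0


def memory_bandwidth_gbs(bus_width_bits, data_rate_gbps):
    """Peak memory bandwidth in GB/s from bus width and per-pin data rate."""
    return bus_width_bits * data_rate_gbps / 8.0


# 4096 shaders (from the text) at the 1.546 GHz boost clock (from the table)
vega64_tflops = peak_fp32_tflops(shaders=4096, clock_ghz=1.546)

# Assumed HBM2 configuration: 2048-bit bus at 1.89 Gbps per pin
vega64_bandwidth = memory_bandwidth_gbs(bus_width_bits=2048, data_rate_gbps=1.89)

print(f"Peak FP32: {vega64_tflops:.2f} TFLOP/s")
print(f"Memory bandwidth: {vega64_bandwidth:.1f} GB/s")
```

Under these assumptions the results line up with the 12.66 TFLOP/s and 483.8 GB/s figures quoted for the card. These are theoretical peaks, and sustained performance in games is lower.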

In modern tests, a Vega 64 handles esports at high frames but struggles with ray tracing compared to RDNA 3/4. For retro gamers, GCN cards excel in emulation due to strong Vulkan support.

Upgrading? Pair a GCN card with Ryzen for budget builds, but for 2025 AI workloads, consider RDNA’s enhancements.

GCN’s Legacy in 2025: Impact on Gaming and Compute

By 2021, AMD moved early GCN gens to legacy support, focusing on Polaris onward. Yet, its design principles endure in CDNA for enterprise compute. In gaming, GCN’s async compute paved the way for DX12 features, influencing current consoles.

For compute, GCN’s wide 64-thread wavefronts favor long-running, uniform kernels, which suited early AI workloads before dedicated accelerators arrived. In 2025, with RDNA 4’s reported 40% uplift, GCN serves budget users while the planned UDNA promises unified efficiency.

Conclusion: Why AMD GCN Still Matters

AMD GCN transformed GPUs from graphics specialists to versatile compute engines. Its evolution laid the groundwork for today’s RDNA and beyond, proving AMD’s foresight. If you’re building a PC or modding old hardware, GCN offers timeless value—affordable, capable, and enduring.

Frequently Asked Questions (FAQs)

What is the difference between AMD GCN and RDNA?

GCN focuses on compute scalability, while RDNA prioritizes gaming efficiency with higher clocks and better perf/watt.

Is AMD GCN still supported in 2025?

Early gens are legacy, but Polaris and Vega receive limited updates. Open-source drivers extend usability.

Which games run best on GCN GPUs?

DX11 and Vulkan titles, including older AAA games, run best. Async compute helps most in DX12 and Vulkan engines that are built to use it.

Can GCN handle ray tracing?

Only through software emulation. GCN has no dedicated ray tracing hardware, unlike RDNA 2 and later, so performance is limited.

What’s next after GCN/RDNA?

AMD’s planned UDNA architecture is intended to unify its gaming and data center lines, with availability reportedly targeted for late 2025.