I’ve put together this guide to help you navigate the NVIDIA V100 GPU market. Whether you’re searching for “NVIDIA V100 current price,” “NVIDIA V100 cost,” or “V100 GPU price,” this article draws on recent market data, benchmarks, and expert insights. Last updated: November 13, 2025. We’ll cover specs, pricing trends, total cost of ownership (TCO), and alternatives to help you make an informed decision for AI, ML, or HPC workloads.

The NVIDIA Tesla V100 launched in 2017 on the Volta architecture. Despite newer models like the A100 and H100, it remains a capable choice for legacy applications. It has 5,120 CUDA cores, 640 Tensor Cores for accelerated deep learning, and 16GB or 32GB of HBM2 memory, which still suits many data science and enterprise workloads. In 2025, however, it is sold mostly on the secondary market, so price and cost analysis matter.


NVIDIA V100 Price Guide: Current Market Prices and Buying Tips

If you’re a tech enthusiast, data scientist, or IT buyer eyeing the “NVIDIA V100 price,” expect variability based on condition, memory size (16GB or 32GB), and form factor (PCIe or SXM2). As of November 2025, new units are rare since NVIDIA has phased out production, but refurbished and used options abound.

Current Market Prices (New vs. Used)

- New/Enterprise Listings: Rare, but enterprise resellers like Cisco quote around $36,000 for PCIe 16GB models in bulk deals. However, these are often for legacy systems and not practical for individual buyers.

- Used/Refurbished Prices: On platforms like eBay and Newegg, 16GB models range from $365 to $700, while 32GB variants go for $950 to $2,378. Average eBay listings show 16GB units at ~$400 and 32GB at ~$1,200, down from 2024 highs due to oversupply from data centers upgrading to Hopper GPUs.

- Cloud Rental Prices: For on-demand access, AWS EC2 P3 instances (with V100) start at ~$3.06/hour for p3.2xlarge (1 GPU) and scale to $24.48/hour for p3.16xlarge (8 GPUs). Spot instances can drop to ~$0.90/hour, which makes them cost-effective for short-term use. Other providers offer similar options; Google Cloud, for example, charges about $2.48/hour per attached V100 GPU, with the VM billed separately.
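To see how these hourly rates turn into a project budget, here is a minimal Python sketch. It assumes the on-demand prices quoted above, which vary by region and change over time. It also assumes a hypothetical 100-hour training job and a spot rate of ~$0.90/hour, though real spot prices fluctuate.

```python
# Minimal sketch: estimate V100 cloud rental cost for a job.
# Rates are the on-demand figures quoted in this article (assumed; they vary
# by region and over time). The spot rate is an assumed ~$0.90/hour.
P3_ON_DEMAND_USD_PER_HOUR = {
    "p3.2xlarge": (3.06, 1),    # (hourly rate, number of V100 GPUs)
    "p3.16xlarge": (24.48, 8),
}
SPOT_USD_PER_HOUR = 0.90  # assumed p3.2xlarge spot price


def rental_cost(hours: float, usd_per_hour: float) -> float:
    """Total rental cost for `hours` of use at a flat hourly rate."""
    if hours < 0 or usd_per_hour < 0:
        raise ValueError("hours and rate must be non-negative")
    return hours * usd_per_hour


def per_gpu_rate(usd_per_hour: float, gpus: int) -> float:
    """Hourly cost per V100 for a (possibly multi-GPU) instance."""
    return usd_per_hour / gpus


if __name__ == "__main__":
    job_hours = 100  # hypothetical job length
    rate, _ = P3_ON_DEMAND_USD_PER_HOUR["p3.2xlarge"]
    print(f"On-demand: ${rental_cost(job_hours, rate):,.2f}")
    print(f"Spot:      ${rental_cost(job_hours, SPOT_USD_PER_HOUR):,.2f}")
    for name, (rate, gpus) in P3_ON_DEMAND_USD_PER_HOUR.items():
        print(f"{name}: ${per_gpu_rate(rate, gpus):.2f} per GPU-hour")
```

At these list prices, the 8-GPU instance costs the same per GPU-hour as the single-GPU one ($3.06). For short jobs, spot pricing saves far more than picking a different instance size.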

Factors influencing the NVIDIA V100 current price include global chip shortages (easing in 2025), demand for AI training, and competition from consumer GPUs like RTX 40-series repurposed for ML. Supply is steady from decommissioned servers, keeping prices affordable.

Where to Buy NVIDIA V100

- Recommended Vendors: eBay for budget used units (check seller ratings and warranties); Amazon for refurbished with Prime shipping; Newegg or specialized HPC resellers like Microway for certified refurbs.

- Affiliate Tip: Consider buying through affiliate links on tech sites for deals – for example, Amazon NVIDIA V100 listings often include free delivery.

- Budget Tips: Opt for 16GB if your models and batch sizes fit in that memory. Test for defects with NVIDIA’s diagnostic tools, such as nvidia-smi and DCGM (dcgmi diag). Always factor in shipping (~$30-50) and potential customs fees for international purchases.
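The 16GB vs. 32GB choice gets easier once you compare landed cost per gigabyte of memory. The sketch below uses the average eBay prices mentioned earlier (~$400 and ~$1,200) and a $40 shipping charge, the midpoint of the range above. The customs rate is a hypothetical parameter because duty rules vary by country.

```python
def landed_cost(price: float, shipping: float, customs_rate: float = 0.0) -> float:
    """Item price plus a hypothetical customs duty (fraction of price) plus shipping."""
    if customs_rate < 0:
        raise ValueError("customs_rate must be non-negative")
    return price * (1 + customs_rate) + shipping


def cost_per_gb(price: float, shipping: float, memory_gb: int,
                customs_rate: float = 0.0) -> float:
    """Landed cost divided by on-board memory size."""
    return landed_cost(price, shipping, customs_rate) / memory_gb


if __name__ == "__main__":
    shipping = 40.0  # midpoint of the ~$30-50 shipping range
    for label, price, gb in [("V100 16GB", 400.0, 16), ("V100 32GB", 1200.0, 32)]:
        print(f"{label}: ${cost_per_gb(price, shipping, gb):.2f} per GB")
    # Prints $27.50 per GB for the 16GB card and $38.75 per GB for the 32GB card.
```

At these prices the 16GB card is cheaper per gigabyte, so the 32GB premium pays off only if your models actually need the extra memory.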

In 2025 and beyond, expect prices to stabilize or dip further as Blackwell GPUs enter the market, but the V100’s value holds for cost-conscious users.

Breaking Down the True Cost of NVIDIA V100: Hardware, Maintenance, and ROI

While “NVIDIA V100 price” covers the upfront tag, “NVIDIA V100 cost” emphasizes total cost of ownership (TCO) – including hardware, operations, and long-term value. This section targets enterprise users, AI researchers, and budget planners focusing on “NVIDIA V100 operating cost.”

Overview of Base Cost

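The base hardware cost matches the prices above: $400-2,500 for used units. TCO grows once you add supporting hardware; a compatible server chassis alone adds $500-1,000. Multi-GPU NVLink requires the SXM2 version of the V100 in an SXM2 server. The PCIe card has no NVLink connector, so PCIe setups communicate over the PCIe bus.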

Hidden Costs: Power, Cooling, and Setup

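- Power Consumption: The PCIe V100 has a 250W TDP (the SXM2 version is rated at 300W). Running flat out 24/7, it uses about 2,190 kWh a year. At $0.15/kWh that comes to roughly $200-330/year in electricity depending on load, or ~$330 at full TDP. For an 8-GPU server, that's about $1,600-2,630 annually for the GPUs alone.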

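- Cooling and Maintenance: Data center cooling adds 20-30% to power costs, or about $65-100/year per V100 at full load and the rates above. Once maintenance is included, budget roughly $500/year per unit for enterprise setups. Setup requires a compatible server or motherboard with PCIe 3.0 x16 slots (e.g., Supermicro) and strong chassis airflow, because the PCIe V100 is passively cooled. This can cost $1,000 or more.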

- Other Expenses: The CUDA toolkit is free, but enterprise support through NVIDIA AI Enterprise costs about $4,500/year per GPU. Hardware failures, at an estimated 1-2% annual rate, could add $100-500 in repairs.
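You can check the power and cooling estimates with simple arithmetic. This sketch makes three assumptions:

- Average draw equals TDP times a utilization factor. This is a simplification, since real draw varies with workload.
- The card runs 8,760 hours per year.
- Only GPU power counts; the host server is excluded.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours of 24/7 operation


def annual_energy_cost(watts: float, usd_per_kwh: float,
                       utilization: float = 1.0) -> float:
    """Yearly electricity cost, assuming average draw = watts * utilization."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    kwh_per_year = watts / 1000 * HOURS_PER_YEAR * utilization
    return kwh_per_year * usd_per_kwh


def with_cooling(power_cost: float, overhead: float) -> float:
    """Add data center cooling as a fractional overhead on power cost."""
    return power_cost * (1 + overhead)


if __name__ == "__main__":
    one_gpu = annual_energy_cost(250, 0.15)  # V100 PCIe at full TDP, 24/7
    print(f"1x V100 power: ${one_gpu:,.2f}/year")
    print(f"8x V100 power: ${8 * one_gpu:,.2f}/year")
    for overhead in (0.20, 0.30):
        total = with_cooling(one_gpu, overhead)
        print(f"1x V100 + {overhead:.0%} cooling: ${total:,.2f}/year")
```

Lowering `utilization` models a card that isn't fully loaded around the clock, which explains the lower end of the range.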

A 2019 analysis found that an on-prem server with eight V100s cost ~$69,441 less than equivalent AWS p3 usage over three years. In 2025, however, cloud options remain more cost-effective for sporadic use.
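Whether owning beats renting depends mostly on utilization. Below is a minimal break-even sketch under these assumed inputs:

- A hypothetical $2,000 upfront cost for one V100 plus its share of a server.
- On-prem operating cost from a 250W draw at $0.15/kWh with 25% cooling overhead.
- The p3.2xlarge on-demand rate of $3.06/hour and a ~$0.90/hour spot rate.

It ignores maintenance, depreciation, and staff time.

```python
import math


def onprem_hourly_cost(watts: float, usd_per_kwh: float, cooling_overhead: float) -> float:
    """Electricity plus cooling per hour of on-prem GPU use."""
    return watts / 1000 * usd_per_kwh * (1 + cooling_overhead)


def break_even_hours(upfront_usd: float, onprem_hourly: float, cloud_hourly: float) -> float:
    """GPU-hours of use after which buying becomes cheaper than renting."""
    margin = cloud_hourly - onprem_hourly
    if margin <= 0:
        return math.inf  # renting is never more expensive per hour
    return upfront_usd / margin


if __name__ == "__main__":
    upfront = 2000.0  # hypothetical: used V100 plus a share of the server
    onprem = onprem_hourly_cost(250, 0.15, 0.25)
    for label, cloud_rate in [("on-demand", 3.06), ("spot", 0.90)]:
        hours = break_even_hours(upfront, onprem, cloud_rate)
        print(f"vs {label}: {hours:,.0f} GPU-hours (~{hours / 24:,.0f} days of 24/7 use)")
```

Under these assumptions, the card pays for itself after roughly 660 GPU-hours against on-demand pricing, but only after about 2,300 against spot pricing. That gap is why sporadic users tend to favor the cloud.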

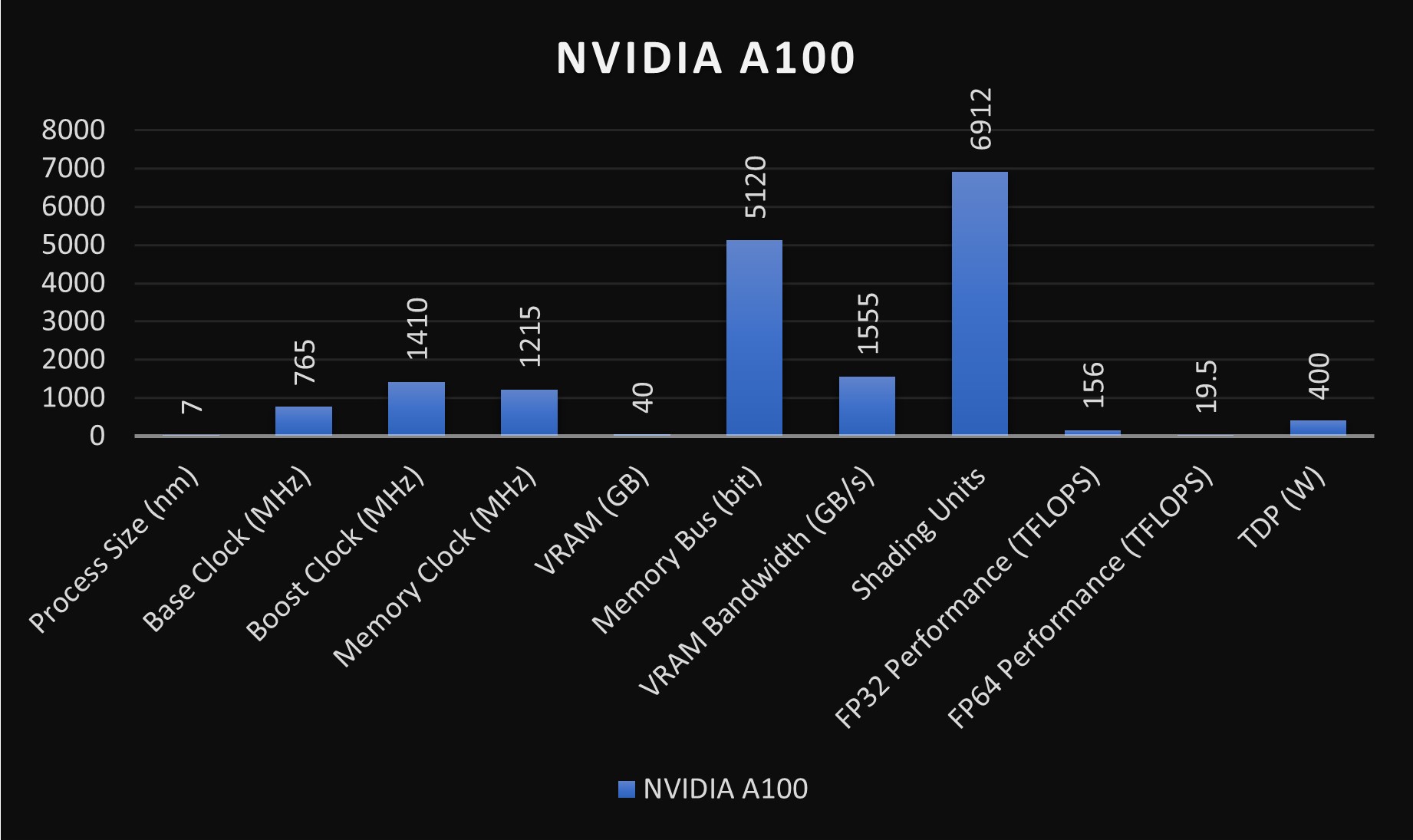


Comparison with Newer GPUs (A100/H100)

- V100 vs. A100: The A100 trains roughly 1.6-3x faster depending on the workload (e.g., a 60-70% speedup in PyTorch mixed precision). Its TCO is lower long-term due to efficiency, but the upfront cost is ~$10,000-15,000.

- V100 vs. H100: H100 is 4-6x faster for transformers, with 700W TDP but better energy efficiency. For ROI, V100 suits legacy code; H100 excels in modern LLMs.

| GPU Model | Base Price (2025 Used) | TDP (W) | FP32 Performance (TFLOPS) | 3-Year Running Cost, Single Unit (Excl. Purchase) |
|---|---|---|---|---|
| V100 | $400-2,500 | 250 | 14 | $2,000-4,000 (incl. power/maintenance) |
| A100 | $5,000-10,000 | 400 | 19.5 | $3,500-6,000 |
| H100 | $20,000+ | 700 | 60 | $5,000-8,000 (higher efficiency offsets) |
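The table's figures can be turned into rough efficiency ratios. This sketch divides peak FP32 throughput by TDP and by the low end of each used-price range. Peak TFLOPS is a theoretical ceiling, so treat the ratios as relative indicators rather than measured performance.

```python
from typing import NamedTuple


class Gpu(NamedTuple):
    name: str
    used_price_usd: float  # low end of the 2025 used-price range in the table
    tdp_watts: float
    fp32_tflops: float     # peak, not sustained


GPUS = [
    Gpu("V100", 400, 250, 14.0),
    Gpu("A100", 5000, 400, 19.5),
    Gpu("H100", 20000, 700, 60.0),
]


def gflops_per_watt(gpu: Gpu) -> float:
    """Peak FP32 GFLOPS per watt of TDP."""
    return gpu.fp32_tflops * 1000 / gpu.tdp_watts


def gflops_per_dollar(gpu: Gpu) -> float:
    """Peak FP32 GFLOPS per dollar of used purchase price."""
    return gpu.fp32_tflops * 1000 / gpu.used_price_usd


if __name__ == "__main__":
    for gpu in GPUS:
        print(f"{gpu.name}: {gflops_per_watt(gpu):6.1f} GFLOPS/W, "
              f"{gflops_per_dollar(gpu):5.2f} GFLOPS per $")
```

By these numbers, the V100 delivers the most FP32 throughput per dollar: 35 GFLOPS/$, versus 3.9 for the A100 and 3.0 for the H100. The H100 leads on throughput per watt, at about 86 GFLOPS/W versus 56 for the V100.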

Case Studies on ROI

- AI Research Lab: A university deployed V100s for ML training, achieving ROI in 18 months via faster iterations vs. CPUs.

- Enterprise Savings: Moving burst workloads to cloud V100 instances cut TCO by 40% compared with running them on-prem.

Alternatives for Cost Savings

Consider the RTX 3090 (~$1,500). It has 24GB of memory and higher peak FP32 than the V100, but it is consumer-grade with much weaker FP64. Cloud services are another option, with zero upfront cost. For TCO optimization, hybrid setups (on-prem + cloud) minimize “NVIDIA V100 operating cost.”

V100 GPU Price Trends: Affordable Options for AI Workloads in 2025

For hobbyists, small developers, and resellers querying “V100 GPU price,” trends show a downward trajectory, making it accessible for entry-level AI.

Historical Price Evolution

The V100 sold for ~$10,000 in 2018, shortly after its 2017 launch. Prices dropped to $7,000 by 2020 and ~$1,000 by 2023. In 2025, expect a further 20-30% decline as A100/H100 surplus floods the market.
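The historical figures imply a steep compound annual decline. The sketch below computes it, treating the 2018 (~$10,000) and 2023 (~$1,000) prices as endpoints. It then applies the projected 20-30% drop to the ~$1,000 2023 figure as an illustrative baseline. This baseline is an assumption; actual 2025 listings vary by memory size and condition.

```python
def annual_change_rate(start_price: float, end_price: float, years: float) -> float:
    """Compound annual rate of change between two prices (negative = decline)."""
    if start_price <= 0 or end_price <= 0 or years <= 0:
        raise ValueError("prices and years must be positive")
    return (end_price / start_price) ** (1 / years) - 1


def apply_decline(price: float, decline: float) -> float:
    """Price after a fractional decline, e.g. 0.25 for 25%."""
    return price * (1 - decline)


if __name__ == "__main__":
    rate = annual_change_rate(10_000, 1_000, 2023 - 2018)
    print(f"2018-2023 compound annual change: {rate:.1%}")
    for decline in (0.20, 0.30):
        print(f"{decline:.0%} decline from $1,000: ${apply_decline(1_000, decline):,.0f}")
```

A 90% drop over five years works out to roughly a 37% decline per year on average.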


Current Pricing Across Platforms

- eBay/AWS: Used 32GB units from ~$950; AWS rentals ~$3/hour.

- Other: AliExpress for international (~$300-800, but risky); resellers like UnixSurplus at $950.

Benchmarks vs. Competitors

The V100 delivers ~14 TFLOPS FP32, lagging the A100 (19.5) and H100 (60). Its Tensor Cores (112-125 TFLOPS FP16, depending on form factor) still outpace many consumer cards for deep learning.


Pros of V100:

- Affordable entry to pro-grade AI.

- Strong for Volta-optimized code.

Cons:

- Lower performance per watt than newer GPUs on modern AI workloads.

- Limited future-proofing.

Buying Guide for Second-Hand Units

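The V100 has no display outputs, so visual artifact checks don't apply. Instead, confirm the reported memory size, ECC error counts, and temperatures with nvidia-smi, and run a sustained load test. Tools like GPU-Z can help confirm the card's details on Windows. Future predictions: Prices may hit $200-500 by 2026.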
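On a Linux test bench, a scripted health check is a quick first pass. This sketch calls nvidia-smi with its --query-gpu and --format=csv,noheader,nounits options and flags obvious problems. The thresholds (5% memory slack, 60°C idle temperature) are illustrative assumptions, not NVIDIA guidance. A passing check is no substitute for a sustained stress test.

```python
import subprocess

# Fields accepted by `nvidia-smi --query-gpu` (see `nvidia-smi --help-query-gpu`).
QUERY = "name,memory.total,ecc.errors.uncorrected.volatile.total,temperature.gpu"


def parse_line(line: str) -> dict:
    """Parse one CSV line produced with --format=csv,noheader,nounits."""
    name, mem_mib, ecc, temp = (field.strip() for field in line.split(","))
    return {
        "name": name,
        "memory_mib": int(mem_mib),
        # nvidia-smi prints a bracketed value such as "[N/A]" when ECC is unavailable.
        "uncorrected_ecc": int(ecc) if ecc.isdigit() else None,
        "temp_c": int(temp),
    }


def red_flags(gpu: dict, expected_gb: int, max_idle_temp_c: int = 60) -> list:
    """Return human-readable warnings; thresholds are illustrative assumptions."""
    flags = []
    if gpu["memory_mib"] < expected_gb * 1024 * 0.95:  # allow ~5% reserved memory
        flags.append("memory smaller than advertised")
    if gpu["uncorrected_ecc"] is None:
        flags.append("ECC status unavailable")
    elif gpu["uncorrected_ecc"] > 0:
        flags.append("uncorrected ECC errors")
    if gpu["temp_c"] > max_idle_temp_c:
        flags.append("high idle temperature")
    return flags


def query_gpus() -> list:
    """Run nvidia-smi (requires an NVIDIA driver) and parse every GPU line."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return [parse_line(line) for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    # Set expected_gb to the capacity the seller advertised (16 or 32).
    for gpu in query_gpus():
        print(gpu["name"], red_flags(gpu, expected_gb=16) or "no obvious problems")
```

Keeping parsing separate from the subprocess call lets you test the checks against saved nvidia-smi output without a GPU present.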

Conclusion: Is the NVIDIA V100 Worth It in 2025?

For “NVIDIA V100 price” seekers, it’s a bargain for specific needs, but weigh TCO against alternatives. Consult experts for tailored advice.