General-purpose humanoid robots are built to adapt quickly to existing human-centric urban and industrial work spaces, tackling tedious, repetitive, or physically demanding tasks. Because they are designed to operate in spaces built for people, these mobile robots are increasingly valuable in settings ranging from the factory floor to healthcare facilities.

Imitation learning, a subset of robot learning, enables humanoids to acquire new skills by observing and mimicking expert human demonstrations. Collecting the extensive, high-quality demonstration datasets this requires in the real world is tedious, time-consuming, and prohibitively expensive. Synthetic data generated in physically accurate simulated environments can accelerate the collection process.

NVIDIA Isaac GR00T helps tackle these challenges by providing humanoid robot developers with robot foundation models, data pipelines, and simulation frameworks. The NVIDIA Isaac GR00T Blueprint for Synthetic Motion Generation is a simulation workflow for imitation learning that enables you to generate large synthetic datasets from a small number of human demonstrations.

In this post, we describe how to capture teleoperation data with Apple Vision Pro, generate large synthetic trajectory datasets from just a few human demonstrations using NVIDIA Isaac GR00T, and then train a robot motion policy in Isaac Lab.

Synthetic motion generation

Key components of the workflow include the following:

- GR00T-Teleop:

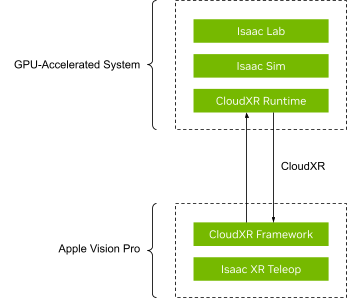

- NVIDIA CloudXR: Connect to an Apple Vision Pro headset to stream actions using a custom CloudXR runtime specifically designed for humanoid teleoperation.

- Isaac XR Teleop: Stream teleoperation data to and from NVIDIA Isaac Sim or Isaac Lab with this reference application for Apple Vision Pro.

- Isaac Lab: Train robot policies with an open-source unified framework for robot learning. Isaac Lab is built on top of NVIDIA Isaac Sim.

- GR00T-Mimic: Generate vast amounts of synthetic motion trajectory data from a handful of human demonstrations.

- GR00T-Gen: Add additional diversity by randomizing background, lighting, and other variables in the scene and upscale the generated images through NVIDIA Cosmos. (We are not covering GROOT-Gen in detail in this post.)

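The synthetic motion generation pipeline creates a large, diverse dataset for training robots in three stages: collecting human demonstrations through teleoperation, generating synthetic trajectories with GR00T-Mimic, and training a policy with imitation learning in Isaac Lab.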

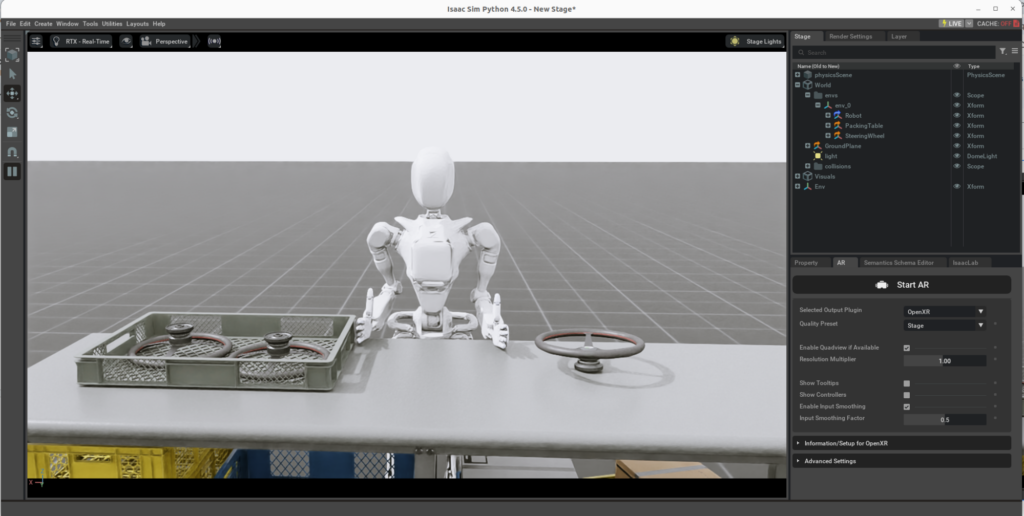

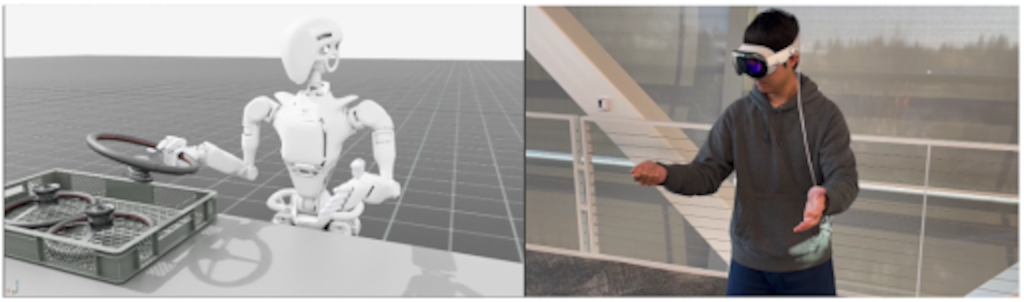

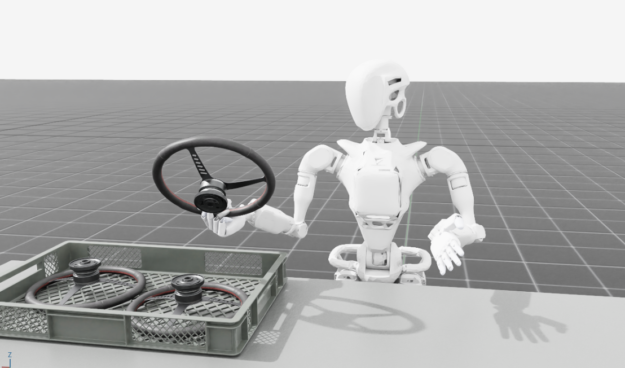

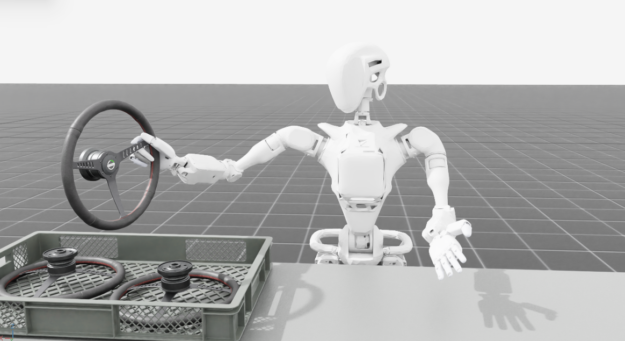

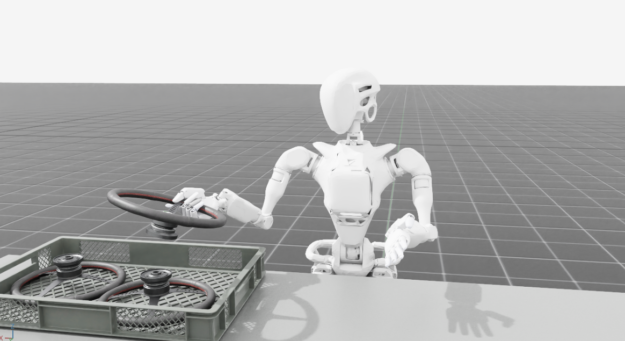

It begins with data collection, in which a high-fidelity device such as the Apple Vision Pro captures human movements and actions in a simulated environment. The Apple Vision Pro streams hand-tracking data to a simulation platform such as Isaac Lab, which simultaneously streams an immersive view of the robot's environment back to the device. This setup enables intuitive, interactive control of the robot and facilitates the collection of high-quality teleoperation data.

Because the Isaac Lab simulation is streamed to the headset, you see the robot's environment directly and can control the robot to perform various tasks simply by moving your hands, which makes teleoperation immersive and interactive.
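To make the hand-to-robot mapping more concrete, here is a minimal sketch of how two consecutive hand-tracking samples could be turned into a robot command. It is not the GR00T-Teleop, CloudXR, or Isaac Lab implementation: the `HandSample` format, the `hand_to_command` function, the position-only delta (orientation is ignored), the `scale` factor, and the pinch threshold that closes the gripper are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class HandSample:
    """One hand-tracking sample (hypothetical format), positions in meters."""
    wrist: tuple[float, float, float]
    thumb_tip: tuple[float, float, float]
    index_tip: tuple[float, float, float]


def hand_to_command(prev: HandSample, curr: HandSample,
                    scale: float = 1.0, pinch_threshold: float = 0.03):
    """Map two consecutive hand samples to (delta_position, gripper_closed).

    Assumptions: hand and robot frames are aligned, orientation is ignored,
    and a pinch (thumb and index tips closer than pinch_threshold meters)
    means "close the gripper".
    """
    # Relative wrist motion becomes a scaled end-effector position delta.
    delta = tuple(scale * (c - p) for c, p in zip(curr.wrist, prev.wrist))
    # Distance between thumb and index fingertips decides the gripper state.
    pinch = math.dist(curr.thumb_tip, curr.index_tip)
    return delta, pinch < pinch_threshold
```

Driving the robot with relative deltas rather than absolute hand positions means the operator's hand does not need to start at the end-effector's location; the mapping actually used by the workflow may differ.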

Synthetic trajectory generation using GR00T-Mimic

After the data is collected, the next step is synthetic trajectory generation. Isaac GR00T-Mimic is used to extrapolate from a small set of human demonstrations to create a vast number of synthetic motion trajectories.

This process involves annotating key points in the demonstrations and using interpolation to ensure that the synthetic trajectories are smooth and contextually appropriate. The generated trajectories are then evaluated, and only those that meet the criteria required for training are kept.
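The annotate-transform-interpolate idea can be illustrated with a simplified sketch in the spirit of the MimicGen approach; this is not GR00T-Mimic's code. It assumes each annotated subtask segment is a list of end-effector positions recorded while manipulating an object. To reuse the segment in a scene where the object has moved, every waypoint keeps its offset from the object (translation only, no rotation), and the robot is linearly interpolated from its current position to the first transformed waypoint. The functions `retarget_segment`, `interpolate`, and `generate_subtask` are hypothetical.

```python
Vec3 = tuple[float, float, float]


def retarget_segment(segment: list[Vec3], src_obj: Vec3, new_obj: Vec3) -> list[Vec3]:
    """Shift a source subtask segment so each waypoint keeps its offset
    from the object (translation-only simplification)."""
    return [tuple(p[i] - src_obj[i] + new_obj[i] for i in range(3)) for p in segment]


def interpolate(start: Vec3, goal: Vec3, num_steps: int) -> list[Vec3]:
    """Linear waypoints from start (exclusive) to goal (inclusive)."""
    return [
        tuple(s + (g - s) * k / num_steps for s, g in zip(start, goal))
        for k in range(1, num_steps + 1)
    ]


def generate_subtask(current_eef: Vec3, segment: list[Vec3], src_obj: Vec3,
                     new_obj: Vec3, num_interp: int = 5) -> list[Vec3]:
    """Bridge from the robot's current position into the retargeted segment."""
    moved = retarget_segment(segment, src_obj, new_obj)
    # The interpolation ends exactly at moved[0], so append the rest of the segment.
    return interpolate(current_eef, moved[0], num_interp) + moved[1:]
```

For example, a demonstrated approach toward an object at `(0.1, 0.0, 0.0)` can be replayed for the same object placed at `(0.3, 0.2, 0.0)` by calling `generate_subtask` with both object positions.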

In this example, we successfully generated 1K synthetic trajectories.
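Reaching a target count such as 1K usually means generating more candidates than you keep. A minimal generate-and-filter loop might look like the following; `rollout_fn` is a hypothetical stand-in for generating one candidate trajectory and executing it in simulation, and its `(trajectory, success)` return value and the `max_attempts` cap are assumptions.

```python
import random
from typing import Any, Callable


def collect_successful(
    rollout_fn: Callable[[], tuple[Any, bool]],
    target: int,
    max_attempts: int,
) -> tuple[list[Any], int]:
    """Call rollout_fn until `target` successful trajectories are kept or
    max_attempts is reached. Returns (kept_trajectories, attempts_used)."""
    kept: list[Any] = []
    attempts = 0
    while len(kept) < target and attempts < max_attempts:
        attempts += 1
        trajectory, success = rollout_fn()
        if success:  # failed generations are discarded, not used for training
            kept.append(trajectory)
    return kept, attempts


if __name__ == "__main__":
    rng = random.Random(0)
    # Fake generator that succeeds about 70% of the time (an arbitrary rate).
    fake_rollout = lambda: ([], rng.random() < 0.7)
    kept, attempts = collect_successful(fake_rollout, target=1000, max_attempts=10_000)
    print(f"kept {len(kept)} of {attempts} generated trajectories")
```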

Training in Isaac Lab using imitation learning

Finally, the synthetic dataset is used to train the robot using imitation learning techniques. In this stage, a policy, such as a recurrent Gaussian mixture model (GMM) from the Robomimic suite, is trained to mimic the actions demonstrated in the synthetic data.

The training is conducted in a simulation environment such as Isaac Lab, and the trained policy's performance is evaluated over multiple trials. Because most of the training data is generated in simulation rather than collected by hand, this pipeline significantly reduces the time and resources needed to develop and deploy robotic systems.
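Behavioral cloning with a GMM policy trains the network to output the parameters of a Gaussian mixture over actions and minimizes the negative log-likelihood (NLL) of the demonstrated action. The sketch below computes that loss for diagonal Gaussians in plain Python so the math is explicit; in practice the Robomimic network would produce the weights, means, and standard deviations, and the function name `gmm_nll` is hypothetical.

```python
import math


def gmm_nll(action, weights, means, stds):
    """Negative log-likelihood of `action` under a mixture of diagonal Gaussians.

    weights: K positive mixture weights summing to 1.
    means, stds: K lists, each with one value per action dimension.
    """
    log_terms = []
    for w, mu, sigma in zip(weights, means, stds):
        # log(w_k) + sum over dimensions of log N(a_d; mu_kd, sigma_kd)
        log_prob = math.log(w)
        for a, m, s in zip(action, mu, sigma):
            log_prob += -0.5 * ((a - m) / s) ** 2 - math.log(s) - 0.5 * math.log(2 * math.pi)
        log_terms.append(log_prob)
    # Log-sum-exp over components for numerical stability.
    peak = max(log_terms)
    return -(peak + math.log(sum(math.exp(t - peak) for t in log_terms)))
```

A mixture can assign high likelihood to two different ways of performing the same motion (for example, modes at `-1.0` and `1.0`), whereas a single Gaussian fit to such data would place its mean between them, on an action no demonstrator took.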

To show how this data can be used, we trained a Franka robot with a gripper to perform a stacking task in Isaac Lab. The gripper is similar to those found on humanoid robots.

We used behavioral cloning with a recurrent GMM policy from the Robomimic suite. The policy uses two long short-term memory (LSTM) layers with a hidden dimension of 400.

The input to the network consists of the robot's end-effector pose, gripper state, and relative object poses, while the output is a delta pose action used to step the robot in the Isaac Lab environment.
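The input/output interface can be sketched as follows. The exact layout is an assumption, not the Isaac Lab task definition: positions are 3-vectors, the orientation is a 4-element quaternion, the gripper state is one scalar, object positions are expressed relative to the end effector, and the action is a position delta plus a gripper command (the rotational part of the delta pose is omitted). Both function names are hypothetical.

```python
def build_observation(eef_pos, eef_quat, gripper_state, object_positions):
    """Flatten the policy input: end-effector pose, gripper state, and each
    object's position relative to the end effector (hypothetical layout)."""
    obs = list(eef_pos) + list(eef_quat) + [gripper_state]
    for obj in object_positions:
        obs.extend(o - e for o, e in zip(obj, eef_pos))
    return obs


def apply_delta_action(eef_pos, action):
    """Apply the translation part of a delta-pose action.

    action = [dx, dy, dz, gripper_command]; rotation is omitted in this sketch.
    Returns the new end-effector position and the gripper command.
    """
    new_pos = tuple(p + d for p, d in zip(eef_pos, action[:3]))
    return new_pos, action[3]
```

With two cubes, this layout gives a 14-dimensional observation (3 + 4 + 1 + 2 × 3); the policy would output one such action per simulation step.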

With a dataset of 1K successful demonstrations and 2K iterations, we achieved a training speed of approximately 50 iterations/sec (equivalent to approximately 0.5 hours of training time on an NVIDIA RTX 4090 GPU). Averaged over 50 trials, the trained policy achieved an 84% success rate on the stacking task.

Get started

In this post, we showed how to fast-track humanoid motion policy learning with NVIDIA Isaac GR00T by generating synthetic trajectory data.

The GR00T-Teleop stack is in invite-only early access. Join the Humanoid Developer Program to stay informed about when the stack becomes available in beta.

Watch the CES 2025 keynote from NVIDIA CEO Jensen Huang and stay up to date by subscribing to our newsletter and following NVIDIA Robotics on YouTube, Discord, and our developer forums.