I was having trouble getting applications such as NetKet to detect and enable MPI.

Diagnosis

- I have installed OpenMPI and enabled CUDA during the configuration.

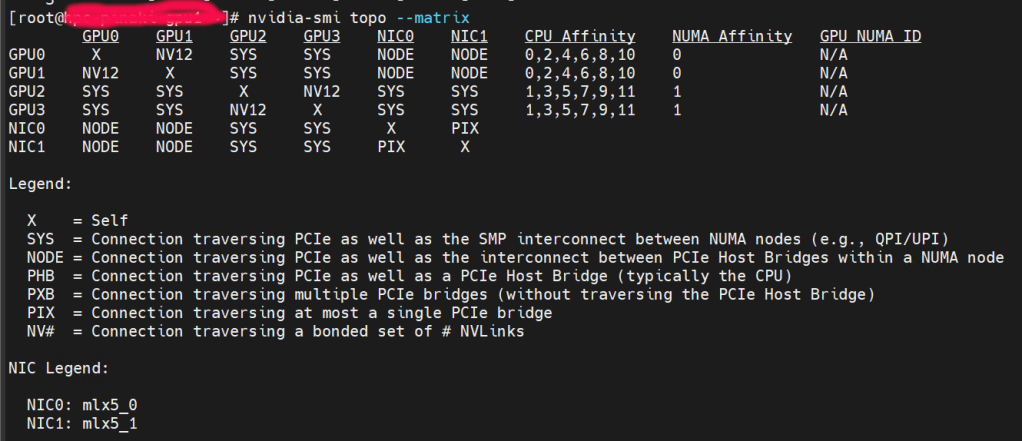
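As a minimal sketch of how the CUDA-enabled build can be confirmed from the command line, the snippet below asks ompi_info whether Open MPI was built with CUDA support. The helper name has_cuda_support is hypothetical, and the check assumes ompi_info from the same Open MPI installation is on the PATH.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the ompi_info output read from stdin
# reports that Open MPI was built with CUDA support.
has_cuda_support() {
    grep -q 'mpi_built_with_cuda_support:value:true'
}

# Only run the check when ompi_info is actually available.
if command -v ompi_info >/dev/null 2>&1; then
    if ompi_info --parsable --all | has_cuda_support; then
        echo "Open MPI reports CUDA support"
    else
        echo "Open MPI does not report CUDA support"
    fi
fi
```

If the second message is printed, OpenMPI was most likely configured without CUDA and needs to be rebuilt before looking any further.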

- The CUDA libraries, including nvidia-smi, were installed without issue. But running nvidia-smi topo --matrix, I was not able to see the NVLink connections.

In fact, when I ran NetKet on CUDA with MPI, the following error was generated:

mpirun noticed that process rank 0 with PID 0 on node gpu1 exited on signal 11 (Segmentation fault).

Solution

This forum entry provided some enlightenment. https://forums.developer.nvidia.com/t/cuda-initialization-error-on-8x-a100-gpu-hgx-server/250936

The solution was to disable Multi-Instance GPU (MIG) mode, which was enabled by default on this server. Disable it with the command below, then reboot the server.

nvidia-smi -mig 0
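After the reboot, a quick check along these lines can confirm that MIG is really off and that the GPU topology is reported again. This is only a sketch: the helper count_mig_enabled is a hypothetical name, and it assumes nvidia-smi prints the queried fields separated by a comma and a space.

```shell
#!/bin/sh
# Hypothetical helper: reads "index, current, pending" CSV rows from stdin
# and prints how many GPUs still have MIG mode currently enabled.
count_mig_enabled() {
    awk -F', ' '$2 == "Enabled" { n++ } END { print n + 0 }'
}

# Only query the driver when nvidia-smi is actually available.
if command -v nvidia-smi >/dev/null 2>&1; then
    # Number of GPUs with MIG still enabled; 0 is the expected value.
    nvidia-smi --query-gpu=index,mig.mode.current,mig.mode.pending \
        --format=csv,noheader | count_mig_enabled
    # Print the GPU interconnect matrix to look for NVLink entries.
    nvidia-smi topo --matrix
fi
```

A count greater than 0 after the reboot would suggest the mode change did not take effect on every GPU.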

Enabling Persistence Mode

Enable and start the persistence daemon so that the configuration stays in place after a reboot.

# systemctl enable nvidia-persistenced.service

# systemctl start nvidia-persistenced.service
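To check that the service is in place and that persistence mode is actually on, something like the following could be used. It is a sketch under assumptions: all_persistent is a hypothetical helper, and it expects nvidia-smi to print one line per GPU containing either Enabled or Disabled.

```shell
#!/bin/sh
# Hypothetical helper: succeeds only if stdin has at least one line and
# every line is exactly "Enabled".
all_persistent() {
    awk '$0 != "Enabled" { bad = 1 } END { exit (NR == 0 || bad) }'
}

# Report whether the service is enabled at boot and currently running.
if command -v systemctl >/dev/null 2>&1; then
    systemctl is-enabled nvidia-persistenced.service || echo "service is not enabled"
    systemctl is-active nvidia-persistenced.service || echo "service is not running"
fi

# Ask the driver for the persistence mode of every GPU.
if command -v nvidia-smi >/dev/null 2>&1; then
    if nvidia-smi --query-gpu=persistence_mode --format=csv,noheader | all_persistent; then
        echo "persistence mode is enabled on all GPUs"
    else
        echo "persistence mode is not enabled on all GPUs"
    fi
fi
```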