NVIDIA 6G Developer Day 2024 brought together members of the 6G research and development community to share insights and learn new ways of engaging with NVIDIA 6G research tools. More than 1,300 academic and industry researchers from across the world attended the virtual event. It featured presentations from NVIDIA, ETH Zürich, Keysight, Northeastern University, Samsung, Softbank, and University of Oulu. This post explores five key takeaways from the event.

1. 6G will be AI-native and implement AI-RAN

It’s expected that 6G will ride the AI wave to unlock new potential for both consumers and enterprises, and transform the telecommunications infrastructure. This was the key message of the keynote presented by NVIDIA SVP Ronnie Vasishta. With the rapidly growing adoption of generative AI and AI applications, AI-enhanced endpoints are interacting and making decisions on the move, creating huge volumes of voice, video, data, and AI traffic on the telecommunications network.

The emergence of AI traffic, generated from AI applications at the edge, and requiring differing levels of cost economics, energy economics, latency, reliability, security and data sovereignty, provides new opportunities and challenges for the telecommunications infrastructure. This requires the underlying infrastructure to be designed and built to be AI-native, natively leveraging AI capabilities and supporting AI traffic.

The keynote unveiled the strategic and technical drivers for an AI-native 6G infrastructure. Telcos want to maximize infrastructure efficiency with higher spectral efficiency, throughput, and capacity. Equally, telcos seek to maximize return on investment with better monetization, agility to introduce new features, and support for growth of traffic and new services on the RAN.

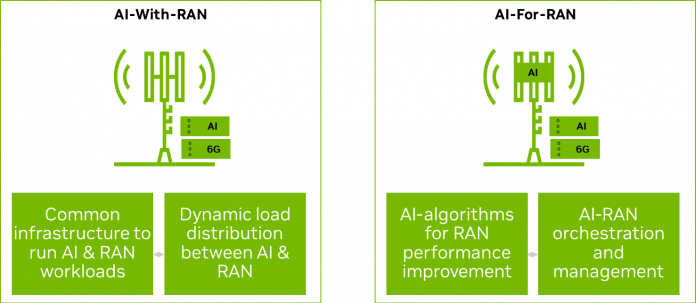

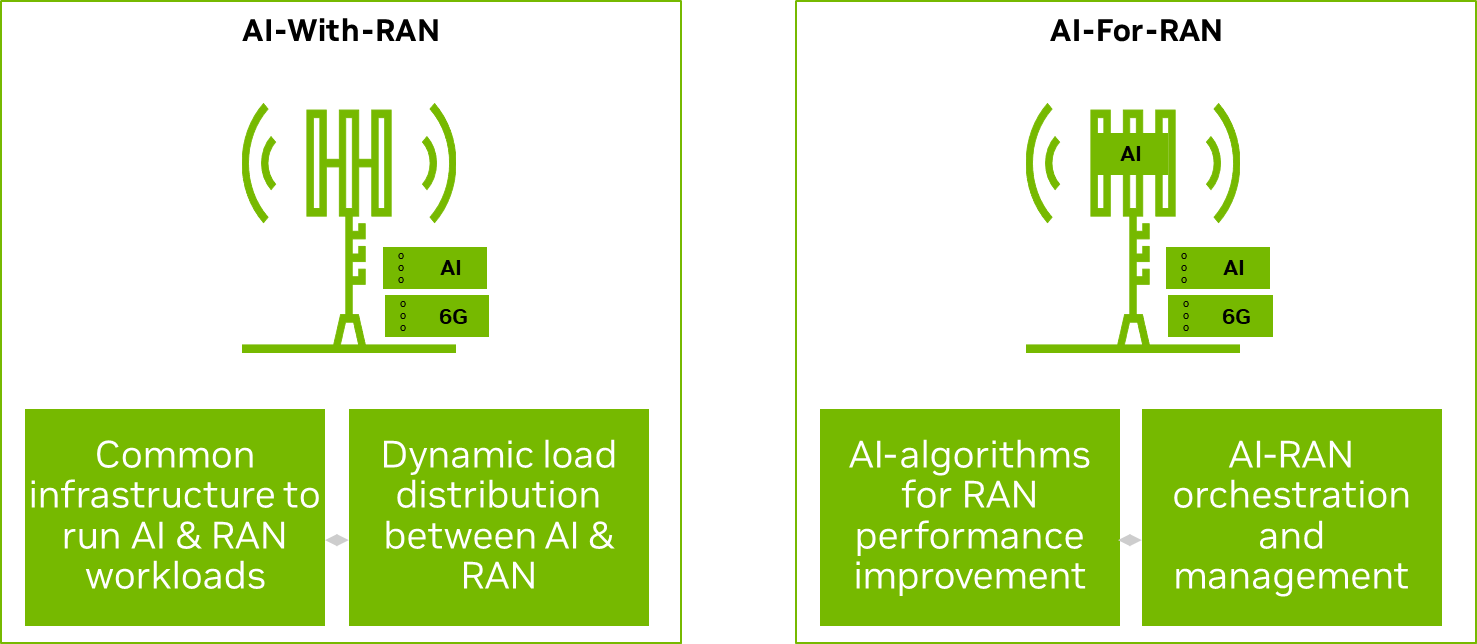

AI-RAN offers a pathway to realize the benefits of the AI-native infrastructure with an AI-With-RAN and an AI-For-RAN implementation (Figure 1). AI-With-RAN encompasses what the AI-RAN Alliance describes as AI-on-RAN and AI-and-RAN. It enables telcos to move from single purpose to multipurpose networks that can dynamically run AI and RAN workloads on a software-defined, unified, and accelerated infrastructure.

With AI-For-RAN, RAN-specific AI algorithms are deployed on the same infrastructure to improve RAN performance. AI-RAN will revolutionize the telecommunications industry, enabling telcos to unlock new revenue streams and deliver enhanced experiences through generative AI, robotics, and automation tools.

2. AI-RAN models the three computer problems of AI for the physical world

AI-RAN is the technology framework to build the AI-native 6G and is a good model of how AI is integral to developing, simulating, and deploying solutions for the physical world. This aligns with the classic three computer problems:

- Creating AI models using huge amounts of data

- Testing and improving network behavior with large-scale simulations, especially for site-specific data

- Deploying and operating a live network

For 6G, this means creating and developing AI models for 6G; simulating, modeling, and improving an AI-native 6G; and deploying and operating an AI-native 6G.

The session on Inventing 6G with NVIDIA AI Aerial Platform introduced NVIDIA AI Aerial as a platform for implementing AI-RAN, with three components that address the three computer problems.

NVIDIA AI Aerial provides a set of tools for algorithm development, system-level integration and benchmarking, as well as production-level integration and benchmarking. Introduced for 5G, these tools cover 5G-advanced and lead the path towards 6G.

3. GPU-based accelerated computing is best suited for deploying 6G

6G AI-RAN will continue the trend from 5G towards a software-defined, high-performance RAN running on COTS infrastructure. It will also be fully AI-native, O-RAN based, with hardware/software disaggregation, and multipurpose in nature to support both AI and RAN workloads. Given these requirements, it is increasingly clear that the compute platform on which 6G AI-RAN is deployed will need new approaches, both to realize new opportunities for telcos and to handle vRAN baseband challenges.

Among all the competing solutions to match these industry requirements, there are three key reasons GPU acceleration is the best computer platform for deploying 6G, as explained in the session on CUDA/GPU System for Low-Latency RAN Compute. Specifically, GPUs:

- Deliver very high throughput to handle heavy traffic. This is possible because, thanks to parallel computing, the GPU excels at managing multiple data streams simultaneously, making better use of multiple Physical Resource Blocks (PRBs), and handling complex algorithms for tasks such as beamforming.

- Run low-latency and real-time critical workloads efficiently. GPU SIMT (single instruction, multiple threads) architecture is optimized for linear algebra operations. With additional CUDA features, this enables a software-defined digital signal processing compute machine to run physical layer workloads efficiently.

- Are ideal as the multipurpose platform for AI and RAN, thanks to their well-established suitability for AI workloads. This makes them significantly better at delivering a profitable AI-RAN and provides a platform with sustainable energy efficiency, measured in Gbps/watt. For more details from the Softbank announcement in November 2024, see AI-RAN Goes Live and Unlocks a New AI Opportunity for Telcos.
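The first point above rests on the fact that much physical layer work is independent across PRBs. The following is a minimal sketch of that decomposition in plain Python, not GPU code and not part of any NVIDIA library. The function names are hypothetical, and the per-PRB computation (average power) is just a stand-in for heavier per-PRB kernels.

```python
# Illustrative only: plain Python standing in for work a GPU would run in parallel.
SUBCARRIERS_PER_PRB = 12  # a 5G NR resource block spans 12 subcarriers


def split_into_prbs(samples):
    """Group a flat list of per-subcarrier samples into PRB-sized chunks."""
    if len(samples) % SUBCARRIERS_PER_PRB != 0:
        raise ValueError("sample count must be a multiple of 12")
    return [
        samples[i:i + SUBCARRIERS_PER_PRB]
        for i in range(0, len(samples), SUBCARRIERS_PER_PRB)
    ]


def prb_power(prb):
    """Average signal power of one PRB; depends only on that PRB's samples."""
    return sum(abs(s) ** 2 for s in prb) / len(prb)


def per_prb_power(samples):
    # Each prb_power call is independent of the others, so on a GPU every PRB
    # (or every subcarrier) could be assigned to its own thread or thread block.
    return [prb_power(prb) for prb in split_into_prbs(samples)]
```

Because no PRB reads another PRB's data, the loop in `per_prb_power` has no ordering constraints, which is the property that lets this kind of work spread across many GPU threads.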

NVIDIA Aerial CUDA Accelerated RAN running on the Aerial RAN Computer-1 offers a high-performance and scalable GPU-based solution for the 6G AI-RAN. It includes a set of software-defined RAN libraries (cuPHY, cuMAC, pyAerial) optimized to run on multiple GPU-accelerated computing configurations.

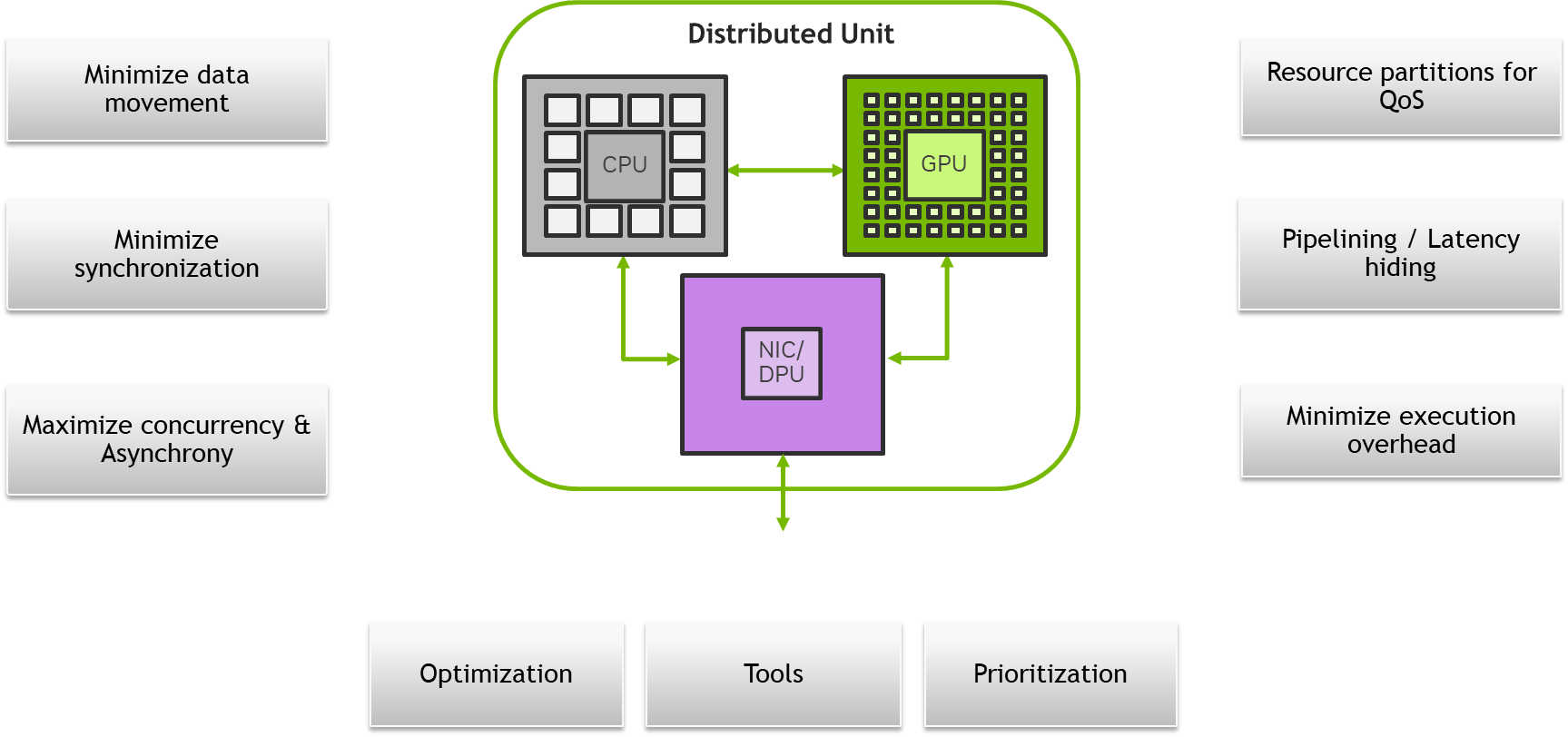

Figure 3 shows the different strategies required to scale such a system from low-density to medium-density and high-density configurations, and across the CPU, GPU, and NIC subsystems. It highlights strategies to minimize data movement, synchronization, and execution overhead; to maximize concurrency and asynchrony; and to use tools for optimization, pipelining, prioritization, and resource partitioning for QoS.

4. Digital twins will be an integral part of 6G AI-RAN

At NVIDIA GTC 2024, NVIDIA CEO Jensen Huang said in a keynote that “we believe that everything manufactured will have digital twins.” This is increasingly a reality across many industrial sectors. For telecommunications, 6G will be the first cellular technology generation to be created and simulated as a digital twin before it is deployed. This will create a continuum among the design, deployment, and operations phases for 6G RAN products.

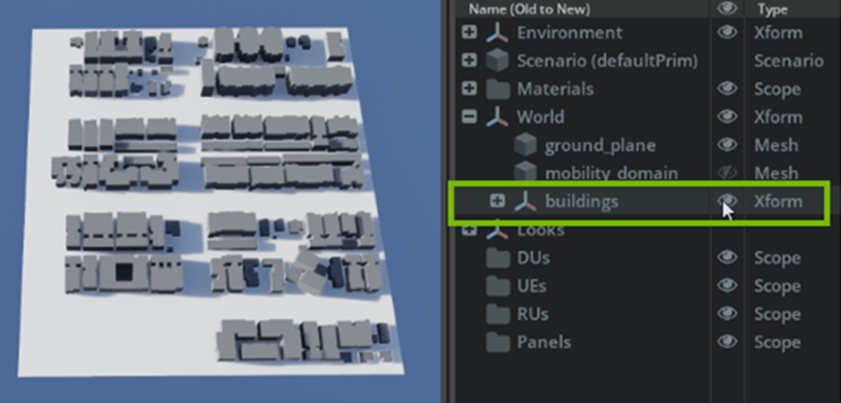

The session on Building a RAN Digital Twin explored how to build a RAN digital twin using NVIDIA Aerial Omniverse Digital Twin (AODT) and how this enables new AI techniques and algorithms for 6G (Figure 4). AODT is a next-generation, system-level simulation platform for 5G/6G. It is based on, and benefits from the richness of, the NVIDIA Omniverse platform. In addition, Keysight showcased how they are using AODT as part of their RF Raytracing Digital Twin Solution in the session, 6G Developer Spotlight Session 1.

Both sessions showcased how aspects of the physical world (including 5G/6G RAN, user devices, and radio frequency signals) and the digital twin world (including the electromagnetic engine, mobility model for user devices, geospatial data, antenna data, and channel emulator) are combined to create and simulate a RAN digital twin. In doing so, the RAN digital twin becomes a tool to benchmark system performance and explore machine learning-based wireless communication algorithms in real-world conditions.

5. The industry needs platforms for AI training for 6G AI-RAN

As AI becomes integral to 6G design and development, the industry increasingly needs training platforms and testbeds. Such platforms and testbeds provide an opportunity to take 6G AI/ML from simulation to reality. Ongoing research areas for native AI include waveform learning, MAC acceleration, site-specific optimizations, beamforming, spectrum sensing, and semantic communication.

The session on AI and Radio Frameworks for 6G explored how AI and radio frameworks can be used for 6G R&D with NVIDIA Aerial AI Radio Frameworks and its tools pyAerial, Aerial Data Lake, and NVIDIA Sionna.

- pyAerial is a Python library of physical layer components that can be used as part of the workflow in taking a design from simulation to real-time operation. It provides end-to-end verification of a neural network integration into a physical layer pipeline and helps bridge the gap from the world of training and simulation in TensorFlow and PyTorch to real-time operation in an over-the-air testbed.

- Aerial Data Lake is a data capture platform supporting the capture of OTA radio frequency (RF) data from vRAN networks built on the Aerial CUDA-Accelerated RAN. It consists of a data capture application running on the base station distributed unit, a database of samples collected by the app, and an API for accessing the database.

- Sionna is a GPU-accelerated open-source library for link-level simulations. It enables rapid prototyping of complex communication system architectures and provides native support for the integration of machine learning in 6G signal processing.
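To make "link-level simulation" concrete, here is a minimal sketch of the idea in plain Python. It does not use the Sionna API. It simulates uncoded BPSK over an AWGN channel and compares the measured bit error rate with the known closed-form result. The function names and default parameters are illustrative assumptions.

```python
import math
import random


def simulate_bpsk_ber(ebn0_db, num_bits=20000, seed=0):
    """Monte Carlo bit error rate of uncoded BPSK over an AWGN channel."""
    rng = random.Random(seed)
    ebn0 = 10 ** (ebn0_db / 10)
    # With unit-energy symbols, the real noise component has variance N0/2.
    noise_std = math.sqrt(1 / (2 * ebn0))
    errors = 0
    for _ in range(num_bits):
        bit = rng.getrandbits(1)
        symbol = 1.0 if bit else -1.0        # BPSK mapping
        received = symbol + rng.gauss(0.0, noise_std)
        detected = 1 if received > 0 else 0  # hard decision
        errors += detected != bit
    return errors / num_bits


def theoretical_bpsk_ber(ebn0_db):
    """Closed-form BER for BPSK in AWGN: 0.5 * erfc(sqrt(Eb/N0))."""
    ebn0 = 10 ** (ebn0_db / 10)
    return 0.5 * math.erfc(math.sqrt(ebn0))


if __name__ == "__main__":
    for snr_db in (0, 2, 4, 6):
        print(snr_db, simulate_bpsk_ber(snr_db), theoretical_bpsk_ber(snr_db))
```

A library such as Sionna runs this kind of transmit, channel, and receive loop in batched form on the GPU and with far richer channel models. The sketch only shows the structure of the experiment.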

The session on Setting Up 6G Research Testbeds explored how to set up a 6G research testbed to accelerate innovation, drive standardization, and provide real-world testing and performance benchmarking with the NVIDIA Aerial RAN CoLab (ARC-OTA).

In the 6G Developer Spotlight Session 1 and 6G Developer Spotlight Session 2, Softbank, Samsung, University of Oulu, Northeastern University (NEU), and ETH Zürich showcased how they are working with the NVIDIA AI and radio frameworks plus ARC-OTA to accelerate their 6G research (Table 1). Most commonly, these research groups presented how they are using AI for the complex and challenging problem of channel estimation, and how they are using ARC-OTA to close the “reality gap” between simulation and real-world OTA.

| Organization | Projects | NVIDIA tools used |
| --- | --- | --- |
| Samsung | AI channel estimation; Lab-to-field methodology; Site-specific optimizations; Close reality gap between sim and real-world OTA | ARC-OTA, pyAerial, ADL, Sionna, SionnaRT, AODT in the future |
| ETH | NN PUSCH; Deep-unfolding for iterative detector-decoder | ARC-OTA, pyAerial, ADL, Sionna, SionnaRT, AODT in the future |
| NEU | x5G 8-node ARC-OTA testbed; RIC and real-time apps (dApps); Deployment automation on OpenShift; O-RAN intelligent orchestrator | ARC-OTA, pyAerial, ADL, Sionna, SionnaRT, AODT |
| University of Oulu | Sub-THz | Sionna, ARC-OTA in the future |
| Keysight | Product development: Deterministic channel modeling for 6G; AI-assisted channel modeling; Physical digital twin for 6G applications | AODT: GIS + mobility model + RT |
| Softbank | AI-for-RAN project: ML channel estimation and interpolation | ARC-OTA, pyAerial |
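Channel estimation is the problem these groups most often address with AI. For context, here is a minimal sketch of a classical, non-AI baseline: least-squares (LS) estimation at pilot subcarriers followed by linear interpolation. This is not any presenter's method. The function name, argument layout, and the assumption of sorted pilot positions are all illustrative.

```python
def ls_channel_estimate(received, pilots, pilot_positions, num_subcarriers):
    """LS channel estimate at pilot subcarriers, linearly interpolated elsewhere.

    received: complex samples indexed by subcarrier
    pilots: known transmitted pilot symbols, one per pilot position
    pilot_positions: ascending subcarrier indices that carry pilots
    """
    if len(pilot_positions) < 2:
        raise ValueError("need at least two pilots to interpolate")
    # LS estimate at each pilot subcarrier: h = y / x.
    h_pilot = [received[k] / x for k, x in zip(pilot_positions, pilots)]

    estimate = []
    seg = 0
    for k in range(num_subcarriers):
        # Advance to the pilot pair that brackets k. Subcarriers outside the
        # pilot range reuse the nearest pair, which extrapolates linearly.
        while seg < len(pilot_positions) - 2 and k > pilot_positions[seg + 1]:
            seg += 1
        k0, k1 = pilot_positions[seg], pilot_positions[seg + 1]
        t = (k - k0) / (k1 - k0)
        estimate.append(h_pilot[seg] + t * (h_pilot[seg + 1] - h_pilot[seg]))
    return estimate
```

This baseline ignores noise statistics and assumes the channel varies linearly between pilots. Those limitations are the kind that learned estimators, and over-the-air validation on a testbed, aim to address.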

What’s next?

The 6G Developer Day is one of the channels for engaging with the 6G research and development community and will become a feature of the NVIDIA event calendar. Check out the NVIDIA 6G Developer Day playlist to view all the sessions presented at the event on demand. For more information, see the NVIDIA Aerial FAQ, which is based on the event Q&A. To engage and connect with 6G researchers, join the NVIDIA 6G Developer Program.