WEKA, a pioneer in scalable software-defined data platforms, and NVIDIA are collaborating to unite WEKA’s state-of-the-art data platform solutions with powerful NVIDIA BlueField DPUs.

The WEKA Data Platform advanced storage software unlocks the full potential of AI and performance-intensive workloads, while NVIDIA BlueField DPUs revolutionize data access, movement, and security. The integration of these cutting-edge technologies is poised to usher in an unprecedented era of efficiency and speed in data management with the potential to reshape the landscape of high-performance data access.

Solving for efficient AI workflows

The rapid rise of AI is driving exponential growth in computing power and networking speeds, placing extraordinary demands on storage resources. While NVIDIA GPUs provide impressive levels of scalable, efficient computing power, they also require high-speed access to data.

The collaboration between WEKA and NVIDIA tackles this challenge. Together, they address the critical needs for high-bandwidth networked access to petabytes of data for model training and inferencing tasks, including retrieval-augmented generation (RAG).

The joint solution is tailored to handle the complexities of rich image and video data, vector databases, and extensive metadata preservation. This ensures seamless and efficient AI workflows, making the integration timely and essential for the future of data-driven innovation.

Enhancing throughput, latency, and security

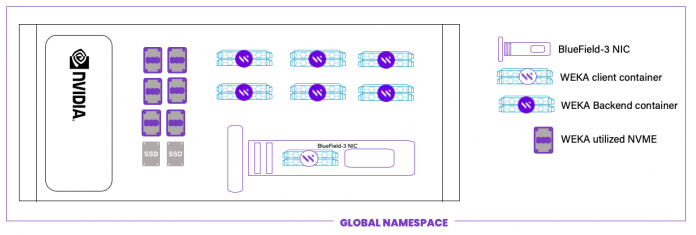

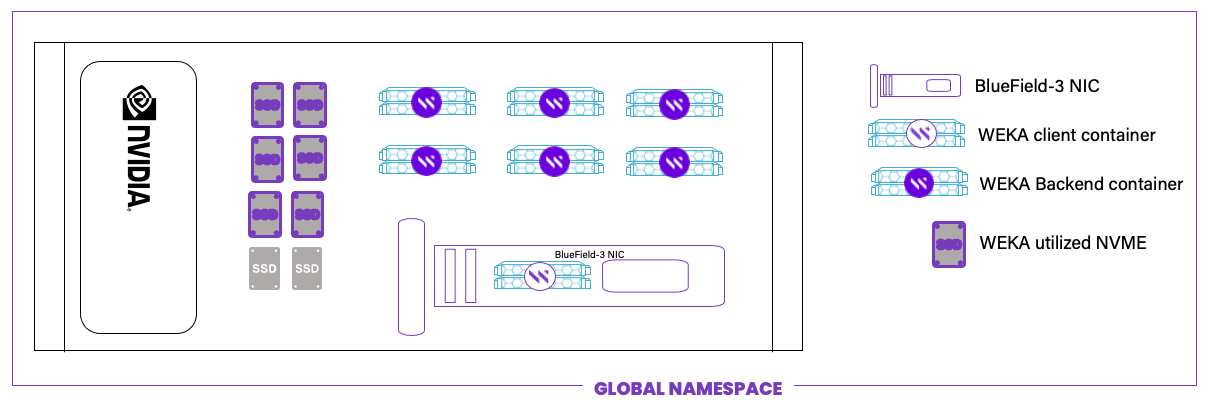

At the center of the collaboration is the integration of the WEKA client, complete with Virtio-FS code. It runs directly on the BlueField DPU, not on the host server’s CPU. This innovative approach offers several key advantages:

- Improved throughput: The BlueField hardware acceleration capabilities enable faster data transfer rates.

- Reduced latency: By running the WEKA client on the BlueField DPU, data access operations bypass the host CPU, significantly reducing latency.

- CPU offload: By moving the WEKA client to the DPU, valuable host CPU resources are freed for application processing, potentially improving overall system performance and efficiency.

- Enhanced security: Offloading storage operations to the DPU creates an additional isolation layer, enhancing overall system security.

The Virtio-FS implementation handles communication between the host system and networked data, enabling efficient file system operations without sacrificing performance. By running the WEKA client on the BlueField DPU, file system tasks are offloaded from the host CPU, reducing overhead and freeing up to 20% of CPU capacity for applications.
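To put the "up to 20%" figure in concrete terms, here is a minimal back-of-the-envelope sketch. The core counts are hypothetical examples, not measured configurations, and 20% is treated as an upper bound rather than a guaranteed result.

```python
def freed_cores(total_cores: int, freed_fraction: float = 0.20) -> float:
    """Upper bound on host cores returned to applications.

    freed_fraction defaults to the "up to 20%" figure quoted above;
    the actual saving depends on the workload and is an assumption here.
    """
    if not 0.0 <= freed_fraction <= 1.0:
        raise ValueError("freed_fraction must be between 0 and 1")
    return total_cores * freed_fraction


# Hypothetical host sizes, chosen only for illustration.
for cores in (32, 64, 128):
    print(f"{cores} host cores -> up to {freed_cores(cores):.1f} cores freed")
```

On a hypothetical 64-core host, for example, the upper bound works out to 12.8 cores available for application processing.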

This approach also ensures local file system efficiency in virtualized environments and cross-platform compatibility. Additionally, Virtio-FS is designed to adapt to evolving DPU technologies, with the NVIDIA DOCA software framework available to simplify future development processes and compatibility with new generations of NVIDIA BlueField DPUs.

Using Virtio-FS with NVIDIA BlueField DPUs combines the strengths of efficient, direct file sharing with powerful offloading and acceleration capabilities. This synergy enhances performance, reduces system complexity, and supports modern, scalable architectures ideal for AI workloads.

Hardware-accelerated data processing

Storage for AI training and inferencing presents unique challenges, each with distinct requirements. Training demands high throughput for large datasets and write-intensive operations, while inference requires exceptional read performance and low latency for real-time responsiveness. Both scenarios often rely on a shared filesystem. The NVIDIA BlueField DPU optimizes workloads for training and inferencing by offering hardware-accelerated data processing.

Optimizing for AI model training

AI model training places substantial demands on storage, requiring swift access to vast data pools to keep GPUs productive. The training process involves periodic reads from very large data pools as well as frequent write operations such as logging, saving checkpoints, and recording metrics. The BlueField DPU provides strong write performance, optimized read/write balancing, and high IOPS.
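The checkpoint-style write pattern described above can be approximated with a small probe. This is a minimal sketch, not a benchmark from WEKA or NVIDIA: the function name, file name, and sizes are hypothetical, and it writes to a temporary local directory by default. Pointing it at a directory on a shared file system mount would be needed to say anything about that file system.

```python
import os
import tempfile
import time


def write_checkpoint(directory: str, size_mib: int = 64, chunk_mib: int = 4) -> float:
    """Write one synthetic checkpoint file and return throughput in MiB/s.

    size_mib should be a multiple of chunk_mib; sizes are illustrative only.
    """
    chunk = os.urandom(chunk_mib * 1024 * 1024)
    path = os.path.join(directory, "checkpoint.bin")  # hypothetical file name
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(size_mib // chunk_mib):
            f.write(chunk)
        f.flush()
        os.fsync(f.fileno())  # include the time needed to persist the data
    elapsed = time.perf_counter() - start
    return size_mib / elapsed


if __name__ == "__main__":
    # Replace the temporary directory with a mounted path to test real storage.
    with tempfile.TemporaryDirectory() as target:
        print(f"sequential write: {write_checkpoint(target):.0f} MiB/s")
```

Calling `fsync` before stopping the timer matters here: without it, the number mostly reflects how fast the operating system can buffer writes in memory rather than how fast storage accepts them.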

Low latency and high read performance for inference

AI inference presents different storage demands, requiring swift access to small amounts of data from multiple sources to maintain low user response times. Low latency is crucial for real-time or near real-time processing, as delays can impact the responsiveness and effectiveness of the application. Inference often involves using multiple trained models and additional data sources to make quick predictions or decisions. The BlueField DPU provides the fast read performance that is essential to keep data flowing smoothly, enabling accurate outputs for time-sensitive AI applications.
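The inference-style access pattern, many small reads where tail latency matters, can be sketched in the same spirit. The function name, block size, and read count below are assumptions chosen for illustration. Run against a freshly written local file, the results mostly reflect the operating system page cache rather than storage, so treat this as a shape of the measurement, not a result.

```python
import os
import random
import statistics
import tempfile
import time


def read_latencies_us(path: str, reads: int = 200, block: int = 4096, seed: int = 0) -> list:
    """Time small reads at random offsets; returns per-read latency in microseconds."""
    size = os.path.getsize(path)
    rng = random.Random(seed)  # fixed seed so runs use the same offsets
    samples = []
    with open(path, "rb", buffering=0) as f:  # unbuffered at the Python level
        for _ in range(reads):
            offset = rng.randrange(0, size - block + 1)
            start = time.perf_counter()
            f.seek(offset)
            f.read(block)
            samples.append((time.perf_counter() - start) * 1e6)
    return samples


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as target:
        data_path = os.path.join(target, "features.bin")  # hypothetical data file
        with open(data_path, "wb") as f:
            f.write(os.urandom(16 * 1024 * 1024))
        samples = read_latencies_us(data_path)
        p99 = statistics.quantiles(samples, n=100)[98]
        print(f"median {statistics.median(samples):.1f} us, p99 {p99:.1f} us")
```

Reporting the 99th percentile alongside the median reflects the point made above: for interactive inference, occasional slow reads affect user-visible response time more than the average does.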

Balancing training and inference for AI performance and efficiency

The specific stresses differ slightly between training and inference. Balancing these requirements is critical to building efficient, resilient AI storage architectures. Integrating the WEKA Data Platform client with the NVIDIA BlueField DPU improves storage performance for both training and inference workloads and also enhances the efficiency and security of the solution.

Conclusion

Running the WEKA client on the NVIDIA BlueField DPU provides file access to the WEKA file system in a way that unlocks the full potential of performance-intensive workloads and benefits data access, movement, and security.

At the Supercomputing 2024 conference, WEKA and NVIDIA showcased the practical benefits of the integrated solution with a live demonstration. Attendees witnessed accelerated AI data processing through improved data access speeds and efficient workload handling. Our expert team was available to answer questions and provide insights into how this solution can transform your data center operations.

Learn more about the collaboration between WEKA and NVIDIA: