MiniMax, a Singapore-based AI startup backed by Alibaba and Tencent, has unveiled a new series of AI models featuring record-breaking 4 million token context windows.

The release of MiniMax-Text-01 and MiniMax-VL-01 positions the company as a serious competitor to established players like OpenAI and Google, offering advanced capabilities for applications requiring sustained memory and extensive input handling.

The models, designed to handle tasks involving long documents, complex reasoning, and multimodal inputs, mark a leap forward in AI scalability and affordability. MiniMax’s announcement highlights its focus on AI agent development, addressing the growing demand for systems capable of extended context processing.

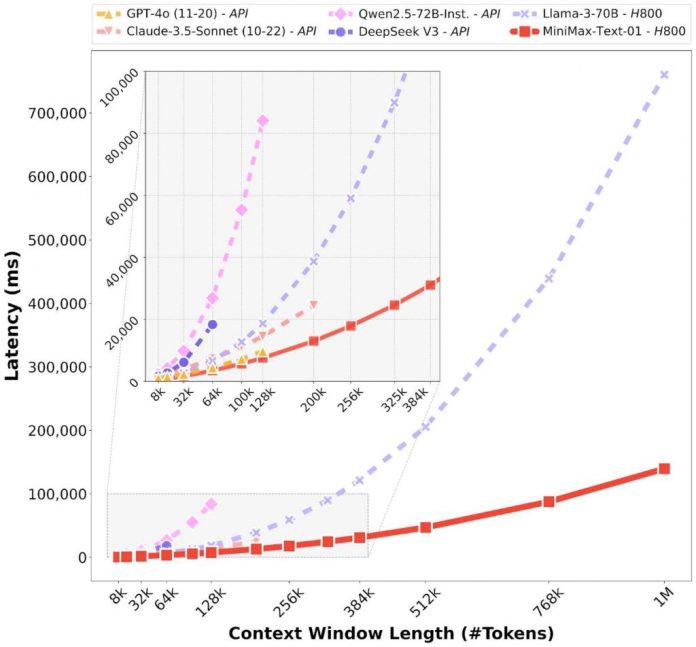

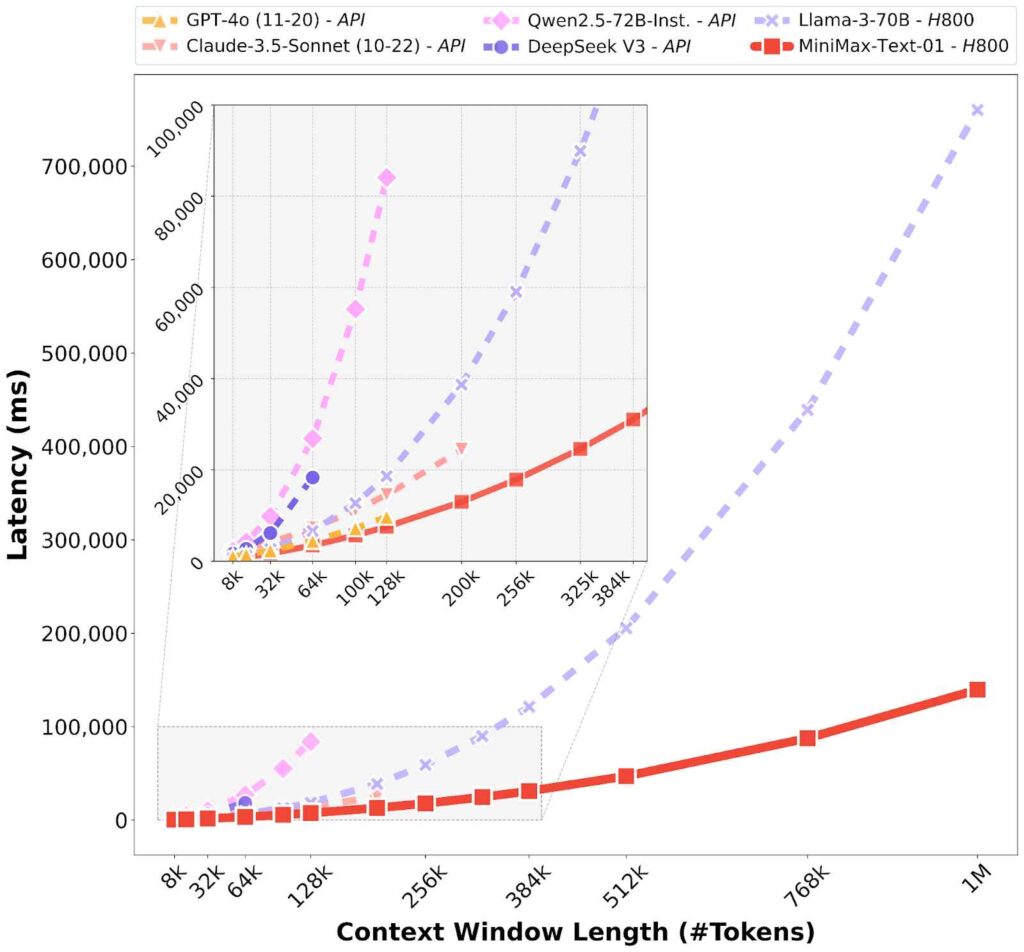

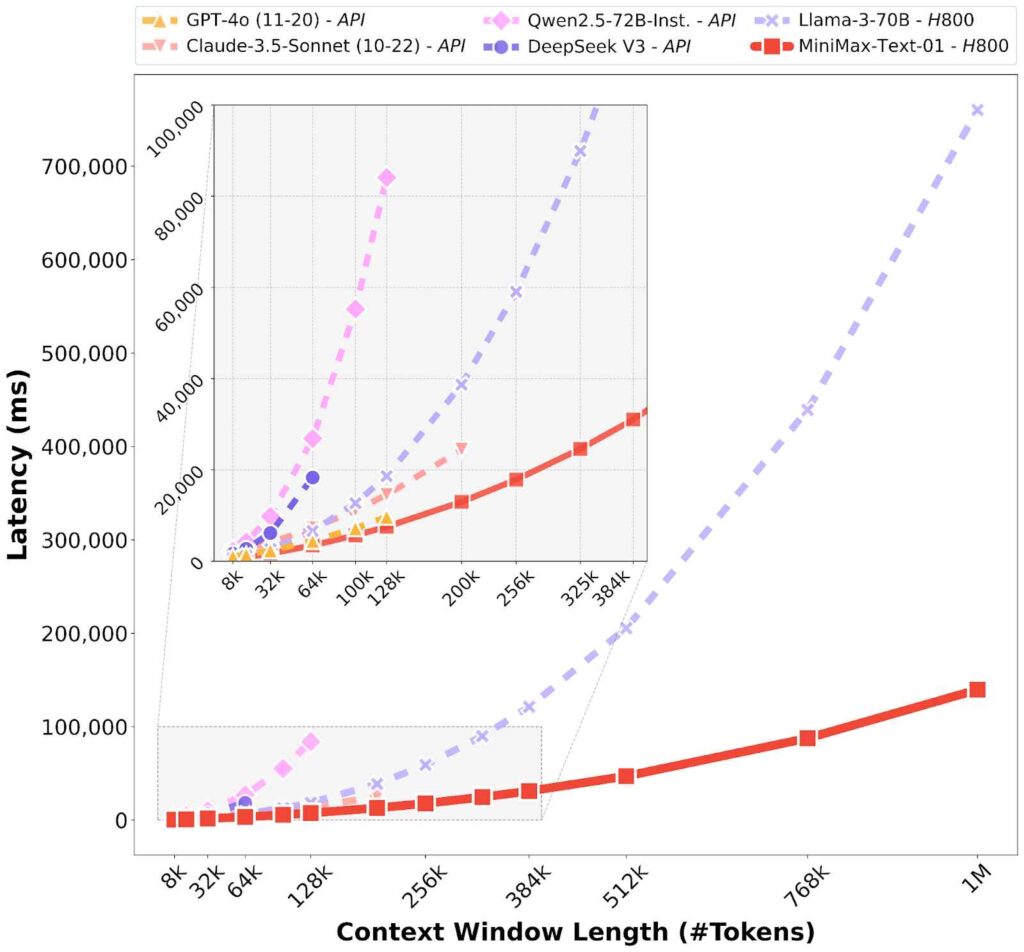

The MiniMax-Text-01 model features a total of 456 billion parameters, with 45.9 billion activated per token during inference. Designed for efficient long-context processing, it employs a hybrid attention mechanism that combines linear-attention and softmax-attention layers to improve scalability. The model supports a context window of up to 1 million tokens during training, extending to 4 million tokens in inference.

Equipped with a lightweight Vision Transformer (ViT) module, the MiniMax-VL-01 model is tailored for multimodal applications. It was trained on 512 billion vision-language tokens through a four-stage training pipeline intended to deliver robust performance on tasks that combine visual and textual data.
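To make "vision-language tokens" concrete, the sketch below shows the standard ViT step of cutting an image into fixed-size, non-overlapping patches, each of which becomes one token. The 14-pixel patch size and the 336x336 input are hypothetical values chosen for illustration, not MiniMax-VL-01's published settings, and the function names are illustrative.

```python
# Illustrative sketch: how a Vision Transformer turns an image into tokens.
# The 14-pixel patch size and 336x336 resolution are hypothetical examples,
# not MiniMax-VL-01's documented configuration.


def vit_patch_tokens(height: int, width: int, patch: int = 14) -> int:
    """Number of patch tokens a ViT produces for an image of the given size.

    Assumes both sides are multiples of `patch`; each non-overlapping
    patch becomes one token.
    """
    if height % patch or width % patch:
        raise ValueError("image sides must be multiples of the patch size")
    return (height // patch) * (width // patch)


def patchify(pixels, patch):
    """Split a 2-D grid of pixel values into flattened, non-overlapping patches.

    Assumes the grid's height and width are multiples of `patch`.
    """
    rows, cols = len(pixels), len(pixels[0])
    patches = []
    for r in range(0, rows, patch):
        for c in range(0, cols, patch):
            patches.append(
                [pixels[r + i][c + j] for i in range(patch) for j in range(patch)]
            )
    return patches


if __name__ == "__main__":
    print(vit_patch_tokens(336, 336))  # 24 * 24 = 576 tokens
    tiny = [[r * 4 + c for c in range(4)] for r in range(4)]
    print(len(patchify(tiny, 2)))  # 4 patches of 4 values each
```

In a real model each flattened patch is then projected into an embedding vector before it joins the text tokens, so image resolution directly drives how much of the context window a picture consumes.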

What 4 Million Tokens Mean for AI Development

The context window in AI models determines how much information they can process simultaneously, with each token representing a fragment of data such as a word or punctuation mark.

MiniMax-Text-01’s 4 million token capacity significantly surpasses industry standards, including OpenAI’s GPT-4o (128,000 tokens) and Google’s Gemini 1.5 Pro (2 million tokens).

According to MiniMax, this extended capacity allows its models to process volumes of data equivalent to several books in a single exchange.
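A rough way to sanity-check a claim like this is to convert tokens to words and words to books. The sketch below assumes about 0.75 English words per token and a hypothetical 90,000-word book; both are approximations, and real ratios depend on the tokenizer and the language of the text.

```python
# Back-of-the-envelope estimate of how much text fits in a context window.
# WORDS_PER_TOKEN and WORDS_PER_BOOK are rough assumptions for English prose,
# not figures published by MiniMax.

WORDS_PER_TOKEN = 0.75   # common rule of thumb for English text
WORDS_PER_BOOK = 90_000  # hypothetical length of a typical novel


def tokens_to_words(tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> float:
    """Approximate word count represented by a number of tokens."""
    return tokens * words_per_token


def books_that_fit(context_tokens: int,
                   words_per_token: float = WORDS_PER_TOKEN,
                   words_per_book: int = WORDS_PER_BOOK) -> float:
    """Approximate number of book-length texts that fit in the window."""
    return tokens_to_words(context_tokens, words_per_token) / words_per_book


if __name__ == "__main__":
    for window in (1_000_000, 4_000_000):
        print(f"{window:>9,} tokens ~ {books_that_fit(window):.1f} books")
```

Under these assumptions a 4-million-token window holds roughly 3 million words, on the order of 30 novels, so "several books" is, if anything, a conservative description.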

The company stated on its X account, “MiniMax-01 efficiently processes up to 4M tokens—20 to 32 times the capacity of other leading models. We believe MiniMax-01 is poised to support the anticipated surge in agent-related applications in the coming year, as agents increasingly require extended context handling capabilities and sustained memory.”
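The "20 to 32 times" figure can be reproduced with simple division. The quote does not name the models it compares against, so the window sizes below are assumptions: 128,000 tokens is GPT-4o's context window and 200,000 tokens is Claude 3.5 Sonnet's.

```python
# Check the "20 to 32 times" claim against competitor context windows.
# The competitor windows are assumptions about what MiniMax compared against;
# the quoted announcement does not name the models.

MINIMAX_INFERENCE_WINDOW = 4_000_000

COMPETITOR_WINDOWS = {
    "128K-token model (e.g., GPT-4o)": 128_000,
    "200K-token model (e.g., Claude 3.5 Sonnet)": 200_000,
}


def capacity_ratio(ours: int, theirs: int) -> float:
    """How many times larger one context window is than another."""
    return ours / theirs


if __name__ == "__main__":
    for name, window in COMPETITOR_WINDOWS.items():
        ratio = capacity_ratio(MINIMAX_INFERENCE_WINDOW, window)
        print(f"{name}: {ratio:.1f}x")
```

The results are 31.25x and 20x, matching the quoted range. By the same arithmetic, Gemini 1.5 Pro's 2-million-token window is half of MiniMax-01's, so the 20-to-32x comparison cannot include that model.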

MiniMax announced the release on X (@MiniMax__AI) on January 14, 2025: “MiniMax-01 is Now Open-Source: Scaling Lightning Attention for the AI Agent Era. We are thrilled to introduce our latest open-source models: the foundational language model MiniMax-Text-01 and the visual multi-modal model MiniMax-VL-01.”

This capability opens doors for applications in fields such as research analysis, legal document processing, and AI-driven simulations, where handling large datasets is essential.

The Technology Behind MiniMax-01

At the heart of MiniMax’s new models lies its Lightning Attention architecture, a hybrid design that combines linear attention layers with conventional softmax attention layers. In standard transformers, the computational cost of attention grows quadratically with input length; Lightning Attention, a form of linear attention, achieves near-linear scaling, enabling efficient processing of long sequences.
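The scaling difference shows up in a small sketch. Softmax attention compares every position with every earlier one, while linear attention replaces the softmax with a feature map so that a fixed-size running state can be updated once per token. The code below is a generic textbook causal linear attention with an elu(x)+1 feature map; it is not MiniMax's Lightning Attention, which is a more elaborate, hardware-optimized variant, and all function names and sample values are illustrative.

```python
# Causal softmax attention (quadratic in sequence length) versus a generic
# causal linear attention (linear in sequence length). This is a textbook
# linear-attention recurrence, NOT MiniMax's Lightning Attention kernel.
import math

Vec = list[float]


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax_attention(q: list[Vec], k: list[Vec], v: list[Vec]) -> list[Vec]:
    """Each position attends to all earlier positions: O(n^2 * d) work."""
    scale = 1 / math.sqrt(len(q[0]))
    out = []
    for i in range(len(q)):
        scores = [dot(q[i], k[j]) * scale for j in range(i + 1)]
        m = max(scores)  # subtract the max for numerical stability
        w = [math.exp(s - m) for s in scores]
        z = sum(w)
        out.append([sum(w[j] * v[j][c] for j in range(i + 1)) / z
                    for c in range(len(v[0]))])
    return out


def phi(x: Vec) -> Vec:
    """elu(x) + 1 feature map, which keeps every feature positive."""
    return [xi + 1 if xi > 0 else math.exp(xi) for xi in x]


def linear_attention(q: list[Vec], k: list[Vec], v: list[Vec]) -> list[Vec]:
    """Running-state recurrence: O(n * d^2) work, fixed-size state per step."""
    d_k, d_v = len(k[0]), len(v[0])
    state = [[0.0] * d_v for _ in range(d_k)]  # running sum of phi(k_j) outer v_j
    norm = [0.0] * d_k                          # running sum of phi(k_j)
    out = []
    for qi, ki, vi in zip(q, k, v):
        fk = phi(ki)
        for a in range(d_k):
            norm[a] += fk[a]
            for c in range(d_v):
                state[a][c] += fk[a] * vi[c]
        fq = phi(qi)
        denom = dot(fq, norm)
        out.append([sum(fq[a] * state[a][c] for a in range(d_k)) / denom
                    for c in range(d_v)])
    return out


def linear_attention_quadratic(q: list[Vec], k: list[Vec], v: list[Vec]) -> list[Vec]:
    """The same linear attention computed the O(n^2) way, for checking."""
    out = []
    for i in range(len(q)):
        w = [dot(phi(q[i]), phi(k[j])) for j in range(i + 1)]
        z = sum(w)
        out.append([sum(w[j] * v[j][c] for j in range(i + 1)) / z
                    for c in range(len(v[0]))])
    return out


# Tiny hypothetical inputs: 3 positions, 2 dimensions.
Q = [[0.1, -0.2], [0.3, 0.5], [-0.4, 0.2]]
K = [[0.2, 0.1], [-0.1, 0.4], [0.5, -0.3]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

if __name__ == "__main__":
    print(softmax_attention(Q, K, V)[-1])
    print(linear_attention(Q, K, V)[-1])
```

Both linear-attention functions return the same values, but the recurrent version touches each token once and carries only a fixed-size state forward, which is why its cost grows linearly with sequence length.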

Additionally, the models integrate a Mixture of Experts (MoE) framework with 32 expert networks, or “experts”; a learned router activates only a small subset of them for each token rather than running all 32.
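Per-token routing can be sketched in a few lines. The 32-expert count comes from the article; selecting the top 2 experts per token is an illustrative assumption here, and the "experts" are toy functions standing in for the feed-forward networks a real MoE layer uses.

```python
# Minimal sketch of per-token top-k expert routing in a Mixture-of-Experts layer.
# NUM_EXPERTS matches the article; TOP_K = 2 is an illustrative assumption.
# Experts are toy scalar functions standing in for feed-forward networks.
import math
import random

NUM_EXPERTS = 32
TOP_K = 2


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route(router_logits, k=TOP_K):
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    gates = softmax([router_logits[i] for i in top])
    return list(zip(top, gates))


def moe_forward(x, router_logits, experts, k=TOP_K):
    """Weighted sum of only the selected experts' outputs; the others never run."""
    return sum(gate * experts[i](x) for i, gate in route(router_logits, k))


if __name__ == "__main__":
    rng = random.Random(0)
    experts = [lambda x, s=i: s * x for i in range(NUM_EXPERTS)]  # toy experts
    logits = [rng.gauss(0, 1) for _ in range(NUM_EXPERTS)]  # router scores for one token
    print(route(logits))
    print(moe_forward(1.0, logits, experts))
```

For scale, the 45.9 billion active parameters out of 456 billion total reported earlier work out to about 10% of the model running for any given token.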

Because only a fraction of the parameters are used for each token, this design reduces compute per token while maintaining high performance. Supporting technologies such as Varlen Ring Attention, which minimizes wasted computation on variable-length sequences, and custom CUDA kernel optimizations further improve the models’ scalability and efficiency.
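The waste that variable-length ("varlen") handling avoids is easy to quantify. The sketch below compares padding every sequence to the longest one with packing sequences back to back and recording their boundaries, then splits the packed buffer into contiguous per-device shards as ring-style context parallelism does. It illustrates the general idea only, not MiniMax's Varlen Ring Attention, and the function names are hypothetical.

```python
# Why varlen handling saves compute: padding wastes slots, packing does not.
# General illustration only, NOT MiniMax's Varlen Ring Attention; the
# function names are hypothetical.
from itertools import accumulate


def padded_slots(lengths):
    """Token slots processed if every sequence is padded to the longest one."""
    return len(lengths) * max(lengths)


def pack(sequences):
    """Concatenate sequences and return (flat tokens, cumulative offsets).

    Offsets follow the common cu_seqlens convention: sequence i occupies
    flat[offsets[i]:offsets[i + 1]]. Attention kernels use these offsets
    to keep tokens from attending across sequence boundaries.
    """
    flat = [tok for seq in sequences for tok in seq]
    offsets = [0] + list(accumulate(len(s) for s in sequences))
    return flat, offsets


def shard(num_tokens, num_devices):
    """Split a packed buffer into contiguous, near-equal chunks, one per device.

    In ring-style attention each device holds one chunk and key/value blocks
    are passed around the ring of devices.
    """
    base, extra = divmod(num_tokens, num_devices)
    bounds, start = [], 0
    for d in range(num_devices):
        end = start + base + (1 if d < extra else 0)
        bounds.append((start, end))
        start = end
    return bounds


if __name__ == "__main__":
    seqs = [list(range(n)) for n in (7, 2, 5)]
    flat, offsets = pack(seqs)
    print(padded_slots([len(s) for s in seqs]), len(flat))  # 21 padded vs 14 packed
    print(offsets)  # [0, 7, 9, 14]
    print(shard(len(flat), 4))
```

With three sequences of lengths 7, 2, and 5, padding processes 21 slots while packing processes 14; the gap widens as sequence lengths become more uneven, which is common in long-context training data.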

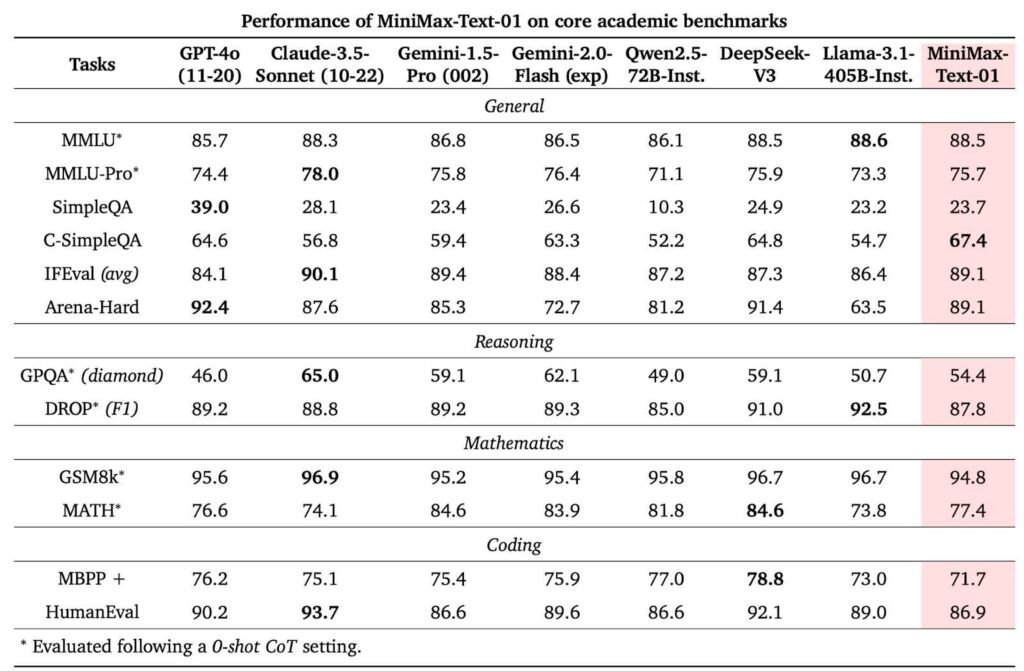

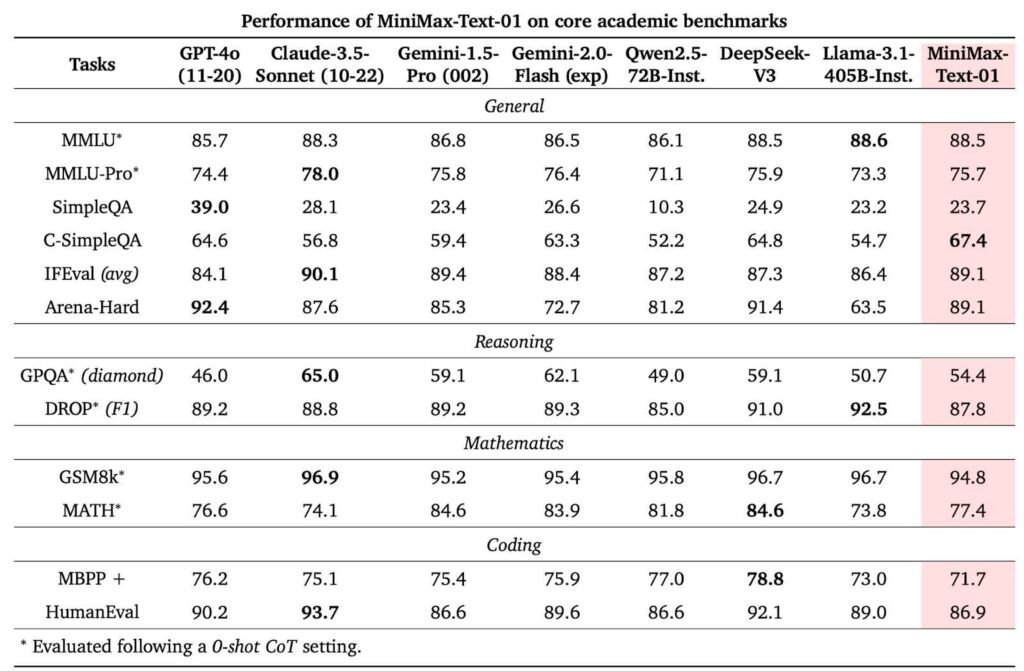

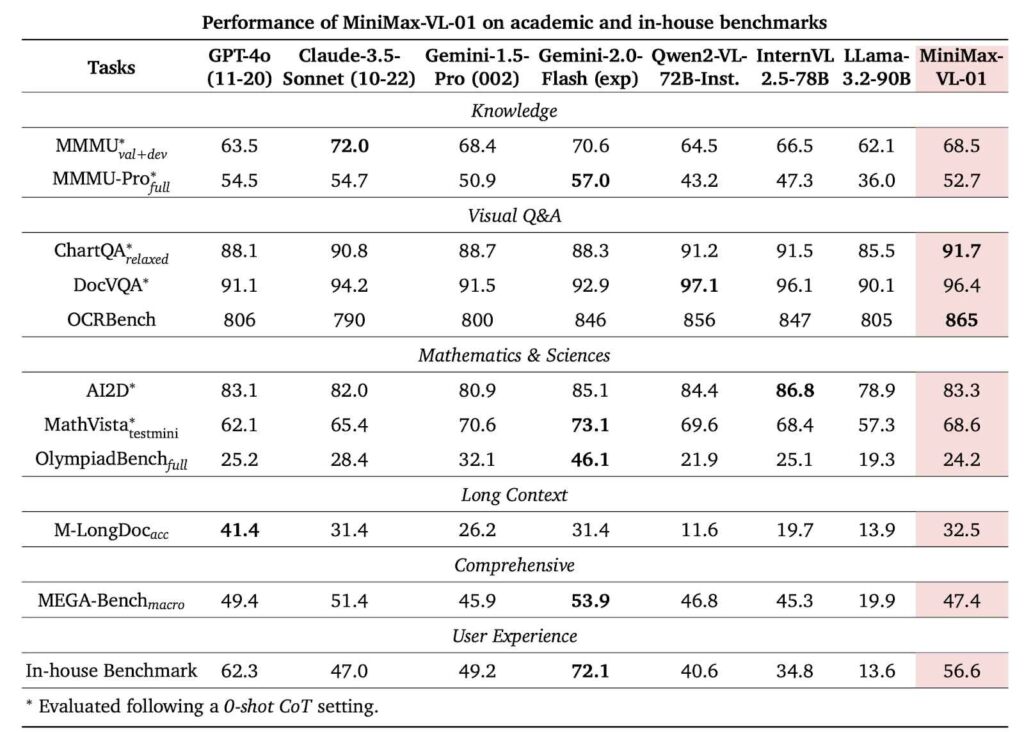

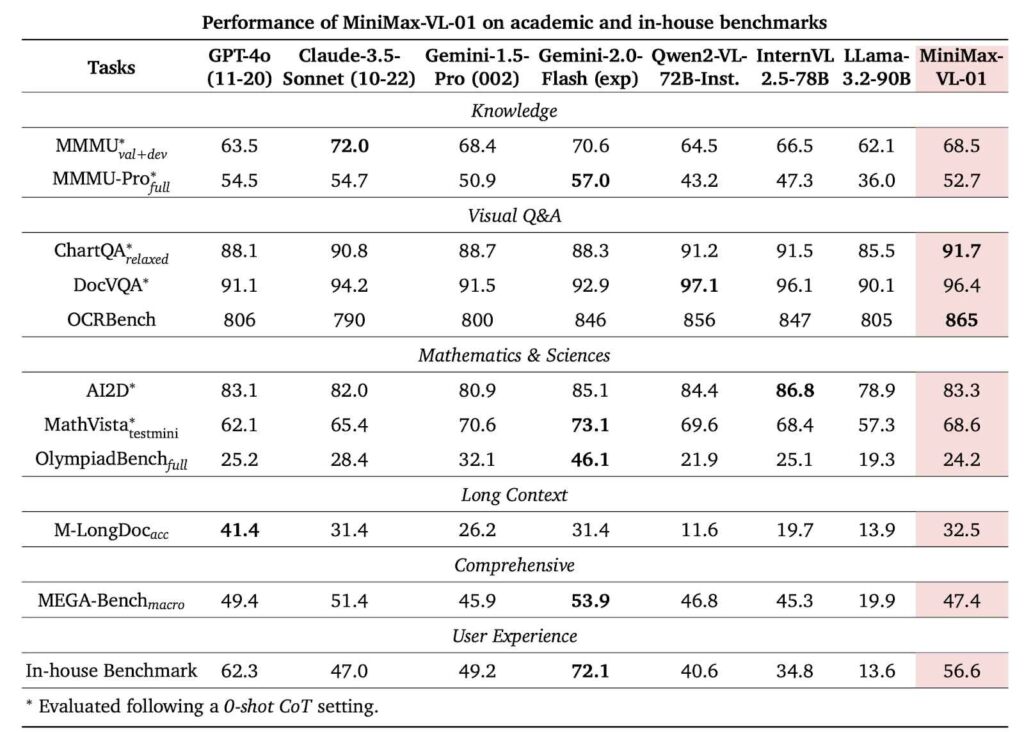

Benchmarks and Performance

Both MiniMax-01 models have demonstrated competitive results on industry-standard benchmarks. For example, MiniMax-Text-01 achieved 100% accuracy in the Needle-in-a-Haystack test with its extended context, matching Google’s Gemini 1.5 Pro.
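The structure of a needle-in-a-haystack test is simple: hide one fact at a chosen depth inside long filler text, ask the model to retrieve it, and score recall across depths. In the sketch below, `ask_model` is a hypothetical placeholder for a real model call, and the filler, needle, and question are made-up examples rather than the benchmark's official prompts.

```python
# Skeleton of a needle-in-a-haystack evaluation. `ask_model` is a hypothetical
# placeholder for a real model call; the filler, needle, and question are
# made-up examples, not the benchmark's official prompts.
from typing import Callable

FILLER = "The grass is green. The sky is blue. The sun is yellow. "
NEEDLE = "The secret passphrase is 'blue-harbor-42'. "
QUESTION = "What is the secret passphrase?"
ANSWER = "blue-harbor-42"


def build_haystack(num_fillers: int, depth: float) -> str:
    """Insert the needle at `depth` of the filler (0.0 = start, 1.0 = end)."""
    pos = round(depth * num_fillers)
    parts = [FILLER] * num_fillers
    parts.insert(pos, NEEDLE)
    return "".join(parts)


def evaluate(ask_model: Callable[[str, str], str], num_fillers: int, depths) -> float:
    """Fraction of depths at which the model's reply contains the hidden answer."""
    hits = 0
    for depth in depths:
        reply = ask_model(build_haystack(num_fillers, depth), QUESTION)
        hits += ANSWER in reply
    return hits / len(depths)


if __name__ == "__main__":
    # Stand-in "model" that just searches the text; a real test would call an LLM.
    def fake_model(context: str, question: str) -> str:
        marker = "passphrase is '"
        start = context.find(marker)
        return context[start + len(marker):].split("'")[0] if start >= 0 else ""

    print(evaluate(fake_model, num_fillers=1000, depths=[0.0, 0.25, 0.5, 0.75, 1.0]))
```

A real evaluation also sweeps the total context length, which is why a perfect score depends on the needle being found anywhere in the model's full window; it still tests only exact retrieval of a single planted fact.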

On multimodal tasks, MiniMax-VL-01 performs strongly in vision-language evaluations, scoring 96.4% on DocVQA and 91.7% on AI2D.

Despite these achievements, experts caution that benchmarks like Needle-in-a-Haystack may not fully reflect real-world applications. Studies suggest that while large context windows are valuable, their effectiveness depends on how they are utilized, especially in tasks requiring retrieval-augmented generation (RAG).
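For contrast with filling a huge context window, the retrieval step of a RAG pipeline selects only the most relevant chunks that fit a token budget. The sketch below uses word-overlap scoring and whitespace "tokens" as simplifying assumptions; production systems typically use embedding similarity and a real tokenizer, and the documents are made-up examples.

```python
# Toy retrieval step of a retrieval-augmented generation (RAG) pipeline.
# Word-overlap scoring and whitespace token counts are simplifying assumptions;
# real systems typically use embedding similarity and a real tokenizer.
import re


def words(text: str) -> set[str]:
    """Lowercased alphanumeric words in a piece of text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, chunks: list[str], token_budget: int) -> list[str]:
    """Greedily keep the highest-overlap chunks that still fit the budget."""
    q = words(query)
    ranked = sorted(chunks, key=lambda c: len(q & words(c)), reverse=True)
    selected, used = [], 0
    for chunk in ranked:
        cost = len(chunk.split())  # crude whitespace token count
        if len(q & words(chunk)) == 0 or used + cost > token_budget:
            continue  # skip irrelevant chunks and chunks that would overflow
        selected.append(chunk)
        used += cost
    return selected


# Made-up example documents.
DOCS = [
    "The lease term is five years with an option to renew.",
    "Parking is available on the north side of the building.",
    "Rent increases by three percent each year of the lease term.",
]

if __name__ == "__main__":
    print(retrieve("How long is the lease term?", DOCS, token_budget=25))
```

Here the two lease-related chunks (11 words each) fit the 25-token budget and the parking chunk is left out; how well such selection works compared with passing everything to a long-context model depends on the task.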

Accessibility and Competitive Pricing

MiniMax has made its models available on platforms like GitHub and Hugging Face, as well as through its proprietary Hailuo AI platform.

Developers can also access them via API at highly competitive rates: $0.20 per million input tokens and $1.10 per million output tokens. This pricing significantly undercuts OpenAI’s GPT-4o API, which charges $2.50 per million input tokens.
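Per-request cost follows directly from these rates. The sketch below uses the prices quoted above; the request sizes are hypothetical, and OpenAI's output rate is omitted because only its input price is quoted here.

```python
# Cost of a single API request at the per-million-token rates quoted above.
# Request sizes are hypothetical; OpenAI's output rate is not quoted in the
# article, so only its input rate is used.

MINIMAX_INPUT_PER_M = 0.20   # USD per 1M input tokens
MINIMAX_OUTPUT_PER_M = 1.10  # USD per 1M output tokens
OPENAI_INPUT_PER_M = 2.50    # USD per 1M input tokens, as quoted


def request_cost(input_tokens, output_tokens, input_per_m, output_per_m=0.0):
    """Dollar cost of one request given per-million-token prices."""
    return (input_tokens * input_per_m + output_tokens * output_per_m) / 1_000_000


if __name__ == "__main__":
    # Fill the full 4M-token window and receive a 2,000-token answer.
    full = request_cost(4_000_000, 2_000, MINIMAX_INPUT_PER_M, MINIMAX_OUTPUT_PER_M)
    print(f"Full-window MiniMax request: ${full:.4f}")
    # Input-only comparison for a 100K-token prompt.
    mm = request_cost(100_000, 0, MINIMAX_INPUT_PER_M)
    oa = request_cost(100_000, 0, OPENAI_INPUT_PER_M)
    print(f"100K-token prompt: MiniMax ${mm:.3f}, OpenAI ${oa:.3f}")
    print(f"Input price ratio: {OPENAI_INPUT_PER_M / MINIMAX_INPUT_PER_M:.1f}x")
```

Even a request that fills the entire 4-million-token window costs about $0.80, and at the quoted rates MiniMax's input tokens are 12.5 times cheaper.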

However, MiniMax’s licensing includes restrictions. For instance, platforms with over 100 million monthly active users must obtain special permissions, and the models cannot be used to improve rival AI systems. These conditions may limit adoption among larger enterprises.

Ethical Challenges and Regulatory Context

MiniMax faces ongoing scrutiny regarding its use of copyrighted materials in training datasets. Chinese streaming service iQiyi has filed a lawsuit accusing the company of unauthorized use of its recordings, while MiniMax’s Talkie app, which featured AI-generated avatars of public figures, was removed from Apple’s App Store in December 2024 for unspecified violations.

These issues arise as U.S. export controls on AI technologies tighten. New regulations, announced by the Biden administration, aim to restrict the sale of advanced AI chips and technologies to Chinese companies. These measures could complicate MiniMax’s access to the hardware required to train and scale its models.

MiniMax in a Competitive AI Landscape

Founded in 2021 by former employees of SenseTime, MiniMax has rapidly expanded its portfolio, from text and multimodal models to video generators. Its Hailuo AI platform’s Video-01 model gained attention for its ability to generate realistic videos, particularly excelling in areas like human hand movements—a challenging aspect of video generation.

While MiniMax has positioned itself as a cost-effective alternative to industry giants, its ability to navigate legal challenges and regulatory hurdles will be pivotal to its continued growth.