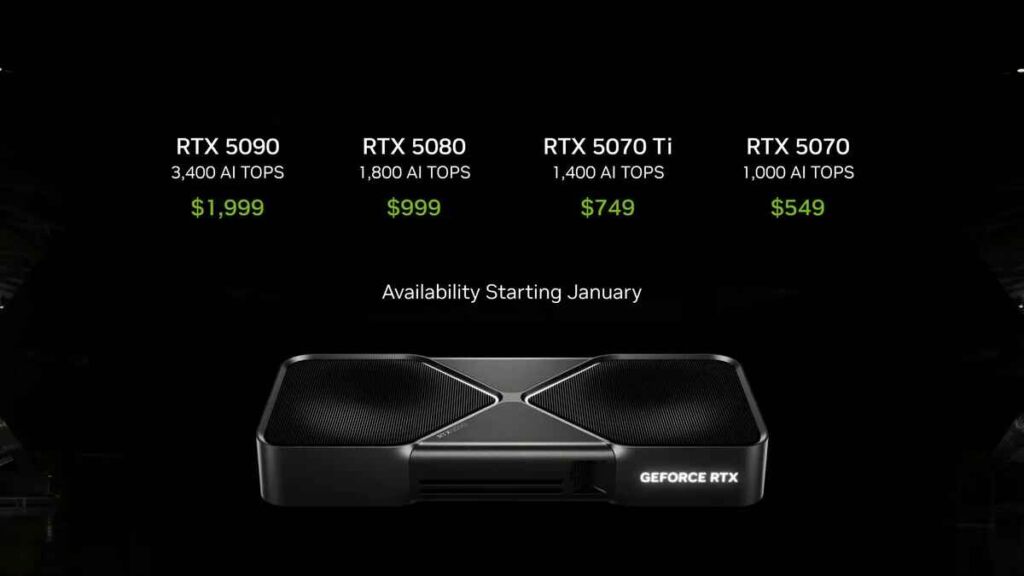

Nvidia has revealed its new RTX 50-series graphics cards at CES 2025, aiming to raise the bar in both gaming and AI-assisted performance. The lineup features four models: the flagship RTX 5090 at 1,999 US dollars, the RTX 5080 at 999 US dollars, the mid-tier RTX 5070 Ti at 749 US dollars, and the most accessible RTX 5070 at 549 US dollars.

The new GPUs are built on the Blackwell architecture. The RTX 5090 and RTX 5080 are scheduled to arrive on January 30, with the RTX 5070 Ti and RTX 5070 slated for release in February.

According to details shared during the keynote, Nvidia intends to offer a faster, more efficient alternative to its RTX 40 series by enhancing ray tracing, AI-focused features such as DLSS 4, and memory capabilities through GDDR7.

The Blackwell Architecture: Core Enhancements

The RTX 50 series marks the official transition to Blackwell, a design that emphasizes more robust AI performance and real-time ray-tracing potential. Based on information shown during the keynote, each GPU in this generation features revised CUDA core configurations, improved power distribution, and specialized hardware for tasks that require machine learning.

The company also replaced GDDR6X with GDDR7 in this lineup, giving the RTX 5090 a memory bandwidth of 1.8 terabytes per second. This high throughput is expected to expedite texture streaming and data transfers, especially for resource-intensive games and applications.
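As a rough check on the bandwidth figure, peak memory bandwidth is the bus width multiplied by the per-pin data rate. The sketch below assumes a 512-bit bus at 28 Gbps for the RTX 5090 and a 256-bit bus at 30 Gbps for the RTX 5080; neither configuration is stated in this article, so treat both as assumptions rather than confirmed specifications.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: every pin carries data_rate_gbps gigabits per second."""
    return bus_width_bits * data_rate_gbps / 8  # divide by 8 to convert bits to bytes


# Assumed memory configurations (not given in the article).
ASSUMED_CONFIGS = {
    "RTX 5090": (512, 28.0),
    "RTX 5080": (256, 30.0),
}

for card, (bus_bits, rate_gbps) in ASSUMED_CONFIGS.items():
    bandwidth = memory_bandwidth_gb_s(bus_bits, rate_gbps)
    print(f"{card}: {bandwidth:.0f} GB/s (~{bandwidth / 1000:.2f} TB/s)")
```

Under these assumptions the results come out to 1,792 GB/s and 960 GB/s, which lines up with the roughly 1.8 TB/s quoted for the flagship.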

While comparisons to the RTX 40 series are ongoing, Nvidia’s stated focus is on delivering frames more quickly and handling AI workloads without bottlenecks in the rendering pipeline.

| GPU Model | Price | TGP (W) | CUDA Cores | Memory | Memory Bandwidth | Boost Clock | RT Cores | Tensor Cores | Recommended PSU | Launch |
|---|---|---|---|---|---|---|---|---|---|---|
| RTX 5090 | $1,999 | 575 | 21,760 | 32GB GDDR7 | 1.8TB/s | 2.7GHz | 128 | 512 | 1,000W | Jan 30 |
| RTX 5080 | $999 | 360 | 10,752 | 16GB GDDR7 | 960GB/s | 2.5GHz | 96 | 384 | 850W | Jan 30 |
| RTX 5070 Ti | $749 | 320 | 8,192 | 12GB GDDR7 | 800GB/s | 2.3GHz | 64 | 256 | 750W | Feb |
| RTX 5070 | $549 | 300 | 7,680 | 12GB GDDR7 | 720GB/s | 2.1GHz | 56 | 224 | 700W | Feb |

Performance Claims and Power Draw

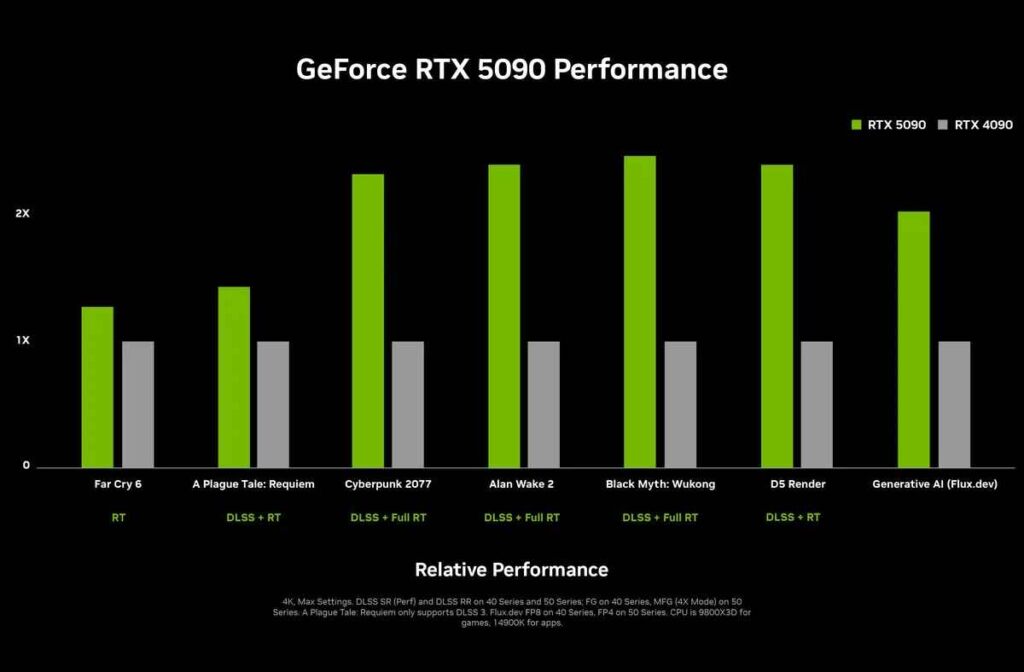

During the presentation, Nvidia showcased “Cyberpunk 2077” running on the RTX 5090 with DLSS 4 at a brisk 238 frames per second, while the RTX 4090 with DLSS 3.5 reportedly hit 106 frames per second under identical ray-traced conditions.
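To put the two demo numbers side by side, the short sketch below converts them into a speedup ratio and per-frame times. It uses only the frame rates quoted above; the function name is illustrative.

```python
def frame_time_ms(fps: float) -> float:
    """Average time between displayed frames, in milliseconds."""
    return 1000.0 / fps


# Frame rates quoted from Nvidia's keynote demo.
RTX_5090_FPS = 238.0  # DLSS 4
RTX_4090_FPS = 106.0  # DLSS 3.5

speedup = RTX_5090_FPS / RTX_4090_FPS
print(f"Speedup: {speedup:.2f}x")
print(f"RTX 5090 frame time: {frame_time_ms(RTX_5090_FPS):.1f} ms")
print(f"RTX 4090 frame time: {frame_time_ms(RTX_4090_FPS):.1f} ms")
```

The ratio works out to about 2.25x. Because the two cards ran different DLSS versions, this compares the full software and hardware stacks rather than the raw silicon alone.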

The RTX 5090 boasts 32 gigabytes of GDDR7 memory, 21,760 CUDA cores, and a total graphics power requirement of 575 watts, which means users may need a 1,000-watt power supply for uninterrupted operation.

Nvidia suggests that architectural refinements could keep the card's real-world power consumption below its rated maximum, although those claims await independent testing once the GPUs become available.

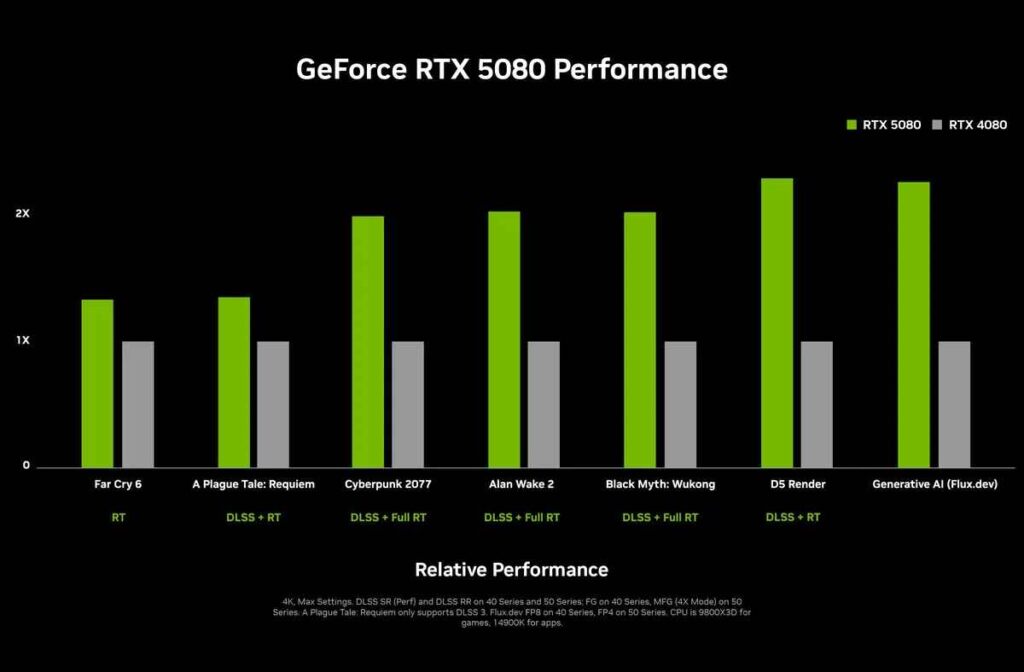

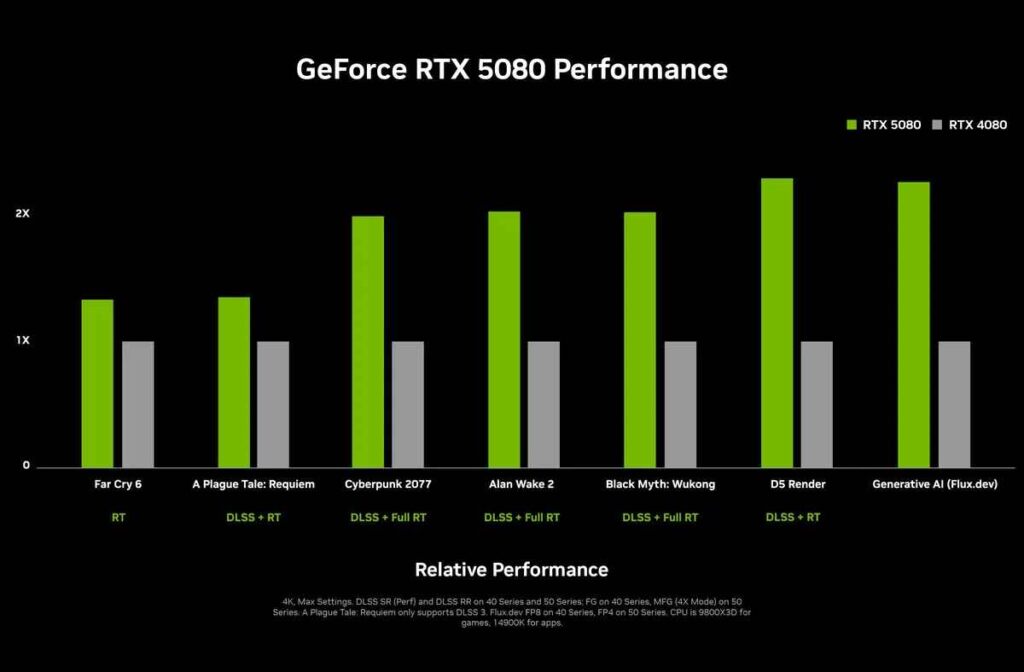

The RTX 5080 includes 16 gigabytes of GDDR7, with a total graphics power rated at 360 watts and a recommended 850-watt power supply. Nvidia says this card delivers up to twice the performance of the RTX 4080 in both traditional rasterization and ray tracing tasks, aided by a memory bandwidth of 960 gigabytes per second and 10,752 CUDA cores.

Referencing its own measurements, the company stated in official notes that “the RTX 5080 delivers faster frame rates than the RTX 4080 across key titles and benchmarks.”

DLSS 4 and the Promise of AI-Based Rendering

One of the central features of the RTX 50 line is DLSS 4, the newest evolution of Nvidia’s Deep Learning Super Sampling technology. Earlier versions primarily upscaled lower-resolution images and reconstructed details, with DLSS 3 adding a single AI-generated frame between rendered ones. DLSS 4 is designed to predict and generate multiple additional frames by leveraging more AI resources on the GPU.

This approach can substantially boost average frames per second and perceived smoothness, particularly at high resolutions and with ray tracing turned on, although generated frames do not by themselves make the game respond to input any faster.

Though hardware specifications are not the sole factor in performance (driver support and application optimizations also play key roles), Nvidia’s concept for DLSS 4 points toward a future where AI routines handle much of the heavy lifting in modern graphics engines.

Nvidia also highlighted Neural Texture Compression (NTC) as an example of how AI may reshape rendering workflows. NTC applies a neural network to texture data in real time, shrinking file sizes and memory footprints while attempting to preserve visual detail.

With the RTX 5070 using 12 gigabytes of GDDR7, a feature like NTC could allow that lower-priced card to run demanding titles more smoothly than similar mid-range GPUs from previous generations.
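To illustrate why texture footprint matters on a 12-gigabyte card, the sketch below compares the size of a single 4096×4096 texture at different storage densities. The RGBA8 and BC7 densities are standard; the 2-bits-per-texel "neural" entry is a purely hypothetical placeholder and not a figure Nvidia has published for NTC. Mipmaps are ignored for simplicity.

```python
MIB = 1024 * 1024


def texture_mib(width: int, height: int, bits_per_texel: float) -> float:
    """Size of one texture in MiB, ignoring mipmaps."""
    return width * height * bits_per_texel / 8 / MIB


# Bits per texel for each storage format.
FORMATS = {
    "RGBA8 (uncompressed)": 32.0,
    "BC7 (block compressed)": 8.0,
    "neural (hypothetical ratio)": 2.0,  # illustrative assumption only
}

for name, bits in FORMATS.items():
    size = texture_mib(4096, 4096, bits)
    per_gib = 1024 / size  # how many such textures fit in 1 GiB
    print(f"{name}: {size:.0f} MiB per texture, {per_gib:.0f} per GiB")
```

Whatever ratio NTC achieves in practice, the arithmetic shows how each halving of bits per texel doubles the number of textures that fit in a fixed memory budget.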

However, widespread adoption of NTC depends on compatibility and support from studios and developers, many of whom still tailor their releases to accommodate AMD hardware in major gaming consoles.

Form Factor and Founders Edition Design

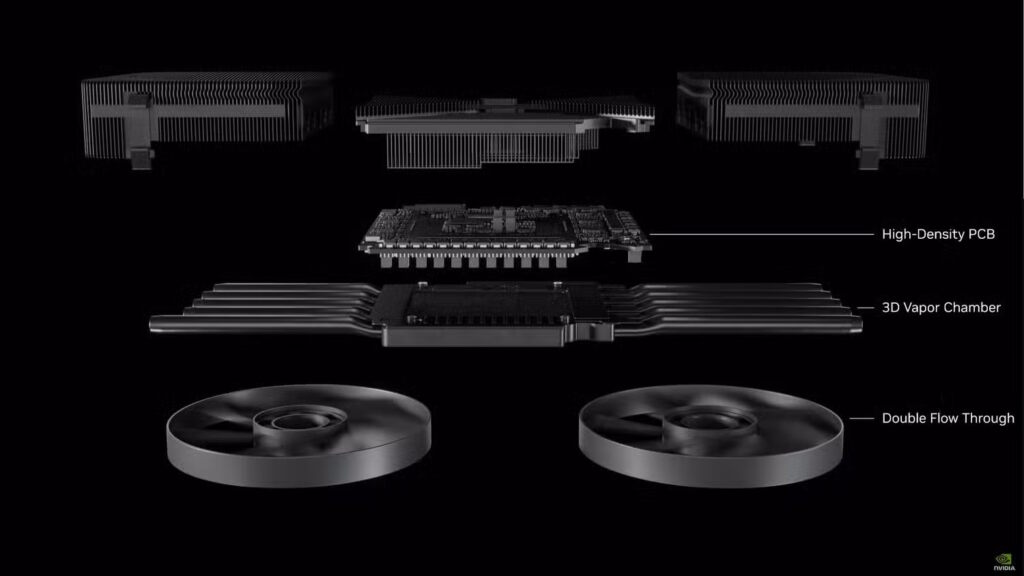

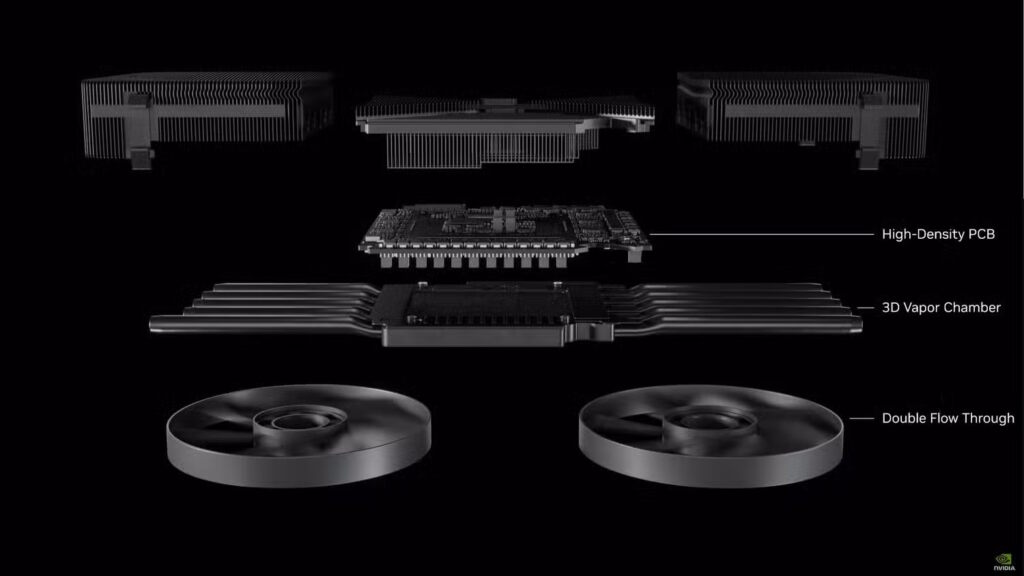

Beyond raw specifications, Nvidia is emphasizing a new two-slot form factor for the RTX 5090 Founders Edition, a departure from the larger triple-slot cooler found in the RTX 4090. The company suggests that smaller cases and tighter builds can now house top-tier graphics, catering to PC enthusiasts who want maximum power without sacrificing space.

Cooling solutions rely on a dual-fan, double flow-through system with an integrated vapor chamber. According to official materials from the CES 2025 event, the firm states that “All of the RTX 50-series cards are PCIe Gen 5 and include DisplayPort 2.1b connectors to drive displays up to 8K and 165Hz.”

This connectivity upgrade gives gamers and content creators the option to push high pixel counts and refresh rates simultaneously, though the hardware demands on the GPU remain substantial.
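A back-of-the-envelope calculation shows how demanding 8K at 165Hz is on the display link. The sketch assumes 10-bit RGB color (30 bits per pixel), ignores blanking intervals, and assumes a four-lane link at 20 Gbit/s per lane with 128b/132b encoding; none of these parameters come from the article, so the result is only an estimate.

```python
def video_data_rate_gbps(width: int, height: int, refresh_hz: int, bits_per_pixel: int) -> float:
    """Uncompressed pixel data rate in Gbit/s, ignoring blanking intervals."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def link_payload_gbps(lanes: int, lane_rate_gbps: float) -> float:
    """Approximate usable link rate after 128b/132b channel encoding."""
    return lanes * lane_rate_gbps * 128 / 132


# Assumed parameters (not stated in the article).
required = video_data_rate_gbps(7680, 4320, 165, 30)
available = link_payload_gbps(lanes=4, lane_rate_gbps=20.0)

print(f"Required:  {required:.1f} Gbit/s uncompressed")
print(f"Available: {available:.1f} Gbit/s")
print(f"Compression needed: about {required / available:.1f}:1")
```

Under these assumptions the uncompressed stream needs roughly twice what the link can carry, which suggests that such modes would depend on stream compression such as DSC rather than raw link bandwidth alone.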

The cost of these power and design advantages may be heightened complexity in power delivery, as evidenced by the recommended 1,000-watt PSU for the flagship model. While the 5090 draws up to 575 watts at peak, the 5070 Ti and 5070 occupy lower power brackets at 320 and 300 watts, respectively.

Enthusiasts considering an upgrade must weigh the total system draw and evaluate their cooling setups to ensure stable performance when running GPU-intensive tasks.
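A simple power budget can make that evaluation concrete. In the sketch below, the 575-watt GPU figure and the 1,000-watt PSU recommendation come from Nvidia's stated specifications, while the CPU and rest-of-system values are hypothetical placeholders that readers should replace with their own components' ratings.

```python
def system_draw_w(gpu_w: float, cpu_w: float, other_w: float) -> float:
    """Estimated peak system draw in watts."""
    return gpu_w + cpu_w + other_w


GPU_W = 575.0    # RTX 5090 total graphics power (from the article)
PSU_W = 1000.0   # recommended PSU rating (from the article)
CPU_W = 150.0    # hypothetical CPU peak draw
OTHER_W = 75.0   # hypothetical: motherboard, drives, fans, memory

total = system_draw_w(GPU_W, CPU_W, OTHER_W)
headroom = PSU_W - total
load = total / PSU_W

print(f"Estimated peak draw: {total:.0f} W")
print(f"Headroom: {headroom:.0f} W ({load:.0%} PSU load)")
```

With these placeholder values the system would load the power supply to about 80 percent at peak. A higher-wattage CPU narrows that margin quickly, which is why the flagship's PSU recommendation is as high as it is.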

The convenience of a two-slot card does not eliminate thermal and acoustic concerns but indicates that Nvidia is working to keep external dimensions and overall heat dissipation requirements balanced.

Laptop GPUs and Extended Market Impact

Nvidia has also introduced mobile versions of the RTX 50 series, aiming to bring desktop-level performance to laptops. These variants are scheduled to launch in March 2025, targeting users who require robust computing on the go, such as content creators, data analysts, and professionals dealing with intensive graphical workloads.

The company claims the laptop-grade RTX 5090 can achieve up to 1,850 AI TOPS, approaching the desktop RTX 5080’s capabilities, though power and thermal constraints in portable devices remain significant factors. Prices for the top-tier notebook options will start at 2,899 US dollars, which positions them firmly in the realm of high-end mobile workstations and gaming laptops.

Nvidia suggests that the improvements in Blackwell’s efficiency, combined with refined thermal management, help these GPUs handle advanced rendering tasks in smaller form factors. Whether these claims hold true under heavy loads, such as prolonged 3D rendering or large-scale AI inference, will depend on how well manufacturers implement Nvidia’s reference designs and ensure adequate cooling.

AI Application Beyond Gaming

While the spotlight at CES 2025 fell on the RTX 5090 and its ability to accelerate popular AAA titles, Nvidia also emphasized the potential for AI-based workflows across multiple industries. Enhanced tensor cores in the Blackwell architecture boost deep learning tasks, accelerating processes such as natural language modeling, image classification, and complex simulations.

This newfound power could benefit sectors like robotics, medical imaging, and cinematic production, where large datasets and real-time inference are crucial to overall productivity.

In official notes, the company wrote, “We designed Blackwell for superior performance across a range of AI and ray-tracing workloads, unlocking new possibilities for creators and professionals.”

Critics and analysts outside Nvidia point out that while these capabilities can drive forward AI research and commercial applications, they require corresponding software support from developers and researchers to see widespread implementation. By merging ray tracing, AI-based texture compression, and robust hardware for inference, the RTX 50 series aspires to extend beyond gaming benchmarks and serve as a platform for advanced computing tasks.

Comparisons and Market Outlook

As in previous hardware generations, Nvidia’s new offerings will inevitably be compared to both its own last-generation products and upcoming GPUs from competitors. Some gamers may question whether the jump from an RTX 4090 to an RTX 5090 justifies its higher power draw and steeper price.

Conversely, others will consider the substantial performance gains, especially if they are invested in bleeding-edge gaming at 4K or 8K resolutions with ray tracing enabled. Meanwhile, the 999-dollar RTX 5080 appears to inherit the price point of earlier high-end Nvidia cards, possibly positioning it as an upper-tier favorite for enthusiasts who desire high frame rates without fully stretching to the 5090’s cost.

However, Nvidia’s positioning of the 5070 Ti and the 5070 might stir interest among users who want to access many of the same Blackwell and DLSS 4 benefits at lower entry costs. Even so, questions remain about supply levels, potential third-party card variants, and driver support once these GPUs hit the market in larger numbers.

Nvidia acknowledged that exact performance outcomes will depend on software optimizations and continued adoption of AI-based rendering. Commenting on the refined tensor cores and new rendering pipeline, a company spokesperson stated, “DLSS 4 and Neural Texture Compression reflect our commitment to moving beyond traditional approaches to pixel rendering, allowing the GPU to handle tasks that were previously impossible in real time.”

Industry watchers anticipate that as these features mature, consumers might see updates to game engines that tap into the full suite of RTX 50 hardware advantages. The timing of AMD’s upcoming announcements could also shape how quickly Nvidia’s new cards gain momentum.

Rivalry between the two major GPU suppliers historically influences developer focus, driver readiness, and performance benchmarks, making the next few months a focal point for the high-end graphics market.

Consumer Considerations and Future Prospects

For individuals and businesses contemplating a GPU upgrade, the RTX 50 series offers a new level of potential in both gaming and AI-driven workloads. However, the ecosystem’s success hinges on more than raw computing power.

Developers must integrate features like DLSS 4 and Neural Texture Compression into their titles, and creative professionals may need to adapt existing pipelines to harness AI-based texture handling or accelerated rendering. User readiness to handle power and cooling challenges is another factor.

The high wattage of the RTX 5090 especially suggests that not every PC case or power supply can accommodate it without modifications. The slimmed-down designs may allow these cards to slot into a broader range of systems, but additional steps such as ensuring robust airflow and proper PSU ratings remain imperative.

Although gaming headlines currently dominate the public discussion, experts note that Nvidia’s continued attention to enterprise applications solidifies its presence in data centers and professional markets. This helps position the company’s hardware as a widely supported standard for tasks that blend machine learning with graphics acceleration.

Some believe that with enough support from developers and the open-source community, the AI-driven techniques that begin in top-tier gaming GPUs could filter down to mainstream PCs and even mobile devices over time. The path, however, involves cultivating collaborations across hardware, software, and content creation, which can be a time-consuming and resource-intensive process.

Global Competition and Potential Challenges

Nvidia’s RTX 50 series arrives amid an environment where other GPU vendors are set to introduce their own products in the high-performance sector. Some of Nvidia’s biggest competition may stem from AMD’s upcoming RDNA releases and any discrete graphics efforts by Intel.

Observers predict that competitive pricing, driver improvements, and strategic partnerships with game developers will play a major role in determining the RTX 50 series’ market reception. While Nvidia’s deep ties to AI research, data center hardware, and cloud computing providers give it an edge, the company must still convince developers to implement technologies like DLSS 4 and Neural Texture Compression.

Cautious buyers often wait for performance reviews, driver updates, and proven game support before committing to a premium GPU, especially in a price range that often exceeds the cost of an entire mid-range gaming PC.

Nvidia has also emphasized that its collaborations with publishers, engine creators, and major studios underscore the shift toward AI-assisted workflows. These alliances can speed up how quickly developers integrate features that tap into RTX 50 hardware.

At CES 2025, various tech demonstrations hinted at the possibility of new lighting models, automated animation pipelines, and advanced physics simulations that rely heavily on AI computing. Whether such possibilities see rapid adoption or remain niche additions depends on marketing, developer enthusiasm, and practical performance gains in real-world scenarios.

Enterprise and Professional Use Cases

Outside consumer gaming, Nvidia has made strides in specialized markets such as 3D animation, scientific visualization, and cloud data centers. The Blackwell architecture’s expanded AI TOPS may accelerate deep learning tasks in research laboratories and enterprise environments.

Tasks like real-time protein folding simulations, high-resolution medical imagery, or predictive financial modeling can benefit from the GPU’s increased parallelism and memory bandwidth. Some of these areas already employ professional-grade Nvidia cards with specialized drivers, like the Quadro or Tesla series, but the RTX 50 architecture could trickle down into professional segments more quickly if developers seek to unify codebases for gaming, AI, and data analytics.

For smaller studios or freelancers, the option of a consumer-level GPU providing near workstation performance might reduce hardware costs or streamline production workflows.

Nonetheless, the core challenge remains adaptation of software pipelines to leverage AI-accelerated features. For instance, advanced ray tracing or data-driven rendering can place new demands on artists, coders, and project managers.

They must be prepared to rethink how content is generated, how textures are stored, and how various libraries interface with GPU drivers. Nvidia’s track record of releasing SDKs and contributing to open standards such as Vulkan and OpenGL may help ensure broader support.

Critics, however, remain watchful of how proprietary components or licensing terms might limit adoption of these cutting-edge features, particularly among open-source communities.

Potential Software Ecosystem Shifts

The integration of AI in core gaming and professional workflows paves the way for development studios to adopt or refine advanced techniques. Some studios are exploring generative content creation, in which an AI algorithm assists with designing levels, characters, or story elements.

Others see the potential for real-time content adaptation, personalizing visuals or difficulty settings based on player input. These endeavors typically require GPUs that can handle large inference or training tasks on the fly, an area where the Blackwell architecture may prove advantageous.

As evidenced by Nvidia’s demonstration of Cyberpunk 2077 surpassing 200 frames per second under ray tracing, real-time machine learning can lift performance ceilings that previously constrained game designers.

From a consumer standpoint, the increased involvement of AI in rendering may raise questions about the authenticity of visuals. Frame generation, for example, can add input lag or introduce artifacts if not properly optimized. Nvidia claims it has improved these algorithms to minimize disruptions, but user feedback and critical reviews will likely shape how frequently players turn on these new features.
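The input-lag concern follows from simple arithmetic: when some displayed frames are generated rather than rendered, the game only samples input on the rendered ones. The sketch below assumes, hypothetically, that three frames are generated for every rendered frame in the 238 fps demo; the article does not state which frame-generation mode was used, and real pipelines add further latency effects that this simplified model ignores.

```python
def rendered_fps(displayed_fps: float, generated_per_rendered: int) -> float:
    """Frames per second actually rendered by the game engine."""
    return displayed_fps / (1 + generated_per_rendered)


def interval_ms(fps: float) -> float:
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


DISPLAYED_FPS = 238.0        # demo figure quoted earlier in the article
GENERATED_PER_RENDERED = 3   # hypothetical frame-generation mode

engine_fps = rendered_fps(DISPLAYED_FPS, GENERATED_PER_RENDERED)
print(f"Displayed: {DISPLAYED_FPS:.0f} fps ({interval_ms(DISPLAYED_FPS):.1f} ms per frame)")
print(f"Rendered:  {engine_fps:.1f} fps ({interval_ms(engine_fps):.1f} ms per frame)")
```

Under that assumption the screen updates every 4.2 ms, but the engine produces a new, input-aware frame only every 16.8 ms, so responsiveness tracks the lower rendered rate rather than the headline number.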

If the technology delivers stable results with minimal drawbacks, it could be viewed as a major development in tackling the rendering challenges of 4K and 8K gameplay.

Upcoming Benchmarks

While Nvidia’s official demonstrations at CES 2025 paint the RTX 50 series as a milestone in consumer GPUs, independent reviewers will soon scrutinize real-world performance across a variety of use cases. Benchmarking tools, including synthetic tests and in-game benchmarks, will help assess whether the RTX 5090 truly achieves a twofold performance increase over the RTX 4090.

Price-sensitive gamers might also look carefully at metrics for the RTX 5070, as claims of “RTX 4090-like performance” for under 600 US dollars could reshape mid-range expectations. Another factor is driver maturity: new GPU architectures can encounter unexpected bugs or feature limitations at launch, so Nvidia’s support schedule will be critical to ensuring smooth adoption.

Hardware enthusiasts, streamers, and professional content creators are already discussing the possibility of integrating the Blackwell-based cards into multi-GPU workstations for faster rendering or machine learning tasks.

Though Nvidia has steadily moved away from official SLI support for gaming, creative applications may still see gains by using multiple GPUs in parallel via frameworks like CUDA or proprietary render managers.

However, power draw, heat dissipation, and rising component costs can make such configurations expensive to maintain. As a result, the conversation extends beyond raw performance to include questions about potential trade-offs in power bills, noise levels, and PC footprint.

The arrival of the RTX 50 series illustrates the growing intersection of gaming technology and AI-based innovation. By equipping consumer GPUs with robust hardware features like tensor cores tailored for real-time machine learning, Nvidia is nudging the industry toward a future where algorithmic optimizations guide how games, animations, and simulations are rendered. The transition to GDDR7 aligns with a need for higher bandwidth, reflecting the demands of increasingly complex graphics workloads and the appetite for larger-scale data in AI routines.

The company’s decision to offer a two-slot form factor on even its top-tier RTX 5090 card also suggests an ongoing effort to streamline the physical presence of high-end GPUs without compromising on raw performance. It remains to be seen if these boards will fulfill the heightened expectations Nvidia has set, but many in the PC hardware community are eagerly awaiting independent tests to confirm or challenge the official statements.

Even with the greater power demands of the RTX 5090, other models like the RTX 5080, 5070 Ti, and 5070 could capture a wide spectrum of users.