Agentic AI workflows often involve the execution of large language model (LLM)-generated code to perform tasks like creating data visualizations. However, this code should be sanitized and executed in a safe environment to mitigate risks from prompt injection and errors in the returned code. Sanitizing Python with regular expressions and restricted runtimes is insufficient, and hypervisor isolation with virtual machines is development and resource intensive.

This post illustrates how you can gain the benefits of browser sandboxing for operating system and user isolation using WebAssembly (Wasm), a binary instruction format for a stack-based virtual machine. This increases the security of your application without significant overhead.

One of the recent evolutions in LLM application development is exposing tools—functions, applications, or APIs that the LLM can call and use the response from. For example, if the application needs to know the weather in a specific location, it could call a weather API and use the results to craft an appropriate response.

Python code execution is a powerful tool for extending LLM applications. LLMs are adept at writing Python code, and running that code lets them carry out more advanced workflows such as data visualization. Extended with Python function calling, a text-based LLM gains the ability to generate image plots for users. However, it’s difficult to dynamically analyze the LLM-generated Python to ensure it meets the intended specification and doesn’t introduce broader application security risks. If you find yourself executing LLM-generated Python to extend your agentic application, this post is for you.

Structuring the agent workflow

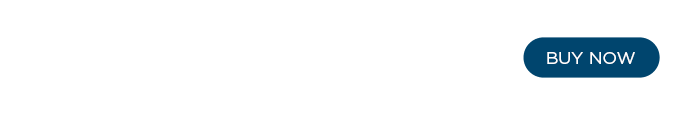

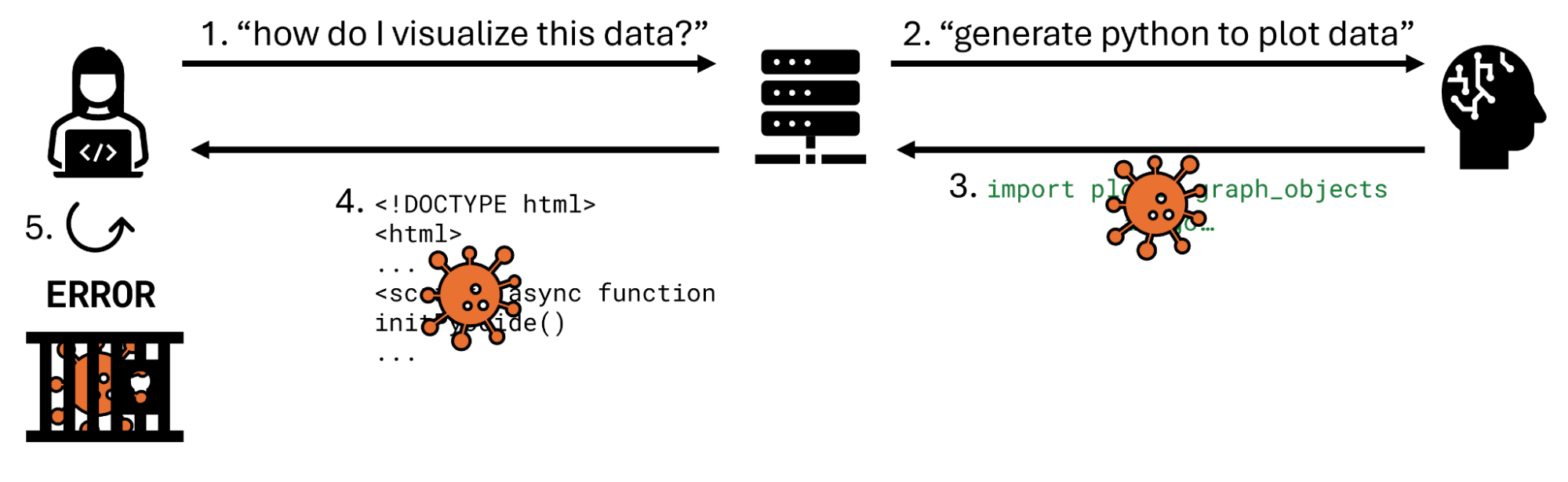

In the simplest agentic workflow, your LLM may generate Python that you eventually pass to eval. For example, a prompt equivalent to "generate the Python code to make a bar chart in Plotly" would return:

    import plotly.graph_objects as go

    fig = go.Figure(data=go.Bar(x=["A", "B", "C"], y=[10, 20, 15]))
    fig.update_layout(title="Bar Chart Example")

Your agent would pass this into eval to generate the plot as shown in Figure 1.

In Step 1, the user provides their prompt to the application. In Step 2, the application provides any additional prompt context and augmentation to the LLM. In Step 3, the LLM returns the code to be executed by the tool-calling agent. In Step 4, that code is executed on the host operating system to generate the plot, which is returned to the user in Step 5.

Notice that the eval is performed on the server in Step 4, a significant security risk. It is prudent to implement controls to mitigate that risk to the server, application, and users. The easiest controls to implement are at the application layer, and filtering and sanitization are often done with regular expressions and restricted Python runtimes. However, these application-layer mitigations are rarely sufficient and can usually be bypassed.

For instance, a regular expression may attempt to exclude calls to os but miss subprocess or not identify that there are ways to eventually reach those functions from various dependency internals. A more robust solution might be to only execute the LLM-generated Python in a micro virtual machine like Firecracker, but this is resource and engineering intensive.
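The regular-expression gap described above can be illustrated with a short sketch. The blocklist pattern and the three payload strings are hypothetical examples chosen for illustration. The payloads are only scanned as text and are never executed.

```python
import re

# Hypothetical application-layer blocklist that tries to stop use of the os module.
BLOCKLIST = re.compile(r"\bimport\s+os\b|\bos\.(system|popen)\b")


def passes_filter(code: str) -> bool:
    """Return True when the naive blocklist finds nothing to reject."""
    return BLOCKLIST.search(code) is None


# Caught: the pattern matches the literal "import os".
direct = "import os\nos.system('id')"

# Missed: subprocess reaches the same capability under a different name.
via_subprocess = "import subprocess\nsubprocess.run(['id'])"

# Missed: the module and attribute names are assembled at runtime.
via_dynamic_import = "m = __import__('o' + 's')\ngetattr(m, 'sys' + 'tem')('id')"

results = {
    "direct": passes_filter(direct),
    "via_subprocess": passes_filter(via_subprocess),
    "via_dynamic_import": passes_filter(via_dynamic_import),
}
print(results)
```

Only the direct payload is rejected. Extending the pattern to cover each new bypass turns into an open-ended game of catch-up, which is why the filter alone is not a dependable control.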

As an alternative, consider shifting the execution into the user’s browser. Browsers use sandboxes to isolate web page code and scripts from the user’s infrastructure. The browser sandbox is what prevents webpages from accessing local filesystems or viewing webcams without authorization, for example.

Using Python in the browser

Pyodide is a port of CPython to Wasm that runs inside existing JavaScript virtual machines. This means that we can execute Python client-side and inherit the security benefits of the browser sandbox.

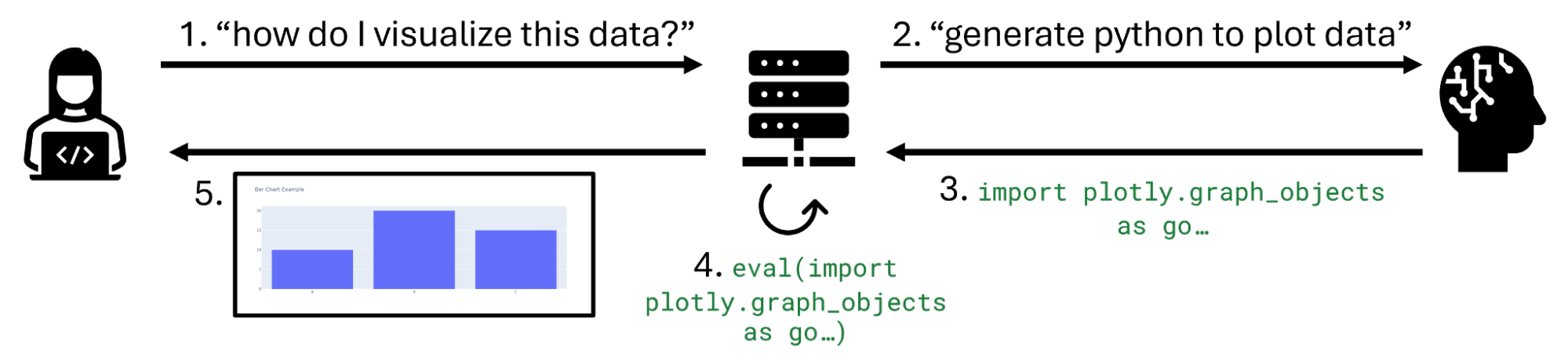

By designing the application to serve HTML with the Pyodide runtime and the LLM-generated code as shown in Figure 2, application developers can shift the execution into the users’ browsers, gaining the security of sandboxing and preventing any cross-user contamination.

The main difference in this architecture is that instead of executing the tool on the application server, the application instead returns HTML to the user in Step 4. When the user views that HTML in their browser, Pyodide executes the LLM-provided Python in the sandbox and renders the visualization.
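As a rough sketch of the modified Step 4, the server can embed the LLM-generated code in the HTML response as a string literal instead of executing it. Everything here is an assumption made for illustration: the `render_page` helper, the template markup, and the placeholder loader URL are not from the original application, and the page is assumed to define a `window.runCode` function elsewhere in its own JavaScript.

```python
import json

# Placeholder only; point this at wherever your application serves the Pyodide loader.
PYODIDE_JS_URL = "https://example.invalid/pyodide/pyodide.js"

HTML_TEMPLATE = """<!DOCTYPE html>
<html>
  <head><script src="{pyodide_url}"></script></head>
  <body>
    <div id="plot"></div>
    <script>
      // runCode is assumed to be defined by the page's own JavaScript.
      window.addEventListener("load", () => window.runCode({code_literal}));
    </script>
  </body>
</html>"""


def render_page(llm_code: str) -> str:
    """Embed the LLM-generated Python as a JavaScript string; never run it server-side."""
    # json.dumps yields a valid JavaScript string literal. Escaping "<" keeps a
    # stray "</script>" in the generated code from closing the script element early.
    code_literal = json.dumps(llm_code).replace("<", "\\u003c")
    return HTML_TEMPLATE.format(pyodide_url=PYODIDE_JS_URL, code_literal=code_literal)


page = render_page('fig = None  # "</script>" in a comment stays inert')
print(page)
```

The server treats the generated code purely as data. The only place it is interpreted as Python is inside Pyodide in the requesting user's browser.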

This modification should require minimal change in prompting strategies, as the LLM-generated code can usually be templated into a static HTML document. For example, the following function takes the LLM-provided code and wraps it in a Python try block before dispatching it for execution with executeCode.

    window.runCode = async (LLMCode) => {
        try {
            console.log('Starting code execution process...');
            const pyodide = await initPyodide();

            // Indent the LLM-provided code so that it sits inside the Python try block.
            const wrappedCode = [
                'import plotly.graph_objects as go',
                'import json',
                'try:',
                '    ' + LLMCode.replace(/\n/g, '\n    '),
                '    if "fig" in locals():',
                '        plotJson = fig.to_json()',
                '    else:',
                '        raise Exception("No \'fig\' variable found after code execution")',
                'except Exception as e:',
                '    print(f"Python execution error: {str(e)}")',
                '    raise'
            ].join('\n');

            await executeCode(pyodide, wrappedCode);
            ...
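The effect of the wrapper is easier to see on a concrete input. The following Python sketch mirrors the intended string assembly of the JavaScript function. It is an illustration only, not part of the original application, and `wrap_llm_code` is a hypothetical name. It checks that the result is syntactically valid without running it, so Plotly does not need to be installed.

```python
import textwrap


def wrap_llm_code(llm_code: str) -> str:
    """Indent the generated code under try: and append the check for a fig variable."""
    return "\n".join([
        "import plotly.graph_objects as go",
        "import json",
        "try:",
        textwrap.indent(llm_code, "    "),
        '    if "fig" in locals():',
        "        plotJson = fig.to_json()",
        "    else:",
        "        raise Exception(\"No 'fig' variable found after code execution\")",
        "except Exception as e:",
        '    print(f"Python execution error: {str(e)}")',
        "    raise",
    ])


llm_code = (
    'fig = go.Figure(data=go.Bar(x=["A", "B", "C"], y=[10, 20, 15]))\n'
    'fig.update_layout(title="Bar Chart Example")'
)

wrapped = wrap_llm_code(llm_code)
# compile() only parses the source; it raises SyntaxError if the indentation is off.
compile(wrapped, "<wrapped>", "exec")
print(wrapped)
```

One caveat of line-based indentation is that it also shifts the contents of any multi-line string literal in the generated code, so output that depends on exact whitespace inside such strings may change.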

If your code has Python dependencies (like Plotly), you can install them with micropip in the client-side JavaScript. Micropip supports Python wheels from PyPI, and many packages with C extensions are available as builds for Pyodide.

    await pyodide.loadPackage("micropip");
    const micropip = pyodide.pyimport("micropip");
    await micropip.install('plotly');

Improving application security with Wasm

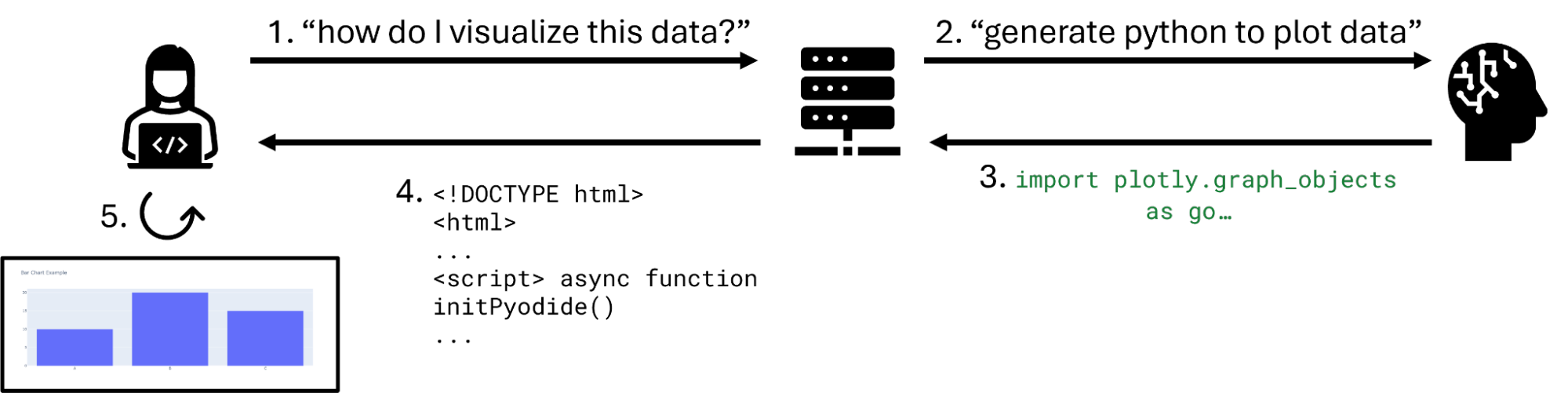

Imagine a scenario where the LLM returns malicious code, either as a result of prompt injection or error. In the case of the simplest agentic workflow, the call to eval results in the compromise of the application, potentially impacting the host operating system and other users as shown in Figure 3.

However, after applying the Wasm flow, there are two possible cases, illustrated in Figure 4. First, the application may throw an error because the malicious Python code cannot be executed in the narrowly scoped Pyodide runtime (for example, because a dependency is missing).

    Error: Traceback (most recent call last):
      File "/lib/python311.zip/_pyodide/_base.py", line 499, in eval_code
        .run(globals, locals)
         ^^^^^^^^^^^^^^^^^^^^
      File "/lib/python311.zip/_pyodide/_base.py", line 340, in run
        coroutine = eval(self.code, globals, locals)
                    ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
      File "<exec>", line 2, in <module>
    ModuleNotFoundError: No module named 'pymetasploit3'

Second, if the code does execute, it is restricted to the browser sandbox, which greatly limits any potential impact on the end user’s device.

In either case, using Pyodide improves security controls for the querying user while reducing risk to application resources and adjacent users.

Get started

Sandboxing LLM-generated Python with WebAssembly is a convenient approach that requires minimal changes to existing prompts and architectures. It is cost-effective because execution moves to the user's browser, reducing server-side compute requirements, and it provides both host and user isolation, improving the security of the service and its users. It is more robust than regular expressions or restricted Python libraries, and lighter weight than containers or virtual machines.

To get started improving application security for your agentic workflows using Wasm, check out this example on GitHub. Learn more about AI agents and agentic workflows.